Cheng-Yu Hsieh

@cydhsieh

PhD student @UWcse

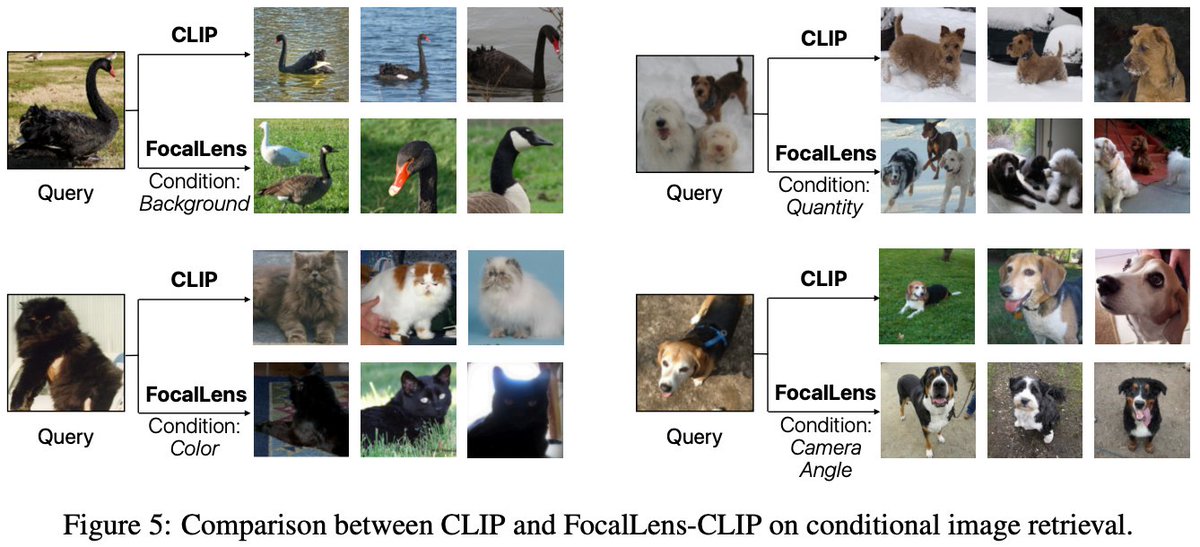

Excited to introduce FocalLens: an instruction tuning framework that turns existing VLMs/MLLMs into text-conditioned vision encoders that produce visual embeddings focusing on relevant visual information given natural language instructions! 📢: @HPouransari will be presenting…

I'm exited to announce that our work (AURORA) got accepted into #CVPR2025🎉! Special thanks to my coauthors: @ch1m1m0ry0, @cydhsieh, @ethnlshn, @Dongping0612, Linda Shapiro and @RanjayKrishna, This work wouldn’t have been possible without them! See you all in Nashville 🎸!

Introducing AURORA 🌟: Our new training framework to enhance multimodal language models with Perception Tokens; a game-changer for tasks requiring deep visual reasoning like relative depth estimation and object counting. Let’s take a closer look at how it works.🧵[1/8]

🔥We are excited to present our work Synthetic Visual Genome (SVG) at #CVPR25 tomorrow! 🕸️ Dense scene graph with diverse relationship types. 🎯 Generate scene graphs with SAM segmentation masks! 🔗Project link: bit.ly/4e1uMDm 📍 Poster: #32689, Fri 2-4 PM 👇🧵

Agentic AI will transform every enterprise–but only if agents are trusted experts. The key: Evaluation & tuning on specialized, expert data. I’m excited to announce two new products to support this–@SnorkelAI Evaluate & Expert Data-as-a-Service–along w/ our $100M Series D! ---…

1/8🧵 Thrilled to announce RealEdit (to appear in CVPR 2025)! We introduce a real-world image-editing dataset sourced from Reddit. Along with the training and evaluation datasets, we release our model that achieves SOTA performances on a variety of real-world editing tasks.

Stop by poster #596 at 10A-1230P tomorrow (Fri 25 April) at #ICLR2025 to hear more about Sigmoid Attention! We just pushed 8 trajectory checkpoints each for two 7B LLMs for Sigmoid Attention and a 1:1 Softmax Attention (trained with a deterministic dataloader for 1T tokens): -…

Small update on SigmoidAttn (arXiV incoming). - 1B and 7B LLM results added and stabilized. - Hybrid Norm [on embed dim, not seq dim], `x + norm(sigmoid(QK^T / sqrt(d_{qk}))V)`, stablizes longer sequence (n=4096) and larger models (7B). H-norm used with Grok-1 for example.

The 2nd Synthetic Data for Computer Vision workshop at @CVPR! We had a wonderful time last year, and we want to build on that success by fostering fresh insights into synthetic data for CV. Join us! We welcome submissions! Please consider submitting your work! (deadline: March…

(1/5)🚨LLMs can now self-improve to generate better citations✅ 📝We design automatic rewards to assess citation quality 🤖Enable BoN/SimPO w/o external supervision 📈Perform close to “Claude Citations” API w/ only 8B model 📄arxiv.org/abs/2502.09604 🧑💻github.com/voidism/SelfCi…

Introducing AURORA 🌟: Our new training framework to enhance multimodal language models with Perception Tokens; a game-changer for tasks requiring deep visual reasoning like relative depth estimation and object counting. Let’s take a closer look at how it works.🧵[1/8]

I will be presenting our Lookback Lens paper at #EMNLP2024 in Miami! 📆 Nov 13 (Wed) 4:00-5:30 at Tuttle (Oral session: ML for NLP 1) 🔗 arxiv.org/abs/2407.07071 Happy to chat about LLMs and hallucinations! See you soon in Miami! ✈️ @linluqiu @cydhsieh @RanjayKrishna @yoonrkim

🚨Can we "internally" detect if LLMs are hallucinating facts not present in the input documents? 🤔 Our findings: - 👀Lookback ratio—the extent to which LLMs put attention weights on context versus their own generated tokens—plays a key role - 🔍We propose a hallucination…

Hard negative finetuning can actually HURT compositionality, because it teaches VLMs THAT caption perturbations change meaning, not WHEN they change meaning! 📢 A new benchmark+VLM at #ECCV2024 in The Hard Positive Truth arxiv.org/abs/2409.17958 @cydhsieh @RanjayKrishna @uclanlp

🤔 In training vision models, what value do AI-generated synthetic images provide compared to the upstream (real) data used in training the generative models in the first place? 💡 We find using "relevant" upstream real data still leads to much stronger results compared to using…

Will training on AI-generated synthetic data lead to the next frontier of vision models?🤔 Our new paper suggests NO—for now. Synthetic data doesn't magically enable generalization beyond the generator's original training set. 📜: arxiv.org/abs/2406.05184 Details below🧵(1/n)

‼️ LLMs hallucinate facts even if provided with correct/relevant contexts 💡 We find models' attention weight distribution on input context versus their own generated tokens serves as a strong detector for such hallucinations 🚀 The detector transfers across models/tasks, and can…

🚨Can we "internally" detect if LLMs are hallucinating facts not present in the input documents? 🤔 Our findings: - 👀Lookback ratio—the extent to which LLMs put attention weights on context versus their own generated tokens—plays a key role - 🔍We propose a hallucination…