Chenhao Li

@breadli428

Doctoral fellow @ETH_AI_Center | Embodied intelligence and robot learning @leggedrobotics & LAS | Prev. @MIT, @ETH_en, @MPI_IS, @EPFL_en, @mcgillu.

🧠With the shift in humanoid control from pure RL to learning from demonstrations, we take a step back to unpack the landscape. 🔗breadli428.github.io/post/lfd/ 🚀Excited to share our blog post on Feature-based vs. GAN-based Learning from Demonstrations—when to use which, and why it…

It’s getting noisier and noisier every year. The reviews can come from any random dude. I don’t know where this ecosystem will lead us, but I don’t think it will be sustained or appreciated for long.

Does anyone know Adam?

You know robotics is maturing when RSL starts making art. Nice work, @breadli428!!

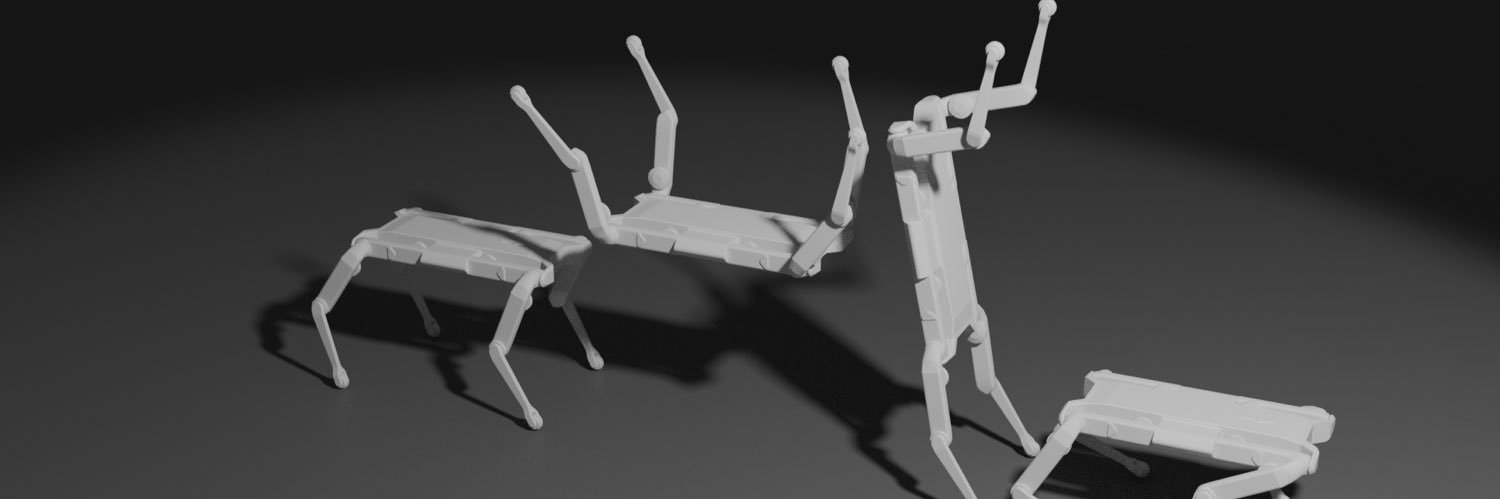

Beyond automation and precision, A Robot’s Dream asked something deeper: What does it mean for a robot to express—to participate in art, to reflect us back to ourselves? Proud to support this exploration at #LaBiennale by Ryan Batke and @breadli428. gramaziokohler.arch.ethz.ch/web/projekte/e…

I don’t see a difference between raising a kid and raising a model.

Check @catachiii’s work on combining model structure with RL training on whole-body control!

🐕 I'm happy to share that my paper, RAMBO: RL-augmented Model-based Whole-body Control for Loco-manipulation, has been accepted by IEEE Robotics and Automation Letters (RA-L) 🧶 Project website: jin-cheng.me/rambo.github.i… Paper: arxiv.org/abs/2504.06662

Everything needs a hard E-Stop 🚨

Please make this go viral so I can pay for repairs. Our humanoid robot boy DeREK completely lost his mind. @REKrobot

Please DM me if you have any thoughts on the post or have any suggestions to add work that I didn’t cover!

Check out our blog post by @breadli428. breadli428.github.io/post/lfd/

Though these methods are popular, practitioners often choose one without rigorous justification, attributing success to the method itself without considering that similar results could be achieved with alternatives, or without fully understanding why one works better than the other. We want to change that. 🔥

🚶Human motions have become popular for guiding RL training and making robots walk like real humans. 🤖 🚀 Excited to share our latest research on recent advances in this technology, with a guideline on best practices! 🔗breadli428.github.io/post/lfd/ 📄PDF: arxiv.org/pdf/2507.05906

This is a kind of learning from demonstrations (music pieces) where you condition the policy on future beats, no? Would be so cool to see (hear) it for real!

🎶Can a robot learn to play music? YES! — by teaching itself, one beat at a time 🎼 🥁Introducing Robot Drummer: Learning Rhythmic Skills for Humanoid Drumming 🤖 🔍 For details, check out: robotdrummer.github.io

This blog post benefited greatly from @frankzydou’s input, which changed my view of many algorithmic similarities between the two lines of methods studied. It made me realize how much the character animation and robotics communities interpret things similarly and…

I’m pleased to share a detailed blog on Feature-based vs. GAN-based Learning from Demonstrations: When and Why. This article provides valuable insights for those looking to scale RL systems using offline reference data, especially in the context of physics-based agent control.…

Added to must read list, always top quality work from @breadli428

MPC is not entirely dead: it can still provide accurate reference motions from which the RL policy gains robustness. Not sure if this is pathetic or encouraging for MPC folks, though...

Exactly 2 years ago during #Arche, my thesis supervisor @FJenelten51897 and I deployed this MPC+RL hybrid controller on very complicated collapsed buildings. Two years later, its performance is still top tier! 😎 Paper: science.org/doi/10.1126/sc…

Very well-written post on motion imitation.

The deep insights from a guy working on the frontiers of LfD

Very timely. Read this!

🔥 Happening now @ETH_en, RobotX Innovation Day is attracting huge attention from the robotics community! 🎉 🤖 Here is a quick walk-around: @leggedrobotics @crl_ethz @cvg_ethz @ASL_ETHZ @srl_ethz @eth_dmavt @CSatETH @ETH_AI_Center @duatic_ag @FlexionRobotics @mimicrobotics …