bayes

@bayeslord

we are accelerating. ceo @ stealth

the growth rate difference here matters most. we can catch up, but it will take hard scaling of literally every source of energy to do it

There are really 2 bitter lessons in AI. This is the second.

we are really, really, really early. we’ve hardly built any gpus, and we know next to nothing about the algorithmic limits of intelligence

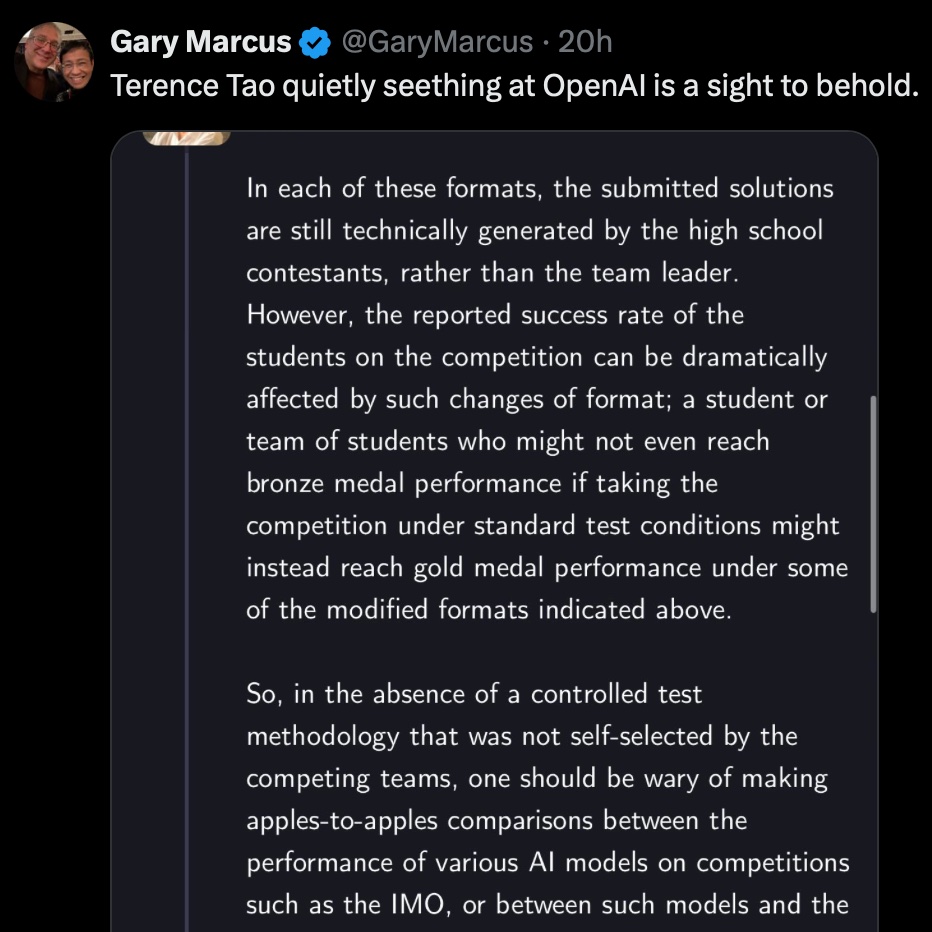

sorry, who does gary marcus think he's kidding here? you are the number one ai seether in the world, bro

if you're so smart, why are you not treating your brain like the extremely jailbreakable and prompt hackable generative model it is?

system prompted o3 not to editorialize and it went 99% nonverbal. seems like a pretty high level of self understanding

what % of your max aura level do you think you're at on average? my guess for most people is <10%

you can literally walk around in effortless max aura mode 100% of the time. i consider it a public service. it makes most people perk up noticeably. they instantly seem happier, more at ease, etc

i don’t know anyone who thought this

remember when we used to believe, like three years ago, that proof of AGI would be when one is unable to tell the natural language output of a machine from that of a human?

the reason it’s called “chatgpt psychosis” and not “guy suddenly believes many true things” is because the models are still too stupid to deal with that sort of situation in a high iq+eq way. this will definitely change