Juntang

@archanfel_anoth

xAI grok-mini pretrain lead, ex-OpenAI, sole inventor of the GPT-4 Turbo long-context algo. Core contributor to GPT-4o, GPT-4, GPT-4 Turbo, DALL-E 3, and OAI Embedding v3.

Excited to be a member of the amazing team at @xai and to ship the best grok3! Thrilled to lead grok3-mini training, and we will ship it to all users for free in the coming days! LFG!

Thrilled that you like it!

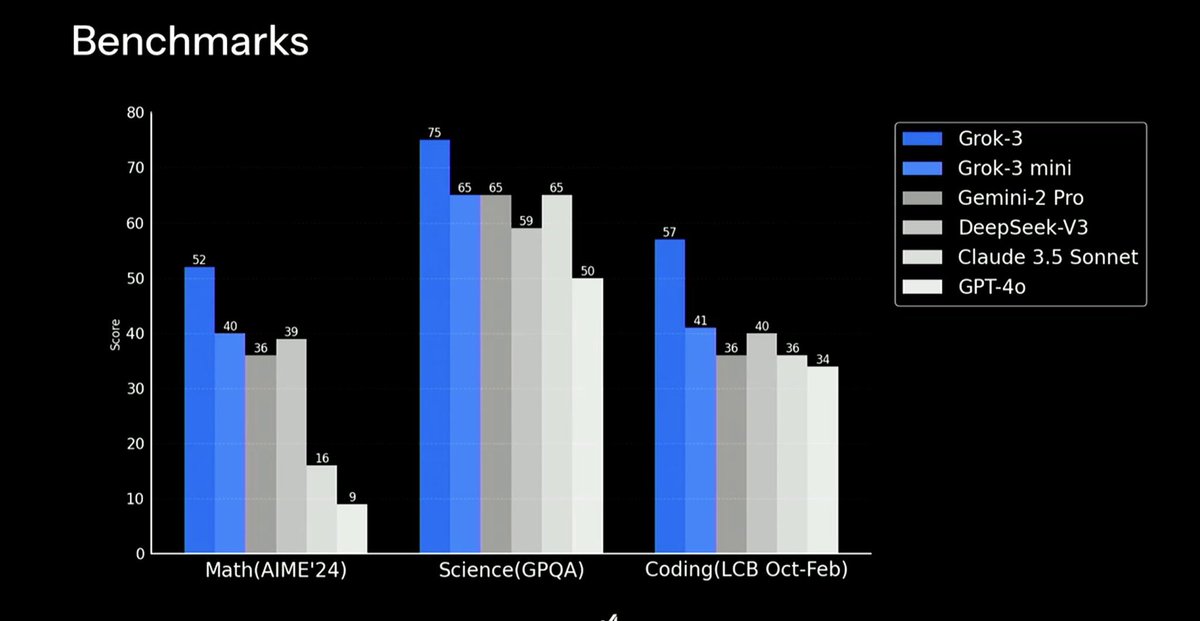

Grok-3-mini is intelligence that is too cheap to meter! It's just below Sonnet 3.7 thinking, but it is priced very competitively: $0.30 per 1M input tokens and $0.50 per 1M output tokens. Cheap and performant models are the future! We need trillions of tokens to automate all work.

We are hiring researchers and engineers at xAI to build next-gen native multimodal models. Please apply online or DM me if you are interested! job-boards.greenhouse.io/xai/jobs/46846…

Glad you like it! We are working hard to improve grok3 and will continue to deliver grok4!

I must say, I’m starting to like this Grok 3 guy!☺️ One thing I noticed today is that it truly seems to understand (Grok) what it’s talking about in the Richard Feynman way of explaining things: “If you can’t explain something in simple terms, you don’t really understand it.”

Try grok3 for free!

This is it: The world’s smartest AI, Grok 3, now available for free (until our servers melt). Try Grok 3 now: x.com/i/grok

X Premium+ and SuperGrok users will have increased access to Grok 3, in addition to early access to advanced features like Voice Mode.

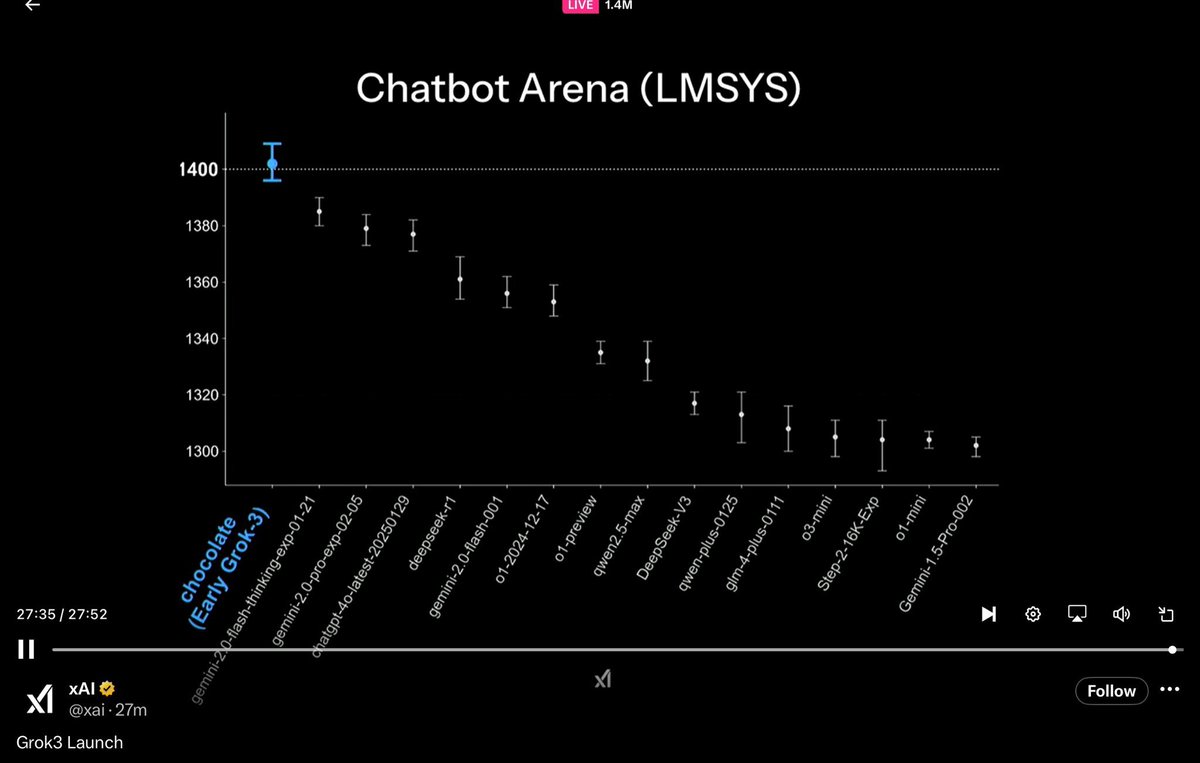

BREAKING: @xAI's early version of Grok-3 (codename "chocolate") is now #1 in Arena! 🏆

Grok-3 is:
- The first-ever model to break a score of 1400!
- #1 across all categories, a milestone that keeps getting harder to achieve

Huge congratulations to @xAI on this milestone!

OpenAI Sora was out of the game on day one. To be fair, I compared it with Hunyuan, which dropped 6 days ago; it's an open-source AI model that you can run locally for free. TBH, if I were to compare it to Hailuo AI or Kling AI, Sora would look even worse. Let’s dive in:

The (true) story of development and inspiration behind the "attention" operator, the one in "Attention is All you Need" that introduced the Transformer. From personal email correspondence with the author @DBahdanau ~2 years ago, published here and now (with permission) following…