Athul Paul Jacob

@apjacob03

@MIT_CSAIL @UWaterloo | Co-created AIs: CICERO, Diplodocus | Ex: @google, @generalcatalyst, FAIR @MetaAI, @MSFTResearch AI, @MITIBMLab, @Mila_Quebec

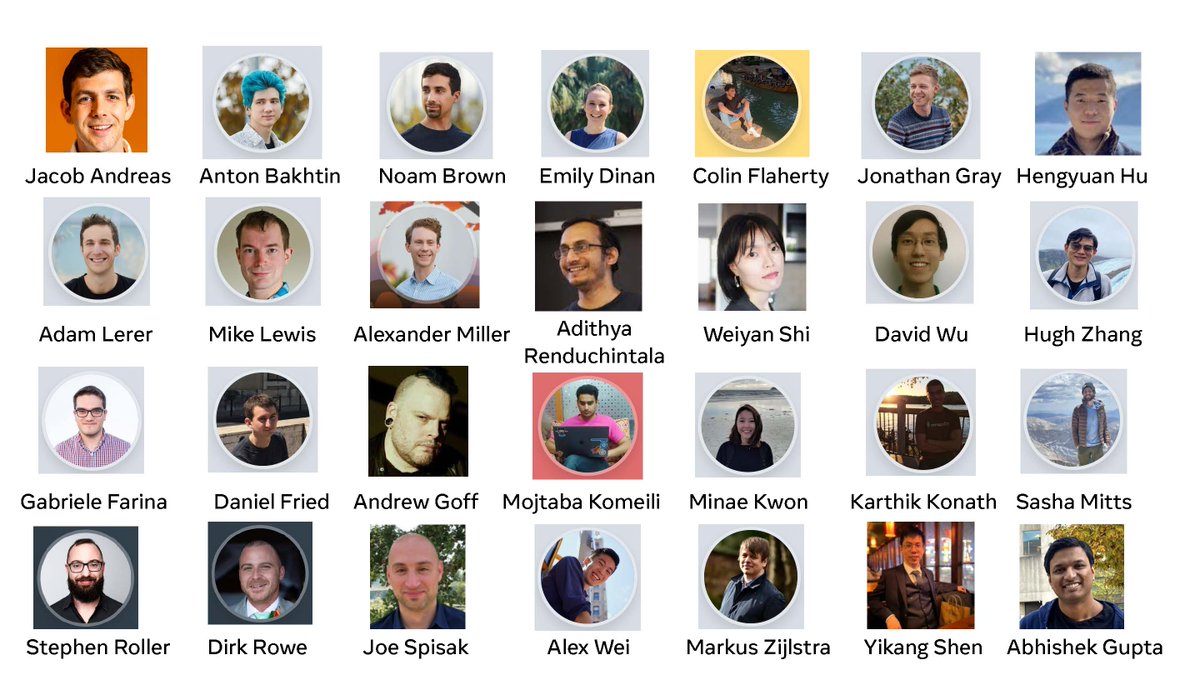

Super excited to share that I've defended my PhD @MIT_CSAIL! 🎓 I want to extend my immense gratitude to my advisor, @jacobandreas, and my thesis committee — @KonstDaskalakis, @gabrfarina, and @roger_p_levy. Huge thanks to @polynoamial, @adamlerer, @em_dinan, @ml_perception, and…

Leshem Choshen (@LChoshen) is developing the technical+social infrastructure needed to support communal training of large-scale ML systems. Before MIT, he was one of the inventors of "model merging" techniques; these days he's thinking about collecting & learning from user feedback.

You've got a couple of GPUs and a desire to build a self-driving car from scratch. How can you turn those GPUs and self-play training into an incredible-looking agent? Come to W-821 at 4:30 in B2-B3 to learn from @Mdjxjxnsk @tw_killian @ozansener

Still in stealth but our team has grown to 20 and we're still hiring. If you're interested in joining the research frontier of deploying RL+LLM systems, shoot me an email to chat at ICML!

Check out our latest research on data. We're releasing 24T tokens of richly labelled web data. We found it very useful for our internal data curation efforts. Excited to see what you build using Essential-Web v1.0!

[1/5] 🚀 Meet Essential-Web v1.0, a 24-trillion-token pre-training dataset with rich metadata built to effortlessly curate high-performing datasets across domains and use cases!

Hello everyone! We are quite a bit late to the Twitter party, but welcome to the MIT NLP Group account! Follow along for the latest research from our labs as we dive deep into language, learning, and logic 🤖📚🧠

Past work has shown that world state is linearly decodable from LMs trained on text and games like Othello. But how do LMs *compute* these states? We investigate state tracking using permutation composition as a model problem, and discover interpretable, controllable procedures🧵

There are so many cool things that can be built today with RL. It was great catching up with @natolambert and talking about RL scaling and self-driving.

An RL podcast that isn't just about reasoning LMs :D -- so much exciting stuff continuing to work in RL. In 2022 people slept on the RL for control innovations bc of Stable Diffusion/ChatGPT. In 2025 people slept on RL innovations there bc of reasoning. It doesn't need to be…

Hiring researchers and engineers for a stealth, applied research company with a focus on RL x foundation models. The team already includes leading RL / learning researchers. If you think you'd be good at the research needed to get things working in practice, email me.

1.5 yrs ago, we set out to answer a seemingly simple question: what are we *actually* getting out of RL in fine-tuning? I'm thrilled to share a pearl we found on the deepest dive of my PhD: the value of RL in RLHF seems to come from *generation-verification gaps*. Get ready to🤿!

Model-free deep RL algorithms like NFSP, PSRO, ESCHER, & R-NaD are tailor-made for games with hidden information (e.g. poker). We performed the largest-ever comparison of these algorithms. We find that they do not outperform generic policy gradient methods, such as PPO. 1/N

We've built a simulated driving agent that we trained on 1.6 billion km of driving with no human data. It is SOTA on every planning benchmark we tried. In self-play, it goes 20 years between collisions.

Ekin Akyürek (@akyurekekin) builds tools for understanding & controlling algorithms that underlie reasoning in language models. You’ve likely seen his work on in-context learning; I'm just as excited about past work on linguistic generalization & future work on test-time scaling.

Pratyusha Sharma (@pratyusha_PS) studies how language shapes learned representations in artificial and biological intelligence. Her discoveries have shaped our understanding of systems as diverse as LLMs and sperm whales.

Is your CS dept worried about what academic research should be in the age of LLMs? Hire one of my lab members! Leshem Choshen (@LChoshen), Pratyusha Sharma (@pratyusha_PS) and Ekin Akyürek (@akyurekekin) are all on the job market with unique perspectives on the future of NLP: 🧵

The (true) story of the development and inspiration behind the "attention" operator, the one in "Attention Is All You Need" that introduced the Transformer. From personal email correspondence with the author @DBahdanau ~2 years ago, published here and now (with permission) following…

Very happy to hear that GANs are getting the Test of Time award at NeurIPS 2024. The NeurIPS Test of Time awards are given to papers which have stood the test of time for a decade. I took some time to reminisce about how GANs came about and how AI has evolved in the last decade.

Thank you to @NeurIPSConf for this recognition of the Generative Adversarial Nets paper published ten years ago with my colleagues @goodfellow_ian, Jean Pouget-Abadie, @memimo, @bingxu_, @dwf, @sherjilozair and @AaronCourville.

Announcing the NeurIPS 2024 Test of Time Paper Awards: blog.neurips.cc/2024/11/27/ann…

How do LLMs learn to reason from data? Are they ~retrieving the answers from parametric knowledge🦜? In our new preprint, we look at the pretraining data and find evidence against this: Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢 🧵⬇️

Super excited to share NNetnav: A new method for generating complex demonstrations to train web agents, driven entirely via exploration! Here's how we're building useful browser agents, without expensive human supervision: 🧵👇 Code: github.com/MurtyShikhar/N… Preprint:…

Why do we treat train and test times so differently? Why is one "training" and the other "in-context learning"? Just take a few gradient steps at test time (a simple way to increase test-time compute) and get SoTA on the ARC public validation set: 61%, matching the average human score! @arcprize