Ignacio Alzugaray

@alzugarayign

PostDoc @ Imperial College, Dyson Robotics Lab. Visual SLAM & Scene Understanding.

Just arrived in Abu Dhabi for #IROS2024. Looking forward to catching up with old friends. We will present this fantastic RA-L collaboration with @rmurai0610, @paulhjkelly and @AjdDavison during Multi-Robot Systems III (Wed 17.30h, Room 11). I am also chairing the session!

We'll be presenting "Distributed Simultaneous Localisation and Auto-Calibration using GBP" at #IROS2024. We show that distributed Gaussian Belief Propagation (GBP) can be effectively used to localise and auto-calibrate many robots. Please come to our presentation (Wed 17:30, Room 11) if you're around!
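To make "distributed GBP" concrete, here is a toy sketch under assumptions that are ours, not the paper's: each robot has a single scalar position, robot 0 has an absolute prior, and neighbouring robots share a noisy relative measurement. The paper's formulation also auto-calibrates sensor parameters, which this sketch does not model. Each robot only needs the messages arriving from the factors it is attached to, which is what makes the scheme distributable. The function `gbp_chain` and all its parameters are hypothetical.

```python
# Toy GBP on a 1D chain of robots (hypothetical setup, not the paper's model).
# Robot i has an unknown scalar position x_i. Robot 0 has an absolute prior and
# factor k links robots k and k+1 through a measurement z_k of x_{k+1} - x_k.
# Gaussians are kept in information form: (eta, lam) = (precision * mean, precision).


def gbp_chain(prior_mean, prior_prec, rel_meas, meas_prec, iters=20):
    n = len(rel_meas) + 1
    unary = [(0.0, 0.0)] * n
    unary[0] = (prior_prec * prior_mean, prior_prec)
    # f2v[(k, v)]: message from factor k to variable v, initially uninformative.
    f2v = {}
    for k in range(n - 1):
        f2v[(k, k)] = (0.0, 0.0)
        f2v[(k, k + 1)] = (0.0, 0.0)

    def var_to_factor(v, skip):
        """Unary term times every incoming factor message except the one from `skip`."""
        eta, lam = unary[v]
        for (k, dst), (e, l) in f2v.items():
            if dst == v and k != skip:
                eta += e
                lam += l
        return eta, lam

    for _ in range(iters):
        new = {}
        for k, z in enumerate(rel_meas):
            w = meas_prec
            # Factor energy w/2 (x_{k+1} - x_k - z)^2 in information form:
            # Lambda = w [[1, -1], [-1, 1]], eta = w [-z, z].
            for src, dst, eta_src, eta_dst in ((k, k + 1, -w * z, w * z),
                                               (k + 1, k, w * z, -w * z)):
                e_in, l_in = var_to_factor(src, k)
                lam_ss = w + l_in  # source block after absorbing its incoming message
                # Marginalise out the source variable (Schur complement, off-diagonal -w).
                new[(k, dst)] = (eta_dst + w * (eta_src + e_in) / lam_ss,
                                 w - w * w / lam_ss)
        f2v = new  # synchronous update: every message uses the previous iteration

    beliefs = [var_to_factor(v, skip=None) for v in range(n)]
    return [eta / lam for eta, lam in beliefs]
```

For example, `gbp_chain(0.0, 100.0, [1.0, 2.0, 0.5], 10.0)` should return means very close to `[0.0, 1.0, 3.0, 3.5]`. A chain is a tree, so synchronous GBP recovers the exact marginal means once messages have crossed the whole graph; on loopy graphs GBP is iterative and convergence is not guaranteed in general.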

COMO will be presented as an 𝐨𝐫𝐚𝐥 at #ECCV2024! Also come see the 𝐥𝐢𝐯𝐞 𝐝𝐞𝐦𝐨 of our compact 3D representation for real-time monocular SLAM.
Oral: Session 2C at 1:40 PM on Tue 1 Oct
Poster: #181 at 4:30-6:30 PM on Tue 1 Oct
Demo: Thu 3 Oct AM
edexheim.github.io/como/

How can we infer 3D-consistent poses and dense geometry in real-time given only RGB images? 𝗖𝗢𝗠𝗢 decodes dense geometry from a compact and optimizable set of 3D anchor points to enforce 3D consistency. Project page: edexheim.github.io/como/ Work with @AjdDavison 1/n

We are presenting this today at #IROS2024! Multi-Robot Systems III, 17.30h, Room 11. I am chairing the session, so come say hi!

Collaboration with: @alzugarayign @paulhjkelly @AjdDavison Paper: arxiv.org/abs/2401.15036 Project Page: rmurai.co.uk/projects/GBP-A…

(1/3) Hyperion: arxiv.org/abs/2407.07074 CT-SLAM, the continuous-time variant of SLAM, differs from other SLAM approaches in that, instead of modeling an agent's trajectory as a sequence of keyframes, it models the trajectory as a spline. Thus, a smooth estimate exists for any time t.
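A minimal sketch of why a spline yields an estimate at any time t, assuming a uniform cubic B-spline over vector-valued control points spaced `dt` apart, with `t0` marking the start of the first valid segment. It covers positions only; Hyperion's SymForce-based B- and Z-splines and its handling of full poses are not modelled here. The names `bspline_basis` and `evaluate` are hypothetical.

```python
import math


def bspline_basis(u):
    """Uniform cubic B-spline weights for the local parameter u in [0, 1].

    The four weights are non-negative and always sum to 1.
    """
    return (
        (1.0 - u) ** 3 / 6.0,
        (3.0 * u**3 - 6.0 * u**2 + 4.0) / 6.0,
        (-3.0 * u**3 + 3.0 * u**2 + 3.0 * u + 1.0) / 6.0,
        u**3 / 6.0,
    )


def evaluate(ctrl, t0, dt, t):
    """Evaluate the spline at time t.

    ctrl: control points as tuples of floats. Segment s lasts dt, starts at
    t0 + s * dt and is shaped by the four control points ctrl[s:s + 4].
    """
    n_seg = len(ctrl) - 3
    if n_seg < 1:
        raise ValueError("a cubic B-spline needs at least 4 control points")
    x = (t - t0) / dt
    if x < 0.0 or x > n_seg:
        raise ValueError("t is outside the time span covered by the spline")
    s = min(int(math.floor(x)), n_seg - 1)  # the last segment also covers its end point
    u = x - s  # local parameter within segment s
    w = bspline_basis(u)
    pts = ctrl[s:s + 4]
    # Weighted blend of 4 neighbouring control points, per dimension.
    return tuple(sum(w[j] * pts[j][d] for j in range(4)) for d in range(len(pts[0])))
```

With control points on a straight line, e.g. `ctrl = [(float(k), 2.0 * k) for k in range(6)]`, `evaluate(ctrl, 0.0, 1.0, 0.25)` gives approximately `(1.25, 2.5)`. Any t inside the span can be queried, not just keyframe times. Note that B-splines generally do not pass through their control points; they blend them smoothly.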

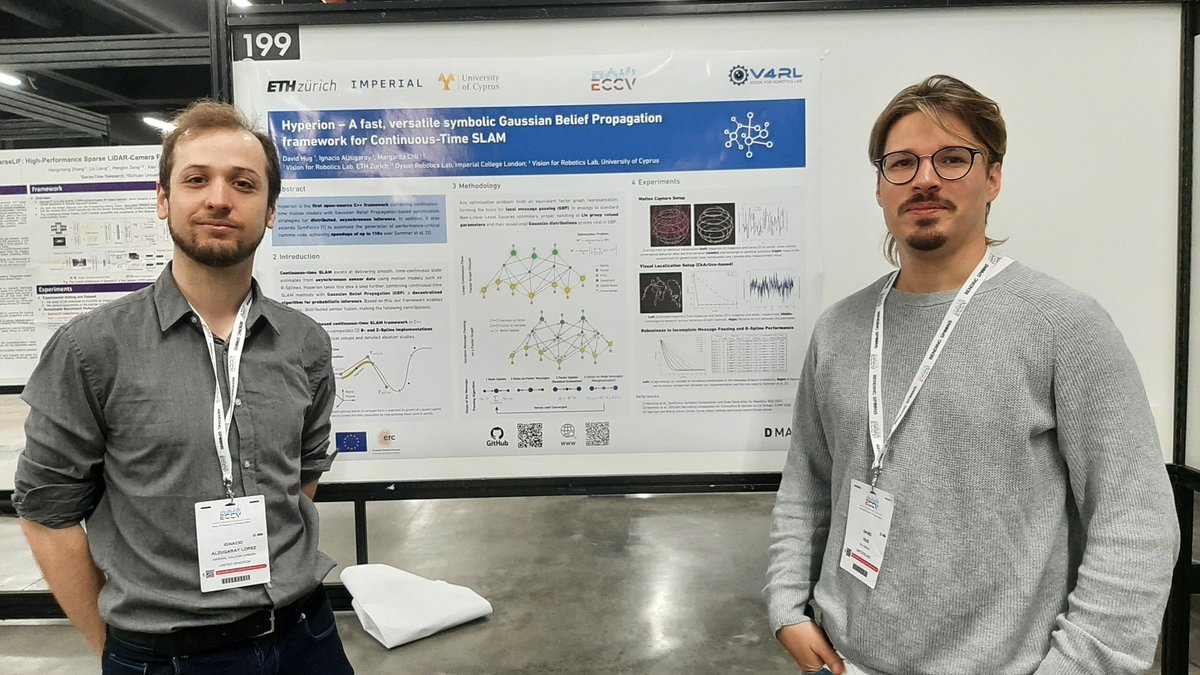

We are presenting Hyperion at #ECCV2024 TODAY! Come by poster 199. Continuous-time SLAM + Gaussian Belief Propagation, with applications to multi-agent systems. A collaboration with David Hug and @MargaritaChli. High-performance code is open-sourced: github.com/VIS4ROB-lab/hy…

One of the most impressive demos I have seen in recent years, presented at #ECCV2024: monocular (RGB-only!) dense SLAM running on a consumer laptop at 30 FPS. COMO by @eric_dexheimer and @AjdDavison, also open source: edexheim.github.io/como/

Hyperion: A fast, versatile symbolic Gaussian Belief Propagation framework for Continuous-Time SLAM. David Hug, @alzugarayign, @MargaritaChli. tl;dr: fastest SymForce-based B- and Z-Splines; symbolic GBP-based continuous-time SLAM. arxiv.org/pdf/2407.07074

PixRO: Pixel-Distributed Rotational Odometry with Gaussian Belief Propagation. @alzugarayign, @rmurai0610, @AjdDavison. tl;dr: each pixel -> local message-passing communication with neighbouring pixels -> global rotation; GBP -> distributed optimization. arxiv.org/pdf/2406.09726
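As a toy illustration of "local message passing with neighbouring pixels -> global estimate", the sketch below runs GBP on a 4-connected pixel grid. The assumptions are ours, not PixRO's: each pixel holds a noisy local estimate of one shared scalar (think of a single rotation-rate component), neighbouring pixels are tied by smoothness factors, and there is no image-formation or rotation model. Every update uses only a pixel's own value and its neighbours' messages. `gbp_grid_consensus` and its parameters are hypothetical.

```python
# Toy GBP on a 4-connected pixel grid (hypothetical setup, not PixRO's model).
# Pixel p holds a noisy local estimate y_p of one shared scalar. Unary factors
# (unary_prec/2)(x_p - y_p)^2 and pairwise factors (pair_prec/2)(x_p - x_q)^2
# between neighbours. Messages are Gaussians in information form (eta, lam).


def gbp_grid_consensus(local, unary_prec=1.0, pair_prec=1.0, iters=300):
    height, width = len(local), len(local[0])
    pixels = [(r, c) for r in range(height) for c in range(width)]

    def neighbours(p):
        r, c = p
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            if 0 <= r + dr < height and 0 <= c + dc < width:
                yield (r + dr, c + dc)

    # msg[(a, b)]: message to pixel b from the factor linking a and b.
    msg = {(a, b): (0.0, 0.0) for a in pixels for b in neighbours(a)}

    def gather(p, exclude=None):
        """Pixel p's unary term times incoming messages, optionally skipping one neighbour."""
        eta, lam = unary_prec * local[p[0]][p[1]], unary_prec
        for q in neighbours(p):
            if q != exclude:
                e, l = msg[(q, p)]
                eta += e
                lam += l
        return eta, lam

    for _ in range(iters):
        new = {}
        for a, b in msg:
            # Each message only needs pixel a's own data and a's other neighbours.
            e_in, l_in = gather(a, exclude=b)
            lam_aa = pair_prec + l_in
            # Marginalise x_a out of the pairwise factor (Schur complement).
            new[(a, b)] = (pair_prec * e_in / lam_aa, pair_prec - pair_prec**2 / lam_aa)
        msg = new  # synchronous update

    means = []
    for r in range(height):
        row = []
        for c in range(width):
            eta, lam = gather((r, c))
            row.append(eta / lam)
        means.append(row)
    return means
```

Because all unary factors share the same precision, the exact solution keeps the average of the local estimates while pulling outliers towards their neighbours. This grid model is attractive and positive definite, a case in which GBP is known to converge to the exact means even though the graph has loops.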

Ignacio will present our work on global camera motion estimation with pixel-level processing (getting ready for the future of "On-Sensor Vision") at the CVPR CCD Workshop on Tuesday this week. Please go and meet him there!

I am thrilled to attend @CVPR and present a sneak peek of our work at the CCD Workshop: PixRO (arxiv.org/abs/2406.09726), w/ @rmurai0610 & @AjdDavison. TL;DR: How can vision-based motion estimation tasks be distributed across pixel processors? (cont'd) youtu.be/19b2TwUirGs

This is happening TODAY at #CVPR2024! Come and check out the poster at the Computational Cameras and Displays Workshop, Arch 204, 11.15h-12.30h, Poster 320. @CVPR @CVPRConf #ComputerVision #SLAM #OpticalFlow