Alex Shaw

@alexgshaw

Researching @LaudeInstitute & investing @LaudeVentures. Co-creator of Terminal Bench. Formerly Google. BYU alum.

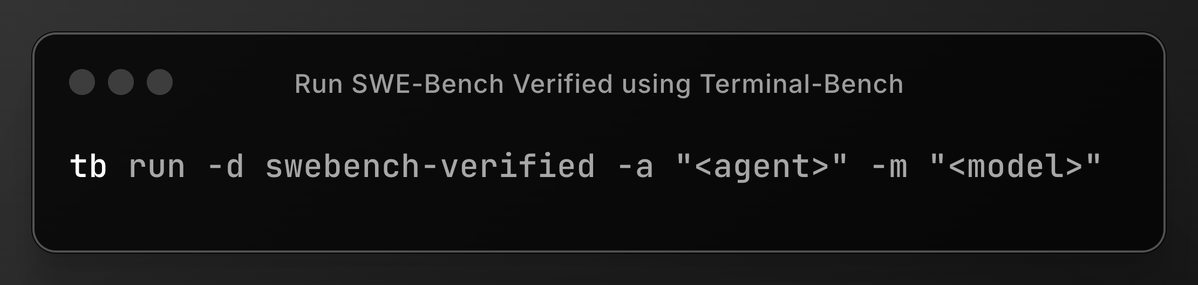

Evaluating agents on benchmarks is a pain. Each benchmark comes with its own harness, scoring scripts, and environments, and integrating them can take days. We're introducing the Terminal-Bench dataset registry to solve this problem. Think of it as the npm of agent benchmarks. Now…

Hill climbing Terminal-Bench means getting good at text-based computer use, not just coding.

I'm making a list of all the non-coding things people are doing with Claude Code. What are you using Claude Code for?

K Prize round one results are live. Huge congrats to Eduardo for taking the top spot. A solo builder from Brazil, his winning submission correctly closed 9 out of 120 GitHub issues. $50K prize ($278k BRL!)

Using Terminal-Bench for evaluating coding capabilities!🥰

>>> Qwen3-Coder is here! ✅ We’re releasing Qwen3-Coder-480B-A35B-Instruct, our most powerful open agentic code model to date. This 480B-parameter Mixture-of-Experts model (35B active) natively supports 256K context and scales to 1M context with extrapolation. It achieves…

Great results for the open-source community :) Congrats @Alibaba_Qwen


The world is moving towards agents.
Static benchmarks don't measure what agents do best (multi-turn reasoning).
Thus, interactive benchmarks:
* Terminal Bench (@alexgshaw, @Mike_A_Merrill)
* Text Arena (@LeonGuertler)
* BALROG (@PaglieriDavide, @_rockt)
* ARC-AGI-3 (@arcprize)

Terminals are amazing. You'll never regret mastering the shell & unix fundamentals (pipes, processes, filesystem), and key tools (curl, dig, jq, ssh, tmux, apt, nvim…). Especially given agents' propensity to wield these.

I’m excited to share that I started a new role today as the first Research Partner at @LaudeInstitute and @LaudeVentures! My first interaction with Laude Ventures was over a year ago. As a fellow researcher-turned-founder of @SnorkelAI out of @StanfordAILab, I had a lot of…

Terminal Bench is a cool benchmark I just came across! CLI SWE agents must complete tasks like
- Build Linux kernel
- Configure git server
- Train an ML model
Take-away: Claude 4 models are GOATed (the lead Warp model is a combo of sonnet and opus).

Today, we're announcing a preview of ARC-AGI-3, the Interactive Reasoning Benchmark with the widest gap between easy for humans and hard for AI.
We’re releasing:
* 3 games (environments)
* $10K agent contest
* AI agents API
Starting scores - Frontier AI: 0%, Humans: 100%

Terminal-Bench and Warp featured in TechCrunch today 🚀 Agents operating computers using terminals is becoming a powerful paradigm. Btw, we have something exciting to share this week so stay tuned :)

Terminal-Bench and @warpdotdev @zachlloydtweets in TechCrunch today :) (link in replies) I firmly believe that the future of LLM-Computer interaction is through something that looks like a terminal interface. Great to see this picking up steam.

Congrats to the OpenHands team!

OpenHands is live on TerminalBench and gets 41.3% with claude-4-sonnet, 6 points better than Claude Code! If you want to use an agent that can use the terminal, in your terminal -- try out the OpenHands CLI.

It's great to see Terminal-Bench on the Kimi K2 model card. We love open source models, and just made it even easier to test them by adding better support for local models to our harness through LiteLLM.

Congrats to the Daytona team, we’ve already started integrating them into Terminal-Bench!

BREAKING: @daytonaio just became the fastest-growing infrastructure company in history. $0 → $1M ARR in 2 months. Faster than Stripe. Faster than Vercel. Faster than AWS. Yes, really. 🧵👇