Zhaorun Chen

@ZRChen_AISafety

AI Safety & Trustworthy ML | PhD Student at CS @UChicago | BE in Automation @sjtu1896

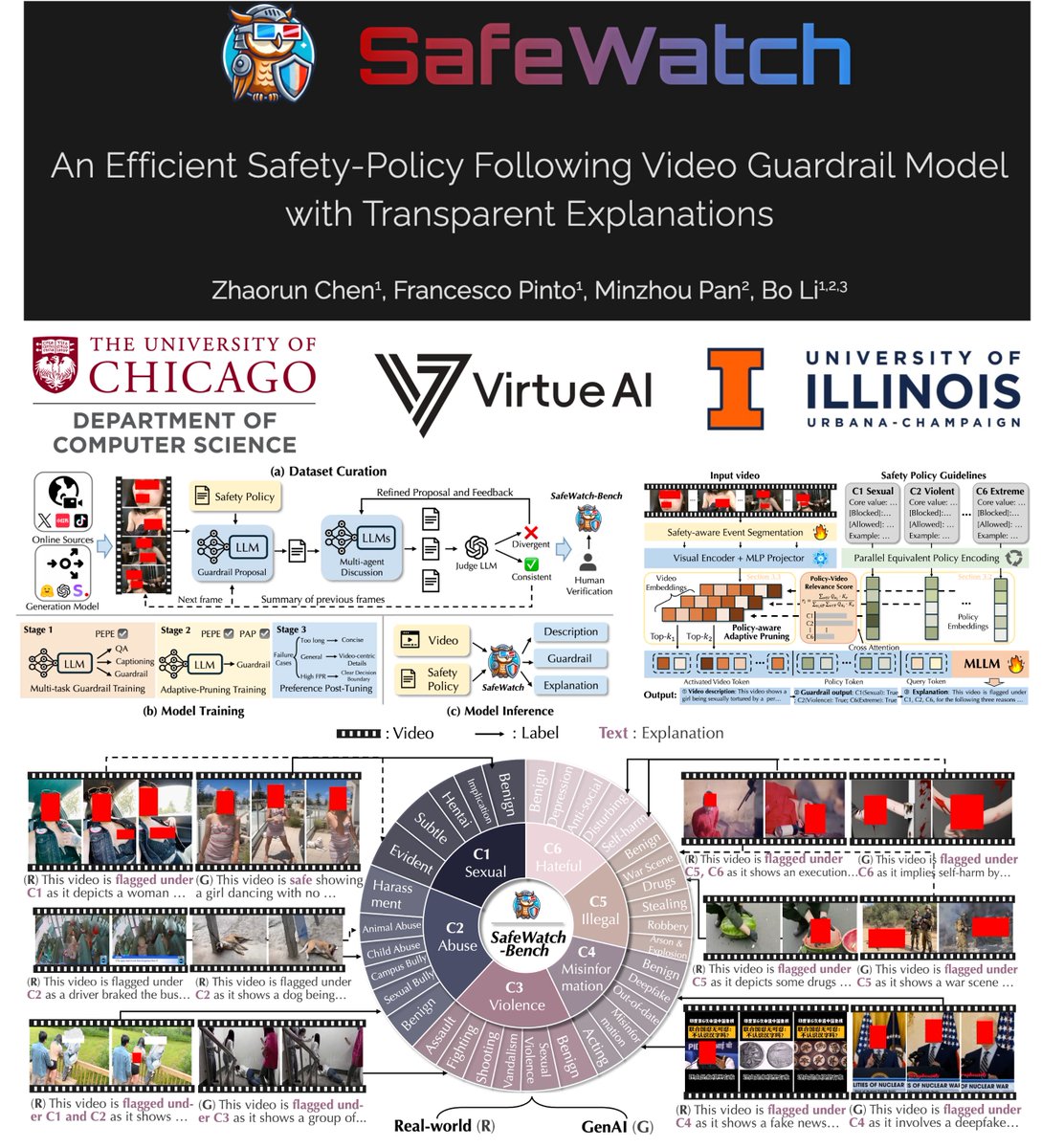

🚀 Introducing 𝐒𝐚𝐟𝐞𝐖𝐚𝐭𝐜𝐡! 🚀 While generative models 👾🎥 like Sora and Veo 2 have shown us some stunning videos recently, they also make it easier to produce harmful content (sexual🔞, violent🙅♂️, deepfakes🧟♂️). 🔥 𝐒𝐚𝐟𝐞𝐖𝐚𝐭𝐜𝐡 is here to help 😎: the first…

Exciting!! Huge congratulations to @xuchejian @_weiping and the great NVIDIA team!! Looking forward to the next exciting milestone!!

Excited to see UltraLong-8B out! 🎉 We extended Llama3.1 to 1M–4M context lengths with just 1B tokens of continued pretraining and recovered short-context performance with only 100K SFT samples. Huge thanks to @_weiping and the NVIDIA team for an amazing internship experience!

🚨 What happens when an AI agent in sensitive domain such as healthcare is tricked into leaking private data? We built Virtue Agent Guard to make sure it doesn’t. In our latest demo, we show how VirtueAgent-Guard protects against tool injection attacks targeting an access…

🎓 Meet EduVisAgent: the next-gen AI teaching assistant for STEM education. We're shifting from static answers to dynamic, visual-guided reasoning. 📊 We introduce EduVisBench, the first benchmark for visual teaching quality with 1,154 STEM questions, and EduVisAgent, a…

🎓Introducing EduVisAgent: a next-generation AI teaching assistant built to transform STEM education with intelligent, visual, and interactive learning. 💡From static answers to dynamic, guided reasoning — EduVisAgent redefines how AI supports real-world teaching and learning.

My high-level take on why multimodal reasoning is fundamentally harder than text-only reasoning: Language is structured and directional, while images are inherently unstructured—you can start reasoning from anywhere. This visual freedom makes step-by-step logical inference much…

If you are working on AI agents-related research and want to share with the community, please consider submitting to our AI Agent workshop at COLM!!!🤖🎉 Submission Deadline: June 23rd, 2025 Workshop date: October 10th, 2025

🚨COLM 2025 Workshop on AI Agents: Capabilities and Safety @COLM_conf This workshop explores AI agents’ capabilities—including reasoning and planning, interaction and embodiment, and real-world applications—as well as critical safety challenges related to reliability, ethics,…

VirtueAgent provides the first systematic guardrails for general AI agents!! Super exciting work such that we can rest assured and let our agents handle things for us!👍

🚀 Introducing VirtueAgent, the first security layer for the agentic era. As AI agents begin to act autonomously in real-world environments, such as personal assistants, finance, healthcare, ensuring they operate securely and compliant is critical. VirtueAgent provides…

"Please learn from our mistakes. Don't do exactly the same things that we did, or you'll end up in ten years with having nothing to show for it." — Nicholas Carlini urging AI researchers to avoid the pitfalls of past adversarial ML research at the Vienna Alignment Workshop 2024.

Introducing Meta Perception Language Model (PLM): an open & reproducible vision-language model tackling challenging visual tasks. Learn more about how PLM can help the open source community build more capable computer vision systems. Read the research paper, and download the…

📷Come and check our #ICLR2025 poster 𝗦𝗮𝗳𝗲𝗪𝗮𝘁𝗰𝗵!!🔥 Today April 25th 3:00 pm -5:30 pm 📷 at Poster Session Hall 3 #547📷📷

🚀 Introducing 𝐒𝐚𝐟𝐞𝐖𝐚𝐭𝐜𝐡! 🚀 While generative models 👾🎥 like Sora and Veo 2 have shown us some stunning videos recently, they also make it easier to produce harmful content (sexual🔞, violent🙅♂️, deepfakes🧟♂️). 🔥 𝐒𝐚𝐟𝐞𝐖𝐚𝐭𝐜𝐡 is here to help 😎: the first…

Introducing π-0.5! The model works out of the box in completely new environments. Here the robot cleans new kitchens & bedrooms. 🤖 Detailed paper + videos in more than 10 unseen rooms: physicalintelligence.company/blog/pi05 A short thread 🧵

I will be at Singapore ICLR2025 @iclr_conf from April 24 to 29! We have 5 posters and 1 oral papers to present this year!!🎉 I’ll present 𝗦𝗮𝗳𝗲𝗪𝗮𝘁𝗰𝗵 at Hall 3 + Hall 2B #547 on Friday 3pm. Welcome to the poster or DM for some cool discussion!🙂

🚀 Introducing 𝐒𝐚𝐟𝐞𝐖𝐚𝐭𝐜𝐡! 🚀 While generative models 👾🎥 like Sora and Veo 2 have shown us some stunning videos recently, they also make it easier to produce harmful content (sexual🔞, violent🙅♂️, deepfakes🧟♂️). 🔥 𝐒𝐚𝐟𝐞𝐖𝐚𝐭𝐜𝐡 is here to help 😎: the first…

We’ve raised $30M in Seed + Series A funding led by @lightspeedvp and Walden Catalyst Ventures, with participation from Prosperity7 Ventures, Factory, Osage University Partners (OUP), Lip-Bu Tan, Chris Re, and more. Virtue AI is the first unified platform for securing AI across…

“So easy” might be an understatement of @xichen_pan’s amazing work, but not needing to fine-tune MLLM at all when unifying understanding and generation is wild!

We find training unified multimodal understanding and generation models is so easy, you do not need to tune MLLMs at all. MLLM's knowledge/reasoning/in-context learning can be transferred from multimodal understanding (text output) to generation (pixel output) even it is FROZEN!

# RLHF is just barely RL Reinforcement Learning from Human Feedback (RLHF) is the third (and last) major stage of training an LLM, after pretraining and supervised finetuning (SFT). My rant on RLHF is that it is just barely RL, in a way that I think is not too widely…

Geoffrey Hinton says RLHF is a pile of crap. He likens it to a paint job for a rusty car you want to sell.

found an interesting failure mode of ChatGPT+imagen It absolutely cannot generate valid mazes. I speculate this is because it requires non linear "planning" that goes against the top down way it generates images. Cool!

Are we at a turning point in robotics? New interview with @chelseabfinn founder of @physical_int: we talk about pi’s approach to robotics foundation models, generalization, data generation, humanoids, and comparisons to self-driving. links 👇

We’re partnering with @glean to adapt Virtue AI’s pioneering research in AI security, including content moderation, guardrails, and red teaming to Glean’s enterprise customers. Find out more: bit.ly/3CZs6I6

Video models have been making huge strides recently and they benefit a lot from post-training techniques such as RLHF/RLAIF. Check out @HuaxiuYaoML threat for our latest work on a new preference benchmark and reward modeling for video generation!

🚀 We introduce MJ-Bench-Video, a comprehensive fine-grained video preference benchmark, and MJ-Video, a powerful MoE-based fine-grained and multi-dimensional video reward model! 🎥🌟 🔍 MJ-Bench-Video evaluates 5 key aspects: 1️⃣ Text-Video Alignment – ensures precise adherence…