Yu Xiang

@YuXiang_IRVL

Assistant Professor @UT_Dallas, Intelligent Robotics and Vision Lab @IRVLUTD, PhD @UMich, Previously @StanfordCS @uwcse @NVIDIA. All views my own.

I was preparing a video to introduce our lab @IRVLUTD for a meeting. Happy to share the video here! We are looking forward to collaborating with both academia and industry. Please feel free to reach out!

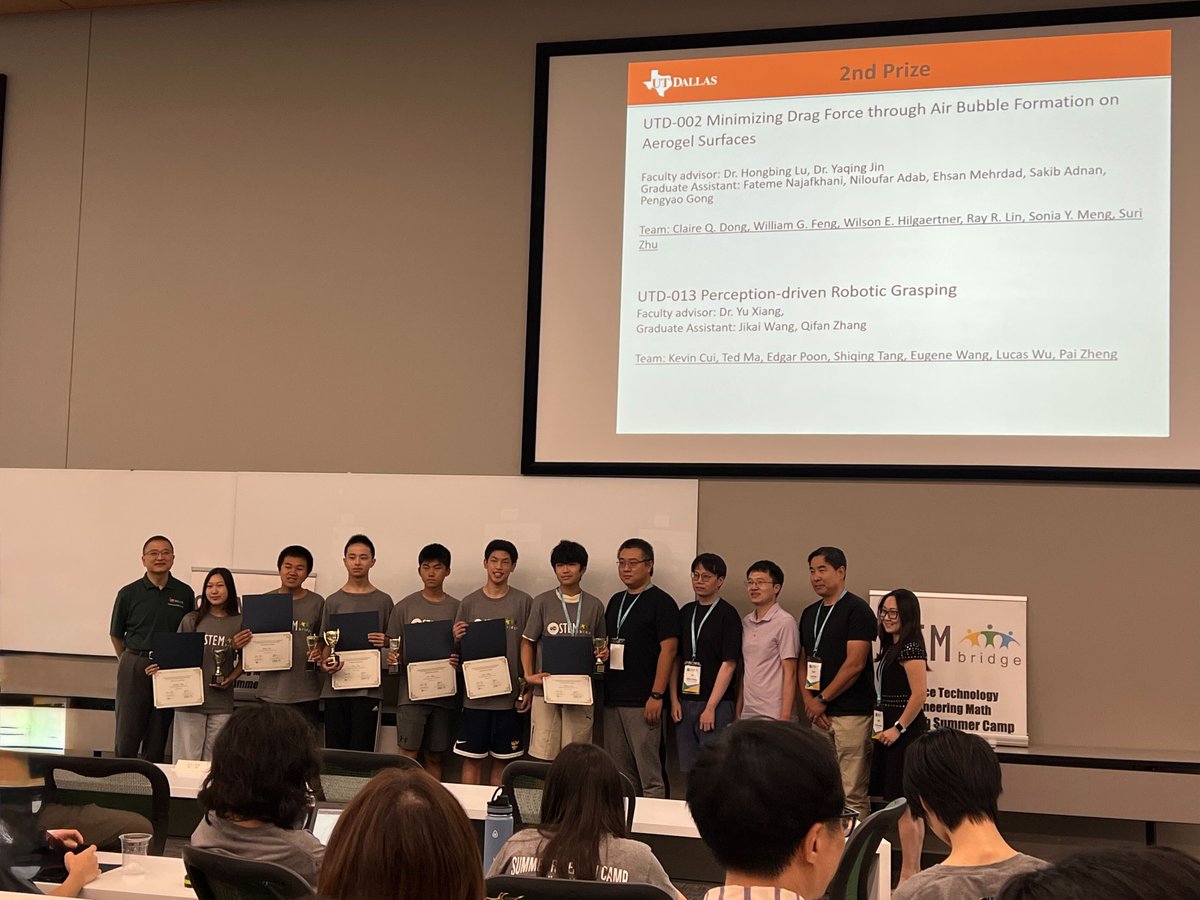

We @IRVLUTD hosted 7 high school students this summer through the STEM Bridge Program, where they explored perception-driven robotic grasping with @JwRobotics and Qifan Zhang. Their project just won 2nd place out of 15 teams—huge congrats to the team! 👏🤖

Working on NeurIPS reviews. My assignments cover topics in robotic grasping, manipulation, world models, semantic SLAM, object pose estimation, and sign language recognition. Now I understand why there are so many NeurIPS submissions.

If you're interested in working on learning from simulation, learning directly from perception, and training at scale for multi-fingered hands, please reach out to me and my colleagues. We are looking for talented researchers who have worked on or have experience with these problems.

The Dex team at NVIDIA is defining the bleeding edge of sim2real dexterity. Take a look below 🧵 There's a lot happening at NVIDIA in robotics, and we’re looking for good people! Reach out if you're interested. We have some big things brewing (and scaling :)

I’m looking for a PhD research intern to work on robot foundation models at @rai_inst (formerly the Boston Dynamics AI Institute). If you have experience with imitation learning, simulation, and real robots, please feel free to DM me or apply here: jobs.lever.co/rai/f2169567-0…

TRI's latest Large Behavior Model (LBM) paper landed on arXiv last night! Check out our project website: toyotaresearchinstitute.github.io/lbm1/ One of our main goals for this paper was to put out a very careful and thorough study on the topic to help people understand the state of the…

A general research tip: pay attention to the details. When something strange happens, don’t ignore it. Especially in robotics, odd or incorrect robot behavior often reveals deeper insights. Dig in and ask why.

Well, I believe that algorithms and hardware will get there: dexterous hands are the future. I saw Matthew at RSS but was too shy to ask for a photo.

Time for blog post number eight -- "Vacuum grippers versus robot hands." Here's the question: when humanoids can do everything a human can, will they take over the warehouse? No! Vacuum is awesome! Vacuum grippers are the wheels of manipulation! It is only a five-minute…

Don't be too hyped about generalist robots.

Tesla has paused Optimus production to revise its design; the redesign could take around two months, according to Chinese outlet LatePost Auto. Musk's mass-production goal for this year is basically out of reach. According to sources in China’s supply chain, Tesla is making…

Wrapped up a wonderful RSS! It was great to reunite with friends and finally meet so many people I only knew from X!

The secret behind this demo by @Suddhus #RSS2025: keypoints, object poses, defined grasps, and MPC.

Why build a humanoid robot? Because the world is designed for humans, including all the best Halloween costumes!

So many great workshops and talks tomorrow. It will be hard to decide which one to go to #RSS2025

“Why are so many vision/learning researchers moving to robotics?” Keynote from @trevordarrell #RSS2025

“As a PhD student, your job is not publishing a paper every quarter. Focus on a problem with deep understanding and solve it over years under the protection of your adviser” from @RussTedrake #RSS2025

Very happy that EgoDex received a Best Paper Award at the 1st EgoAct Workshop at #RSS2025! Huge thanks to the organizing committee @SnehalJauhri @GeorgiaChal @GalassoFab10 @danfei_xu @YuXiang_IRVL for putting together this forward-looking workshop. Also kudos to my colleagues @ryan_hoque…

Thrilled to have received a Best Paper Award at the EgoAct Workshop at RSS 2025! 🏆 We’ll also be giving a talk in Imitation Learning Session I tomorrow, 5:30–6:30pm. Come learn about DexWild! Work co-led by @mohansrirama, with @JasonJZLiu, @kenny__shaw, and @pathak2206.