Yaniv Nikankin

@YNikankin

PhD student @TechnionLive, looking inside language models

Ok, so I can finally talk about this! We spent the last year (actually a bit longer) training an LLM with recurrent depth at scale. The model has an internal latent space in which it can adaptively spend more compute to think longer. I think the tech report ...🐦⬛

Now accepted to #COLM2025! We formally define hidden knowledge in LLMs and show its existence in a controlled study. We even show that a model can know the answer yet fail to generate it in 1,000 attempts 😵 Looking forward to presenting and discussing our work in person.

🚨 It's often claimed that LLMs know more facts than they show in their outputs, but what does this actually mean, and how can we measure this “hidden knowledge”? In our new paper, we clearly define this concept and design controlled experiments to test it. 1/🧵

@mntssys and I are excited to announce circuit-tracer, a library that makes circuit-finding simple! Just type in a sentence, and get out a circuit showing (some of) the features your model uses to predict the next token. Try it on @neuronpedia: shorturl.at/SUX2A

Our interpretability team recently released research that traced the thoughts of a large language model. Now we’re open-sourcing the method. Researchers can generate “attribution graphs” like those in our study, and explore them interactively.

Tried steering with SAEs and found that not all features behave as expected? Check out our new preprint: "SAEs Are Good for Steering - If You Select the Right Features" 🧵

Having a standard benchmark is important as mechinterp matures. Check out our work and come chat with us at @iclr2025!

Lots of progress in mech interp (MI) lately! But how can we measure when new mech interp methods yield real improvements over prior work? We propose 😎 𝗠𝗜𝗕: a Mechanistic Interpretability Benchmark!

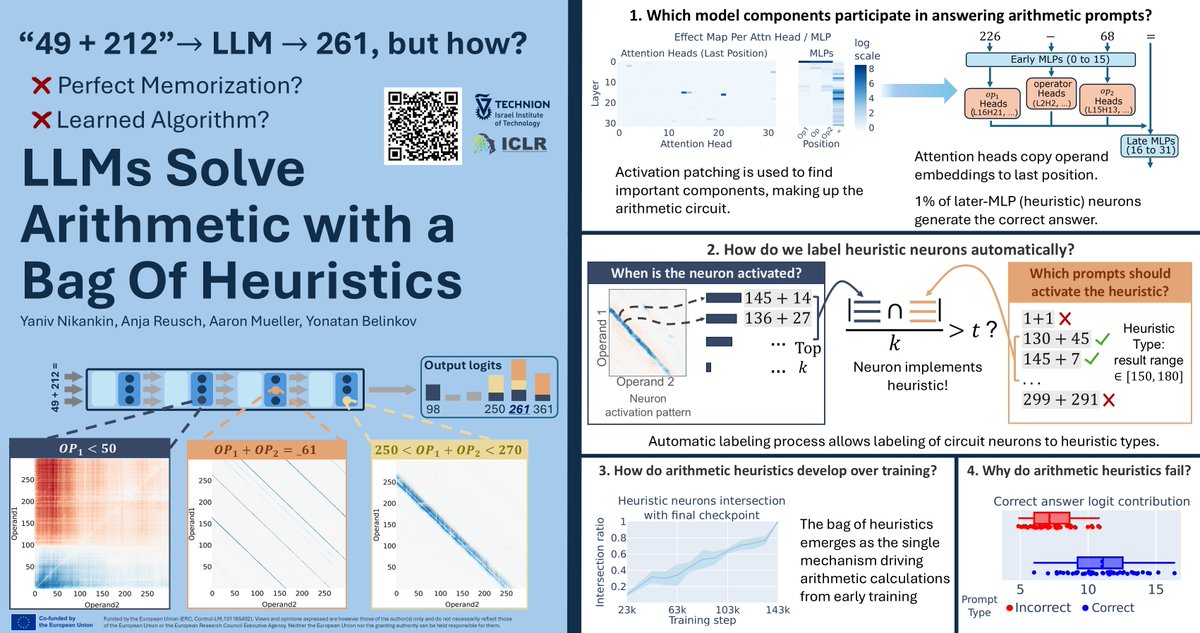

Interested in mechanistic interpretability? We'll be presenting our work on arithmetic mechanisms in LLMs later this week at #ICLR2025. DM me if you're there and want to chat about AI interpretability. 📆Friday, April 25th, 10-12:30 (Poster #243) 🔖iclr.cc/virtual/2025/p…

🎉 Our Actionable Interpretability workshop has been accepted to #ICML2025! 🎉 >> Follow @ActInterp @tal_haklay @anja_reu @mariusmosbach @sarahwiegreffe @iftenney @megamor2 Paper submission deadline: May 9th!

Meet Ai2 Paper Finder, an LLM-powered literature search system. Searching for relevant work is a multi-step process that requires iteration. Paper Finder mimics this workflow — and helps researchers find more papers than ever 🔍

🚀 Excited to share our latest research: “SILO: Solving Inverse Problems with Latent Operators”! A surprisingly simple approach to image restoration with latent diffusion models that achieves SOTA results while being 2.5x–10x faster than prior methods. 🧵[1/7]

Check out the First Workshop on Mech Interp for Vision at @CVPR! Paper submissions: sites.google.com/view/miv-cvpr2…

🔍 Curious about what's really happening inside vision models? Join us at the First Workshop on Mechanistic Interpretability for Vision (MIV) at @CVPR! 📢 Website: sites.google.com/view/miv-cvpr2… Meet our amazing invited speakers! #CVPR2025 #MIV25 #MechInterp #ComputerVision

I'm recruiting PhD students for our new lab, coming to Boston University in Fall 2025! Our lab aims to understand, improve, and precisely control how language is learned and used in natural language systems (such as language models). Details below!