EduardoRFS.tei

@TheEduardoRFS

26yo anti floating point developer. You can almost always find me at http://twitch.tv/eduardorfs, it's the boring side of tech.

Over the years, my idea of a perfect job has shifted from developing programming languages to testing them. No one really knows how to test programming languages properly; the whole field is still in its infancy. Academia is more interested in theory and industry is more…

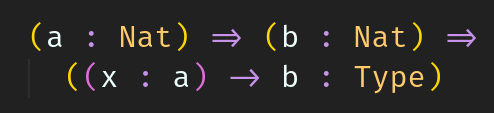

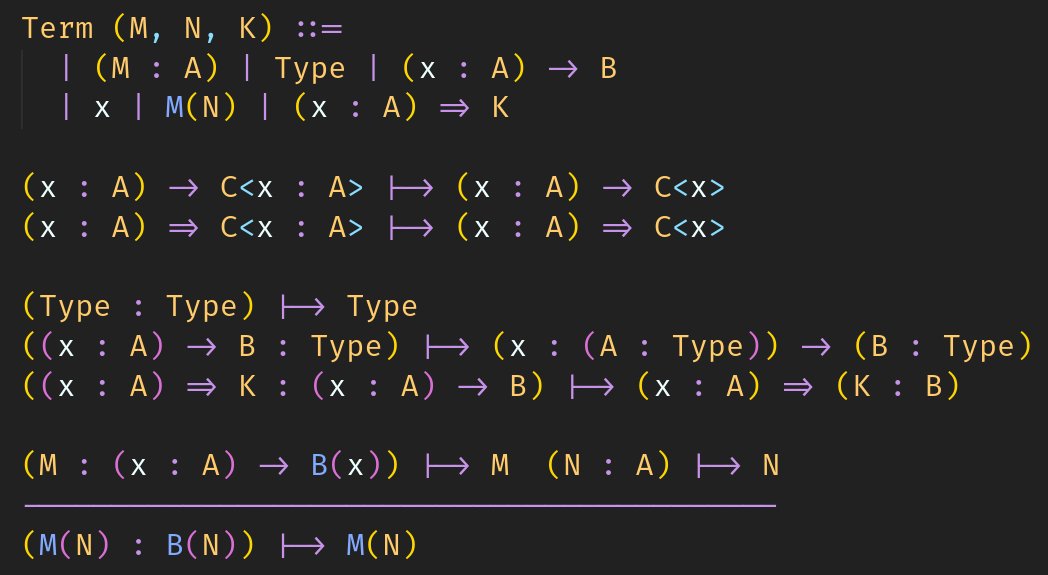

Maybe a way of rejecting the bad inputs is to properly define the normal forms. The following has no reductions available, but if you define that the normal form is typed and require the context to be well-formed, then it's a stuck term.

The rule is simple: something is only eliminated when it's in head normal form, and to be in head normal form you sometimes need to push types down.

An idea that seems to work is that a program is well typed when there is no rewriting path that would produce an error. The issue with that is that running the typer means running a rewriting path that proves it. Such a path exists, but it reduces freedom quite a bit.
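A minimal sketch of the "no rewriting path produces an error" reading, assuming a toy language that is not from the original post (numbers, booleans, `add`, `if`) and a brute-force search over every reachable term. The names `steps`, `has_error`, and `well_typed` are hypothetical, chosen only for illustration:

```python
# Toy terms (an assumption for illustration):
#   ("num", n) | ("bool", b) | ("add", a, b) | ("if", cond, then, else)
ERROR = ("error",)

def is_value(t):
    return t[0] in ("num", "bool")

def steps(t):
    """Return every term reachable from t in exactly one rewrite step.

    Rewriting is allowed anywhere in the term, including inside the
    branches of an `if`, so there can be many paths.
    """
    out = []
    if t[0] == "add":
        _, a, b = t
        if is_value(a) and is_value(b):
            if a[0] == "num" and b[0] == "num":
                out.append(("num", a[1] + b[1]))
            else:
                out.append(ERROR)  # adding a boolean is an error
        out += [("add", a2, b) for a2 in steps(a)]
        out += [("add", a, b2) for b2 in steps(b)]
    elif t[0] == "if":
        _, c, th, el = t
        if c[0] == "bool":
            out.append(th if c[1] else el)
        elif c[0] == "num":
            out.append(ERROR)  # branching on a number is an error
        out += [("if", c2, th, el) for c2 in steps(c)]
        out += [("if", c, th2, el) for th2 in steps(th)]
        out += [("if", c, th, el2) for el2 in steps(el)]
    return out

def has_error(t):
    """True if ERROR occurs anywhere inside t."""
    return t == ERROR or any(
        has_error(s) for s in t[1:] if isinstance(s, tuple)
    )

def well_typed(term):
    """Explore all rewriting paths; reject if any of them reaches an error."""
    seen, todo = {term}, [term]
    while todo:
        t = todo.pop()
        if has_error(t):
            return False
        for n in steps(t):
            if n not in seen:
                seen.add(n)
                todo.append(n)
    return True

ok = ("add", ("num", 1), ("if", ("bool", True), ("num", 2), ("num", 3)))
bad = ("add", ("num", 1), ("bool", True))
dead_branch = ("if", ("bool", True), ("num", 1), bad)
```

Under this definition `ok` is accepted and `bad` is rejected. `dead_branch` is rejected too, because rewriting inside the branch that is never taken is still one of the available paths. The search terminates here only because every step in this toy language shrinks the term.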

At Pangram, we generally treat the problem of AI detection like an infinite data regime: we can always generate more synthetic data for our model. This has been true up until o3, which is not only expensive on a per-token basis, but we also have to pay for a bunch of reasoning…

How well does Pangram detect outputs from reasoning models? In our new model release, we greatly improve recall on OpenAI's o3 and o3-pro models. A quick thread on how we did it. 🧵

Just having check doesn't seem to fully work, which is why I guess infer is actually needed. But you may not need to have it in the system explicitly.
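A minimal sketch of one reason check alone falls short, assuming a standard bidirectional setup for a simply typed lambda calculus. The term encoding and the function names `check` and `infer` are illustrative assumptions, not the system the post is describing:

```python
# Types (assumed encoding): "int" | ("fn", arg_type, result_type)
# Terms (assumed encoding):
#   ("lit", n) | ("var", name) | ("lam", name, body)
#   ("app", fn, arg) | ("ann", term, type)

def infer(ctx, t):
    """Synthesize a type for t, or raise TypeError."""
    tag = t[0]
    if tag == "lit":
        return "int"
    if tag == "var":
        if t[1] not in ctx:
            raise TypeError(f"unbound variable {t[1]}")
        return ctx[t[1]]
    if tag == "ann":
        check(ctx, t[1], t[2])
        return t[2]
    if tag == "app":
        # Checking `f x` against B says nothing about the argument type,
        # so the function's type has to be inferred first.
        fn_ty = infer(ctx, t[1])
        if not (isinstance(fn_ty, tuple) and fn_ty[0] == "fn"):
            raise TypeError("applying a non-function")
        check(ctx, t[2], fn_ty[1])
        return fn_ty[2]
    raise TypeError(f"cannot infer a type for {tag}")

def check(ctx, t, ty):
    """Verify that t has type ty, or raise TypeError."""
    if t[0] == "lam":
        if not (isinstance(ty, tuple) and ty[0] == "fn"):
            raise TypeError("lambda checked against a non-function type")
        # The expected type supplies the binder's type.
        check({**ctx, t[1]: ty[1]}, t[2], ty[2])
        return
    if infer(ctx, t) != ty:
        raise TypeError("type mismatch")

identity = ("lam", "x", ("var", "x"))
int_to_int = ("fn", "int", "int")
applied = ("app", ("ann", identity, int_to_int), ("lit", 1))
```

Here `check({}, identity, int_to_int)` succeeds, `infer({}, applied)` returns `"int"`, and `infer({}, identity)` raises because an unannotated lambda has nothing to synthesize from. The application case is where a check-only system gets stuck: the expected result type does not determine the argument type.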

the Asianometry channel

which, for straight guys, is the equivalent of sitting on the couch watching pop diva music videos