Zengzhi Wang

@SinclairWang1

PhDing @sjtu1896 #NLProc Working on Data Engineering for LLMs: MathPile (2023), 🫐 ProX (2024), 💎 MegaMath (2025),🐙 OctoThinker(2025)

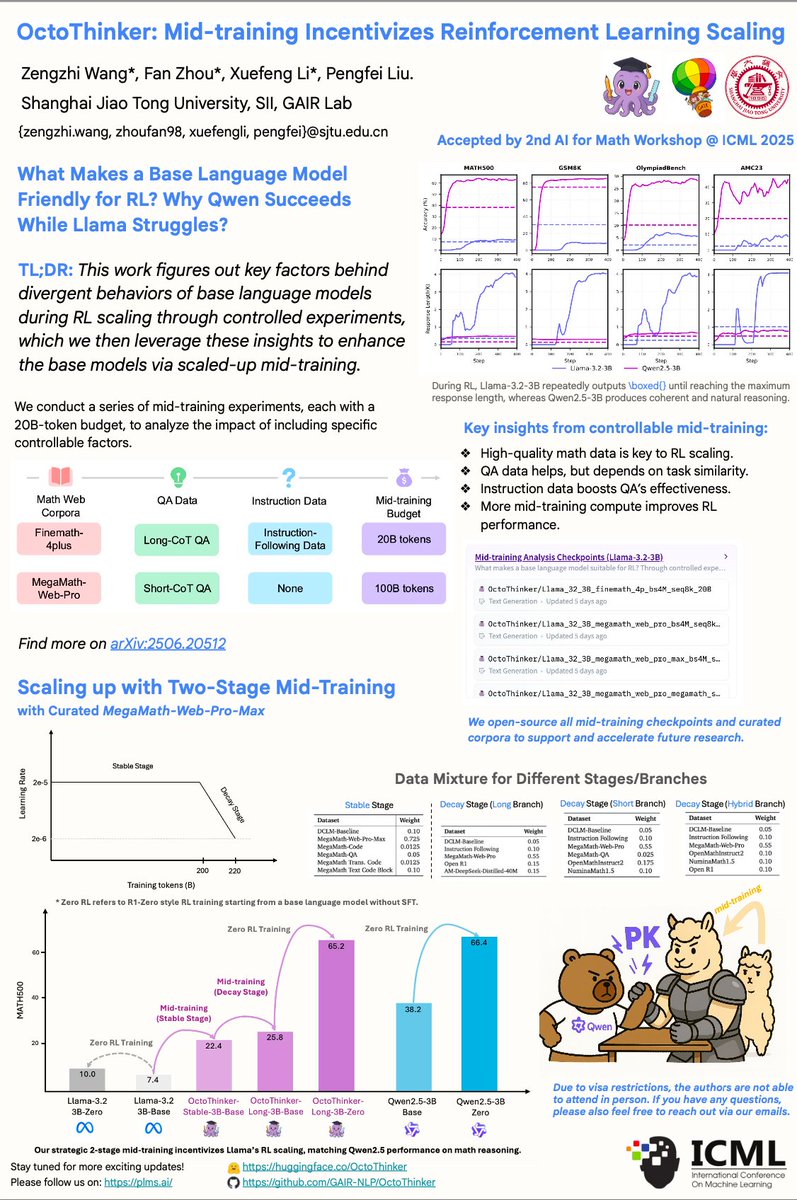

What Makes a Base Language Model Suitable for RL? Rumors in the community say RL (i.e., RLVR) on LLMs is full of “mysteries”: (1) Is the magic only happening on Qwen + Math? (2) Does the "aha moment" only spark during math reasoning? (3) Is evaluation hiding some tricky traps?…

MegaMath has been accepted to @COLM_conf 2025🥳 Hoping you find our data useful!

🥁🥁 Happy to share our latest efforts on math pre-training data, the MegaMath dataset! This is a 9-month project starting from 2024’s summer, and we finally deliver: the largest math pre-training data to date containing 💥370B 💥tokens of web, code, and synthetic data!

Can not agree more!

I don't think we need an American DeepSeek Project, we need an Open-Data DeepSeek. And no we didn't get one yet, despite what you might think, so let me explain. The biggest contributor to the gap between closed-source and open-source AI is, in my opinion, data accessibility and…

When building MegaScience, we learned the hard way: 📈 Strong datasets need strong proxy models. Our data was too spicy 🌶️ for small models like Qwen2.5-1.5B & 3B—they just flopped. But once we tried Qwen3-14B and 30B… boom 💥, everything clicked. Kinda terrifying to think: if…

🚨 New release: MegaScience The largest & highest-quality post-training dataset for scientific reasoning is now open-sourced (1.25M QA pairs)! 📈 Trained models outperform official Instruct baselines 🔬 Covers 7+ disciplines with university-level textbook-grade QA 📄 Paper:…

MegaScience Pushing the Frontiers of Post-Training Datasets for Science Reasoning

🚨 New release: MegaScience The largest & highest-quality post-training dataset for scientific reasoning is now open-sourced (1.25M QA pairs)! 📈 Trained models outperform official Instruct baselines 🔬 Covers 7+ disciplines with university-level textbook-grade QA 📄 Paper:…

Excited to share that our two papers have been accepted to #ICML2025! @icmlconf However, I can't be there in person due to visa issues. What a pity.🥲 Feel free to check out our poster, neither online nor offline in the Vancouver Convention Center. Programming Every Example:…

Excited to share that our two papers have been accepted to #ICML2025! @icmlconf However, I can't be there in person due to visa issues. What a pity.🥲 Feel free to check out our poster, neither online nor offline in the Vancouver Convention Center. Programming Every Example:…

Excited to share that our two papers have been accepted to #ICML2025! @icmlconf However, I can't be there in person due to visa issues. What a pity.🥲 Feel free to check out our poster, neither online nor offline in the Vancouver Convention Center. Programming Every Example:…

🥳Happy to share that our paper "Towards Fully Exploiting LLM Internal States to Enhance Knowledge Boundary Perception" has been accepted by #ACL2025! We explore leveraging LLMs' internal states to improve their knowledge boundary perception from efficiency and risk perspectives.

🚀 Hello, Kimi K2! Open-Source Agentic Model! 🔹 1T total / 32B active MoE model 🔹 SOTA on SWE Bench Verified, Tau2 & AceBench among open models 🔹Strong in coding and agentic tasks 🐤 Multimodal & thought-mode not supported for now With Kimi K2, advanced agentic intelligence…

Capable, Agentic, and Open-sourced. Kimi K2 excels in knowledge, math, and coding, and is optimized for complex tool use. See how it can analyze data, generate interactive webpages, and more. Explore what's possible and start building today!

🚀 Hello, Kimi K2! Open-Source Agentic Model! 🔹 1T total / 32B active MoE model 🔹 SOTA on SWE Bench Verified, Tau2 & AceBench among open models 🔹Strong in coding and agentic tasks 🐤 Multimodal & thought-mode not supported for now With Kimi K2, advanced agentic intelligence…

blog - abinesh-mathivanan.vercel.app/en/posts/short… read 'octothinker' last week and it's so cool. great work by @SinclairWang1 @FaZhou_998 @stefan_fee

processing all of CommonCrawl is about $20-50k [0], plus maybe 10-50k H100 if you wanna do GPU classification [1]. You can extract 1T tokens from PDFs for around $10k [2]. Major expenses are synth data, and verify which one of your approaches work [3]. -----------------------…

Training end-to-end multi-turn tool-use agents has proven incredibly challenging 😤 Just as noted in the recent Kevin blog: > Across several runs, we observe that around steps 35–40, the model begins generating repetitive or nonsensical responses. But here's our solution:…

🛠️🤖 Introducing SimpleTIR: An end-to-end solution for stable multi-turn tool use RL 📈 Multi-turn RL training suffers from catastrophic instability, but we find a simple fix ✨ The secret? Strategic trajectory filtering keeps training rock-solid! 🎯 Stable gains straight from…

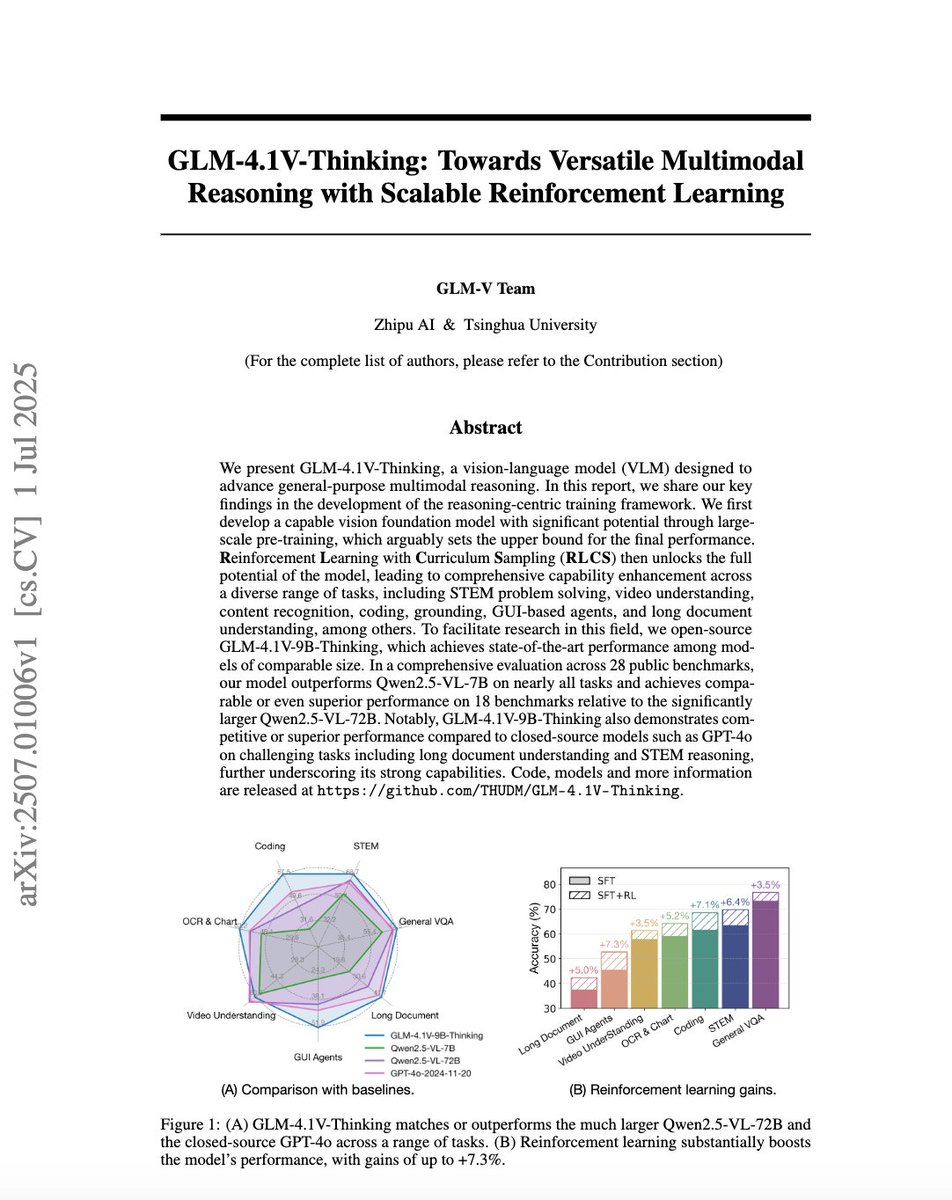

1. Solid data engineering on multimodal data. 2. Insightful details on the RL part, including but not limited to the design of answer extraction and reward system, the utilization of Curriculum Sampling, and details on improving effectiveness and stability.

Why do gradients increase near the end of training? Read the paper to find out! We also propose a simple fix to AdamW that keeps gradient norms better behaved throughout training. arxiv.org/abs/2506.02285

Just finished reading it quickly. It was truly impressive.

Amazing work (once again). Better midtraining makes models better for RL. Once again the power of good data strikes again. I think we are still so early about making better midtraining data to bootstrap RL. Excited to see what comes next!

What Makes a Base Language Model Suitable for RL? Rumors in the community say RL (i.e., RLVR) on LLMs is full of “mysteries”: (1) Is the magic only happening on Qwen + Math? (2) Does the "aha moment" only spark during math reasoning? (3) Is evaluation hiding some tricky traps?…

What foundation models do we REALLY need for the RL era? And what pre-training data? Excited to share our work: OctoThinker: Mid-training Incentivizes Reinforcement Learning Scaling arxiv.org/pdf/2506.20512 ✨ Key breakthroughs: - First RL-focused mid-training approach - Llama…

What Makes a Base Language Model Suitable for RL? Rumors in the community say RL (i.e., RLVR) on LLMs is full of “mysteries”: (1) Is the magic only happening on Qwen + Math? (2) Does the "aha moment" only spark during math reasoning? (3) Is evaluation hiding some tricky traps?…