Shurui Gui

@ShuruiGui

CS Ph.D. student at @TAMU. My research interests lie in graph learning and out-of-distribution generalization. Supervised by Dr. @ShuiwangJi

I am glad you asked… VL pipelines before 2020 relied heavily on region proposals from object detectors (not exactly 3D, but at least it's some sort of perceptual grouping, reminiscent of good old vision). In implementation this meant you would first run an object detector on your…

I'm not following any algorithm. I'm working on new architectures (JEPA world models), inference procedures (planning and reasoning by optimization in embedding space), and learning paradigms (self-supervised learning). When a system with these components starts working, we'll…

ah pascale and rao in a panel. after the acl teaser, this should be an interesting event.

Video generation models do not understand basic physics. Let alone the human body.

CW: Body Horror? This AI video attempt to show gymnastics is one of the best examples I have seen that AI doesn’t actually understand the human body and its motion but is just regurgitating available data. (Which appears to be minimal for gymnastics)

It is clear when we try any video generation model: they still focus much more on texture than on motion and physics.

Animals generate muscle actions, not pixels.

If you are a student or academic researcher and want to make progress towards human-level AI: >>>DO NOT WORK ON LLMs<<< LLMs are an off ramp. Thousands of engineers are working on LLMs with enormous computing resources. The only way you could possibly contribute is by analyzing…

Great talk by @ylecun yesterday, at the scientific symposium for the opening of the @ELLISInst_Tue! I took the liberty of summarizing one of his main take-home messages...

Introducing [#ICML2024] *Minimal Frame Averaging for More Symmetries and Efficiency*, a model-agnostic framework for efficient equivariance across a wide range of groups. Joint work w/ @JacobHelwig @ShuruiGui @ShuiwangJi P: arxiv.org/abs/2406.07598 C: github.com/divelab/MFA

Constructive mindset to deal with 👎paper reviews💡
• Accept that reviewers may have little time/experience.
• They didn't get it? You didn't explain it well
• Missed some details? Highlight them better
• Didn't find the paper exciting? Here's your challenge for the next version!

Submitting to #NeurIPS2024, it asks for a license. Of the choices, I recommend CC BY 4.0. Roughly speaking, this allows people to share and adapt your work, but they have to cite you if they do ("BY").

Jacob @JacobHelwig and I will present our paper "SineNet: Learning Temporal Dynamics in Time-Dependent Partial Differential Equations" tomorrow (May 13) from 09:00 to 10:15 EDT in the AI4ScienceTalk series @AI4scienceTalks. Come and join us! Details: ai4sciencetalks.github.io/projects/sinen…

Thrilled to have our papers 1. Graph Structure Extrapolation for Out-of-Distribution Generalization (openreview.net/forum?id=Xgrey…) 2. Position Paper: TrustLLM: Trustworthiness in Large Language Models (arxiv.org/abs/2401.05561) (github.com/HowieHwong/Tru…) accepted at #ICML2024!

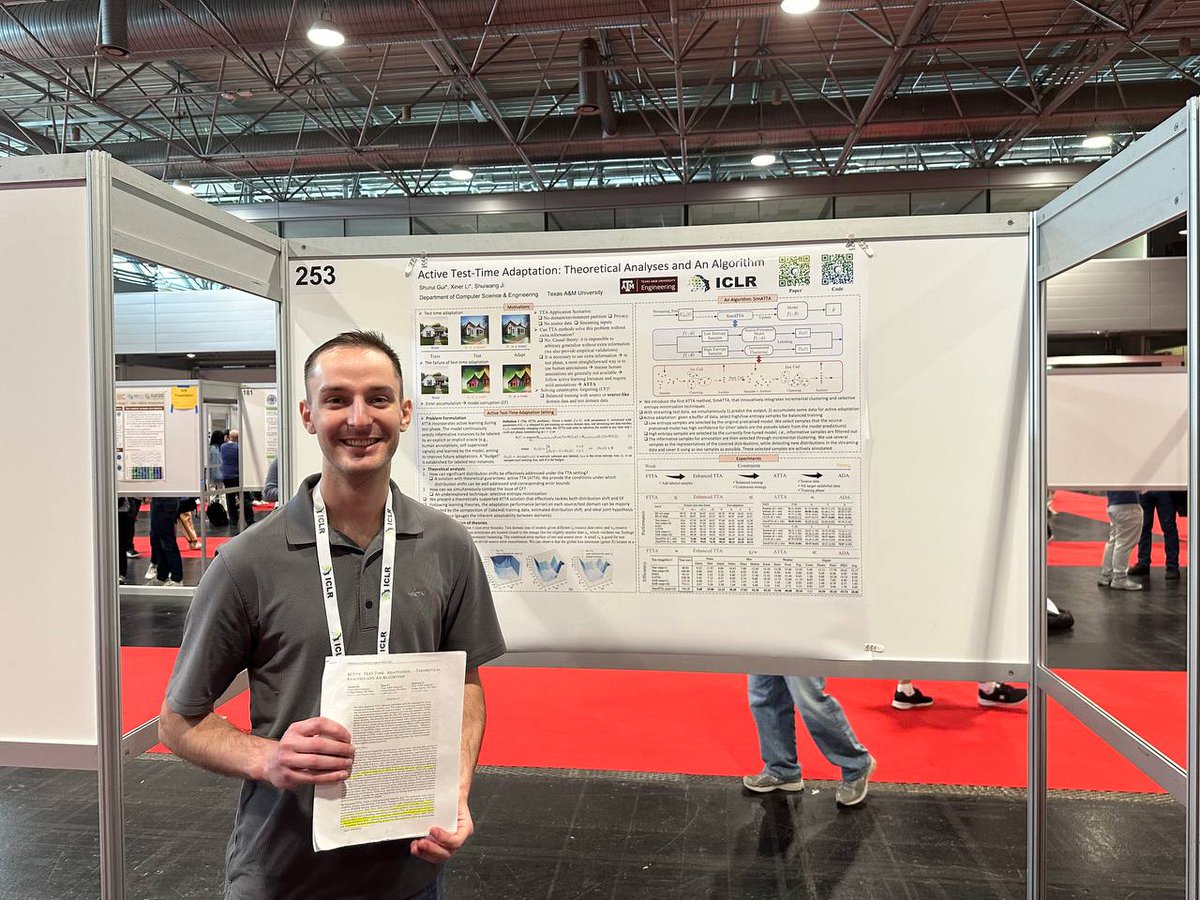

A successful poster session of our work: Active Test-Time Adaptation at #ICLR2024! Thanks @JacobHelwig for presenting on behalf of us!🥰

college admission in a few years...

NeurIPS 2024 will have a track for papers from high schoolers.

Very proud of this work by @zhenhailongW, based on collaboration with @jiajunwu_cs’s amazing group at Stanford. We fill in the gap between low-level visual perception and high-level language reasoning by designing an intermediate symbolic layer of vector graphics knowledge.

Large multimodal models often lack precise low-level perception needed for high-level reasoning, even with simple vector graphics. We bridge this gap by proposing an intermediate symbolic representation that leverages LLMs for text-based reasoning. mikewangwzhl.github.io/VDLM 🧵1/4