Sheheryar Zaidi

@ShehZaidi

Research Scientist at @GoogleDeepMind. Prev. PhD in Machine Learning at @OxfordStats. Google PhD Fellow in ML & Aker Scholar. Math @UniofOxford @Cambridge_Uni.

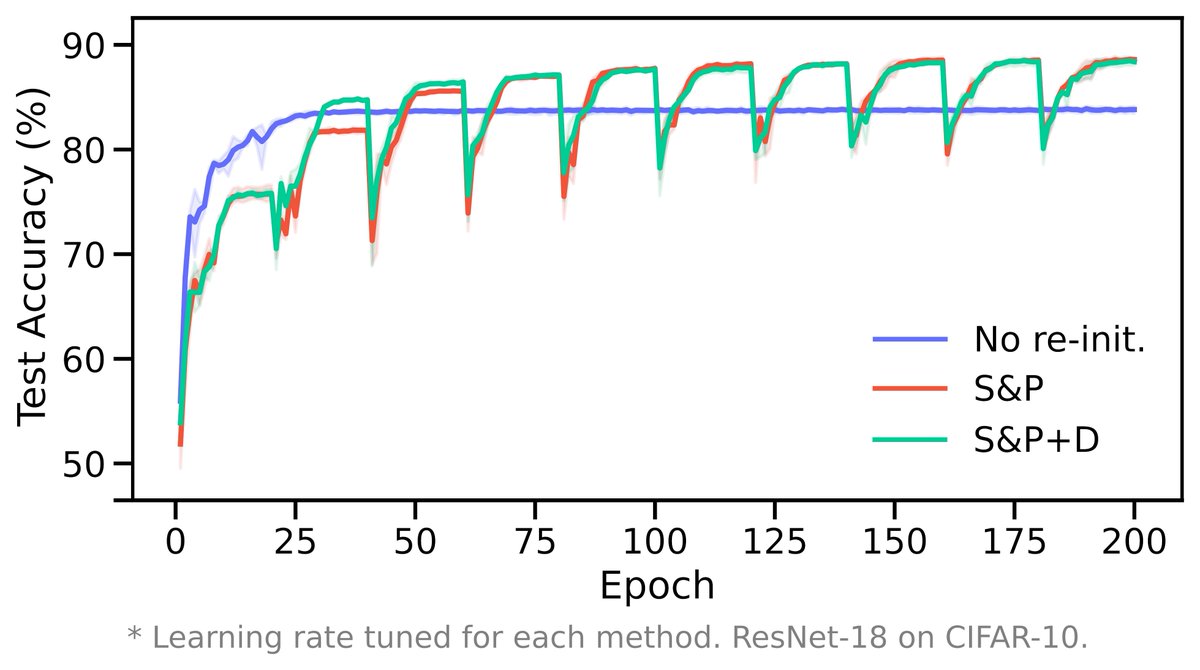

Mysterious observation: re-initializing neural nets during training can improve generalization, despite *no* change to the model, data or compute. We asked: when do re-initialization methods work? Paper📄: arxiv.org/abs/2206.10011 Poster🖼️: bit.ly/3mdjJAF (1/6)

Nice - we could have prompted our way to winning IMO gold on an already available model!

🚨 Olympiad math + AI: We ran Google’s Gemini 2.5 Pro on the fresh IMO 2025 problems. With careful prompting and pipeline design, it solved 5 out of 6 — remarkable for tasks demanding deep insight and creativity. The model could win gold! 🥇 #AI #Math #LLMs #IMO2025

Exciting and unique opportunity in our team at @GoogleDeepMind: we're hiring a laboratory scientist to build out a materials-synthesis lab 🧪 This lab will be a key part of our roadmap for training AI models capable of real-world materials discovery 🔁 job-boards.greenhouse.io/deepmind/jobs/…

Another one that came sooner than I expected: gold medal at IMO in natural language with a generalist model! 🥇

An advanced version of Gemini with Deep Think has officially achieved gold medal-level performance at the International Mathematical Olympiad. 🥇 It solved 5️⃣ out of 6️⃣ exceptionally difficult problems, involving algebra, combinatorics, geometry and number theory. Here’s how 🧵

Sharing this unique materials science role with @GoogleDeepMind. They are looking specifically for someone to build a materials lab with them from the ground up. Time is short: the deadline to apply is Jul 22! "We are seeking an exceptional and highly motivated expert in…

How might AI supercharge world-class expertise in medicine for everyone, everywhere? We’re privileged to pursue this mission @GoogleDeepMind with incredible teammates and are growing our team. We’re hiring stellar Software Engineers, Research Engineers, and Research Scientists…

🧠 Interested in pushing the scientific frontier with LLM post-training and RL? Our team is hiring a Research Engineer at Google DeepMind to accelerate materials science! 💡 Join us: job-boards.greenhouse.io/deepmind/jobs/…

🚨 Our team at GDM is hiring a research engineer to work on topics around RL, post-training + materials science! Role is based in Mountain View. DMs open if you have questions.

We introduce 🌸✨ AlphaEvolve ✨🌸, an evolutionary coding agent using LLMs coupled with automatic evaluators, to tackle open scientific problems 🧑‍🔬 and optimize critical pieces of compute infra ⚙️ deepmind.google/discover/blog/…

How much do algorithmic and experimental choices drive the accuracy of reconstructing tumour evolution? In this @CR_AACR commentary, @ZaccaSimo and I discuss a recent @NatureBiotech benchmarking paper exploring this question:

#TumorEvolution reconstruction is heavily influenced by algorithmic & experimental choices. This "In the Spotlight" commentary by @Rija_Zaidi_ & @ZaccaSimo highlights a recent @NatureBiotech paper by @TheBoutrosLab, @VanLooLab, @kellrott, @MaxGalder, et al. bit.ly/3VAMRQs

Our paper is out! We introduce the SPRINTER algorithm to infer clone proliferation rates from scDNAseq data 🧬 Great to work with the excellent @ojlucas, @Ward__Sophie, @abi_bunkum, @AFrankell, @MarksHilly, @NnennayaKanu, @CharlesSwanton, @ZaccaSimo & others!

🚨 So proud to present the first study from our @CCG_UCL group, together with @CharlesSwanton and @NnennayaKanu, led by @ojlucas with key collaboration from @Ward__Sophie and fundamental support from @Rija_Zaidi_, @abi_bunkum & many others. Out in @NatureGenet 👇 doi.org/10.1038/s41588… 🧵👇

Congratulations to Demis, John and the whole AlphaFold team! Two Nobels for deep learning in one year – wild times! 🎉

BREAKING NEWS The Royal Swedish Academy of Sciences has decided to award the 2024 #NobelPrize in Chemistry with one half to David Baker “for computational protein design” and the other half jointly to Demis Hassabis and John M. Jumper “for protein structure prediction.”

I’m beyond thrilled to share that our work on using deep learning to compute excited states of molecules is out today in @ScienceMagazine! This is the first time that deep learning has accurately solved some of the hardest problems in quantum physics. science.org/doi/abs/10.112…

Incredible achievement. Only 1 point away from a gold medal, and it solved P6! 🎉🥈 This felt further in the future than it turned out to be. Excited about the implications of such systems for open problems in math.

We’re presenting the first AI to solve International Mathematical Olympiad problems at a silver medalist level.🥈 It combines AlphaProof, a new breakthrough model for formal reasoning, and AlphaGeometry 2, an improved version of our previous system. 🧵 dpmd.ai/imo-silver

Nuts rate of progress in the field!

Gemini and I also got a chance to watch the @OpenAI live announcement of GPT-4o, using Project Astra! Congrats to the OpenAI team, super impressive work!

Exploring hyaluronic acid gels in your research? Check out our latest publication for a detailed, simple, reproducible, and scalable manufacturing process using BDDE or PEGDE crosslinking #Hydrogel #HyaluronicAcid #RegenerativeMedicine

Gemini 1.5 Pro has entered the (LMSys) Arena! Some highlights:
- The only "mid"-tier model at the highest level, alongside "top"-tier models from OpenAI and Anthropic ♊️
- The model excels at multimodal and long-context tasks (not measured here) 🐍
- This model is also state-of-the-art…

More exciting news today: Gemini 1.5 Pro results are out! Gemini 1.5 Pro API-0409-preview now ranks #2 on the leaderboard, surpassing #3 GPT4-0125-preview and coming close to #1! Gemini shows even stronger performance on longer prompts, where it ranks joint #1 with the latest…

A very natural idea (in retrospect!) given that MLPs are most often illustrated as graphs – nice work.

🔍How can we design neural networks that take neural network parameters as input? 🧪Our #ICLR2024 oral on "Graph Neural Networks for Learning Equivariant Representations of Neural Networks" answers this question! 📜: arxiv.org/abs/2403.12143 💻: github.com/mkofinas/neura… 🧵 [1/9]

We build neural codecs from a *single* image or video, achieving compression performance close to SOTA models trained on large datasets, while requiring ~100x fewer FLOPs for decoding ⚡ #CVPR2024 c3-neural-compression.github.io

PhD finally done! Thank you @yeewhye for guiding me through such a wonderful journey, and huge thanks to @jmhernandez233 @OxfordTVG for an excellent viva discussion. 🎓🎉

Another one flew the nest! Though @ShehZaidi has been flying high for a while now :)