Sharon Y. Li

@SharonYixuanLi

Assistant Professor @WisconsinCS. Making AI reliable for the open world.

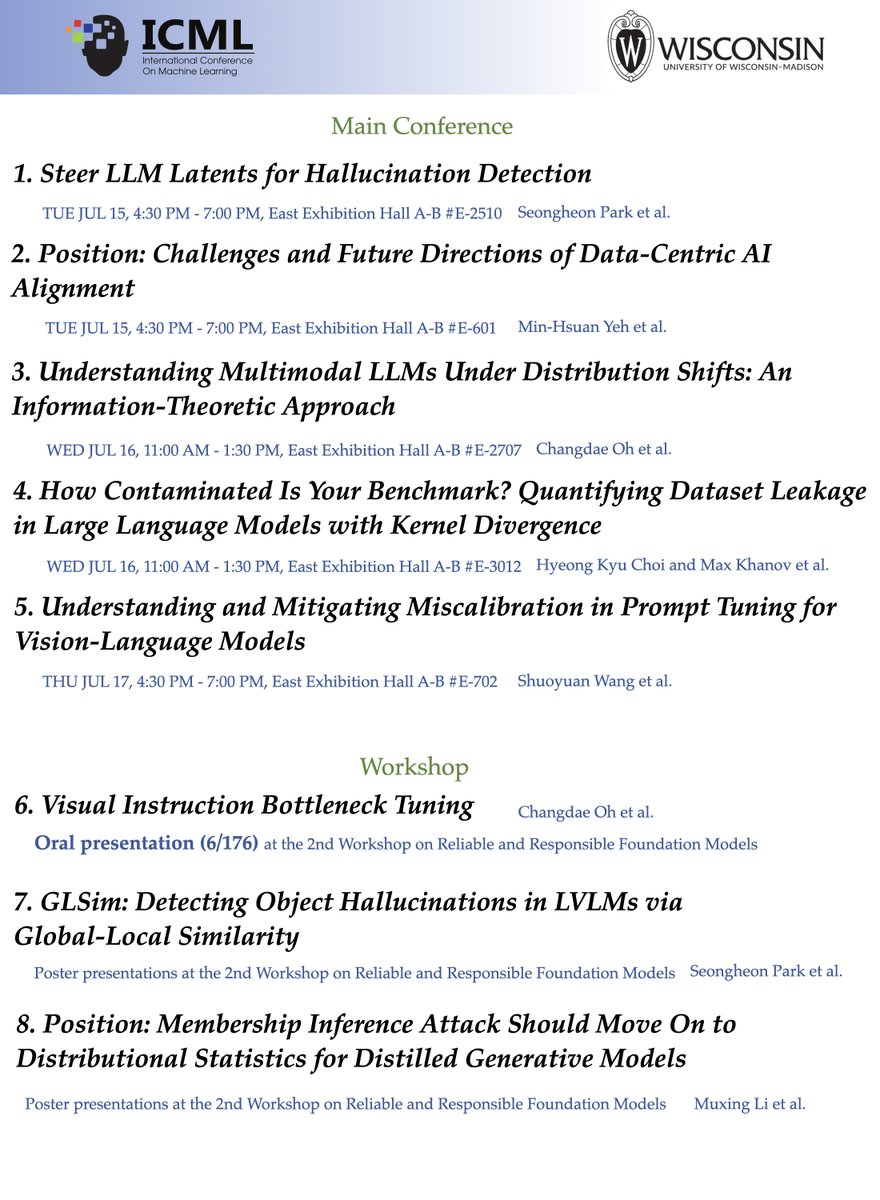

How could we characterize the performance gap of MLLMs under distribution shifts? Please drop by our poster at #ICML2025! 🕒 Jul 16 (Tomorrow), 11:00-13:30 📍 #2707, East Exhibition Hall A-B. Happy to introduce a new information-theoretic quantification of MLLMs' robustness 😋

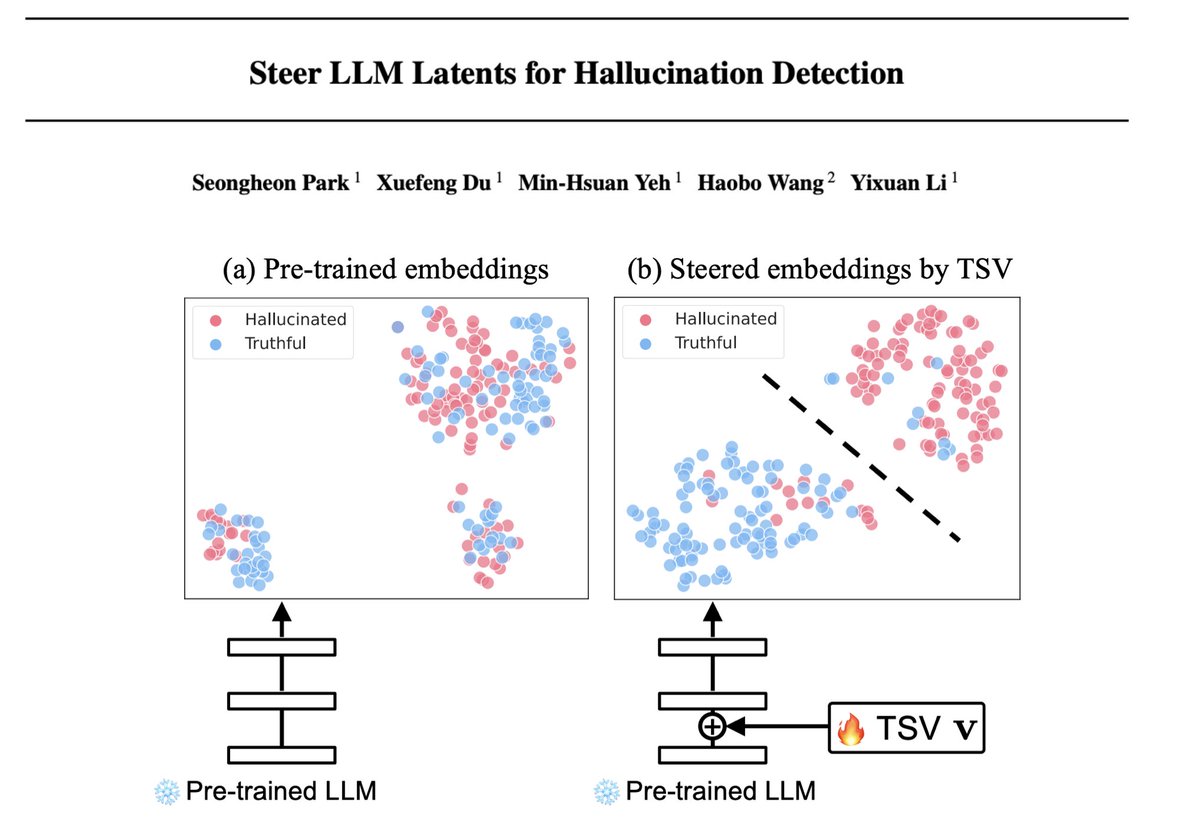

🎉 Excited to share that our ICML 2025 paper on LLM hallucination detection has been accepted! Poster 📍: East Exhibition Hall A-B #E-2510, Tue, July 15 | 4:30–7:00 p.m. PDT. Would love to chat and connect, so come say hi! 😊

🚨 If you care about reliable, low-cost LLM hallucination detection, our #ICML2025 paper offers a powerful and data-efficient solution. 💡We introduce TSV: Truthfulness Separator Vector — a single vector injected into a frozen LLM that reshapes its hidden space to better…

Excited to be in Vancouver for ICML2025! I'll be presenting "Position: Challenges and Future Directions of Data-Centric AI Alignment" in East Exhibition Hall A-B #E-601 on Tuesday, 7/15, from 4:30 pm. Please come if you are interested in AI alignment! #ICML2025 #aialignment

📢 Looking for new research ideas in AI alignment? Check out our new #ICML2025 position paper: "Challenges and Future Directions of Data-Centric AI Alignment". TL;DR: Aligning powerful AI systems isn't just about better algorithms — it's also about better feedback data, whether…

🚨 #ICML2025 is just around the corner! I will be presenting my work on Kernel Divergence Score! 📍 East Exhibition Hall A-B #E-3012 🕚 Wed 16, 11:00 — 13:30 📄 openreview.net/pdf?id=wVDR2qm… Huge thanks to my fantastic collaborators — @khanovmax, @OwenWei8, and @SharonYixuanLi

Many existing works on advancing multimodal LLMs try to inject MORE information into the model. Would it be the sole/right way to improve the generalization and robustness of MLLMs?

✨ My lab will be presenting a series of papers on LLM reliability and safety at #ICML2025—covering topics like hallucination detection, distribution shifts, alignment, and dataset contamination. If you’re attending ICML, please check them out! My students @HyeonggyuC @shawnim00…

🎉 Great news: Our Machine Learning and Physical Sciences workshop at @NeurIPSConf will be back again this year! 🎉 Keep an eye out for updates on deadlines etc.; we will be updating the website soon: ml4physicalsciences.github.io #ML4PS2025 @ML4PhyS

Excited to receive the new faculty award from @Google for ML and systems pioneers in academia. "It is a challenging time to be conducting critical academic research given uncertainties in the funding environment. While Google’s funding is only a small part of the overall need,…

The @NSF recently named 27 @UWMadison students in its annual Graduate Research Fellowship Program. Two CS students—@shawnim00 (advised by @SharonYixuanLi) and Seth Ockerman (advised by Shivaram Venkataraman)—were included. Meet the recipients: grad.wisc.edu/2025/04/08/15-…

🚀 Visionary-R1 has hit 1800+ downloads in just one month! 🤗 Hugging Face: huggingface.co/maifoundations… 📄 Paper: arxiv.org/pdf/2505.14677

Check out our work on Visionary-R1, an RL framework designed for visual reasoning. Visionary-R1 significantly outperforms vanilla GRPO, and bypasses the need for explicit chain-of-thought supervision during training.

🌍 GeoArena is live! Evaluate how well large vision-language models (LVLMs) understand the world through image geolocalization. Help us compare models via human preference — your feedback matters! 🔗 Try it now: huggingface.co/spaces/garena2… #GeoArena #Geolocation #LVLM #AI

Proud advisor moment: Shawn @shawnim00 is featured by @WisconsinCS for earning the prestigious NSF Graduate Research Fellowship. Check out the full story here: cs.wisc.edu/2025/06/23/phd…

🎉Our survey on how OOD detection & related tasks have evolved in the VLM and Large VLM era is accepted to #TMLR! The field is finally coming together, and OOD detection & anomaly detection are now at the center in the VLM era. In the LVLM era, UPD (Unsolvable Problem…

Congratulations to Shawn for this incredible honor! 🥳🥳 well deserved!

Excited to share that I have received the NSF GRFP!!😀 I'm really grateful to my advisor @SharonYixuanLi for all her support, to @YilunZhou and @jacobandreas, and to everyone else who has guided me through my research journey! #nsfgrfp

🛟 Reliable & reliability researchers @CVPR! Join our workshop on Uncertainty Quantification for Computer Vision next week! We have a super lineup of speakers (from self-driving to LLMs) and cool posters. 🗓️ Day: Wed, Jun 11 📌Room: 102 B #CVPR2025 #UNCV2025

🚨 We’re hiring! The Radio Lab @ NTU Singapore is looking for PhD students, master's students, undergrads, RAs, and interns to build responsible AI & LLMs. Remote/onsite from 2025. Interested? Email us: [email protected] 🔗 d12306.github.io/recru.html Please spread the word if you can!