SemiAnalysis

@SemiAnalysis_

230k GPUs, including 30k GB200s, are operational for training Grok @xAI in a single supercluster called Colossus 1 (inference is done by our cloud providers). At Colossus 2, the first batch of 550k GB200s & GB300s, also for training, starts going online in a few weeks. As Jensen…

Most GB200 clusters in the world have just a 400G NIC per GPU, except for OpenAI's Stargate, which gives OpenAI 2x stronger scale-out network performance for ultra-large-scale training! As you can clearly see in the image, there are 8 400GbE OSFP cages per compute tray, which means it is 800GbE…
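
The per-GPU figure follows from simple division, sketched below as a rough check rather than anything taken from the post. The post gives 8 cages at 400GbE per compute tray and 400G per GPU for typical clusters; the count of 4 GPUs per compute tray is an assumption based on the standard GB200 NVL72 tray layout, and the helper name `scale_out_gbps_per_gpu` is hypothetical.

```python
# Assumption (not stated in the post): a GB200 NVL72 compute tray holds 4 GPUs.
GPUS_PER_COMPUTE_TRAY = 4


def scale_out_gbps_per_gpu(cages_per_tray: int, gbps_per_cage: int,
                           gpus_per_tray: int = GPUS_PER_COMPUTE_TRAY) -> float:
    """Scale-out bandwidth per GPU, in Gb/s, for one compute tray."""
    return cages_per_tray * gbps_per_cage / gpus_per_tray


# From the post: 8 OSFP cages at 400GbE each per compute tray.
stargate_gbps = scale_out_gbps_per_gpu(cages_per_tray=8, gbps_per_cage=400)

# From the post: most GB200 clusters have a 400G NIC per GPU.
typical_gbps = 400.0

ratio = stargate_gbps / typical_gbps
print(f"{stargate_gbps:.0f} Gb/s per GPU, {ratio:.0f}x the typical cluster")
```

Under that tray assumption, 8 x 400GbE spread over 4 GPUs gives 800GbE per GPU, which matches the 2x claim.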

The photo above and those in this post are from our Stargate I site in Abilene:

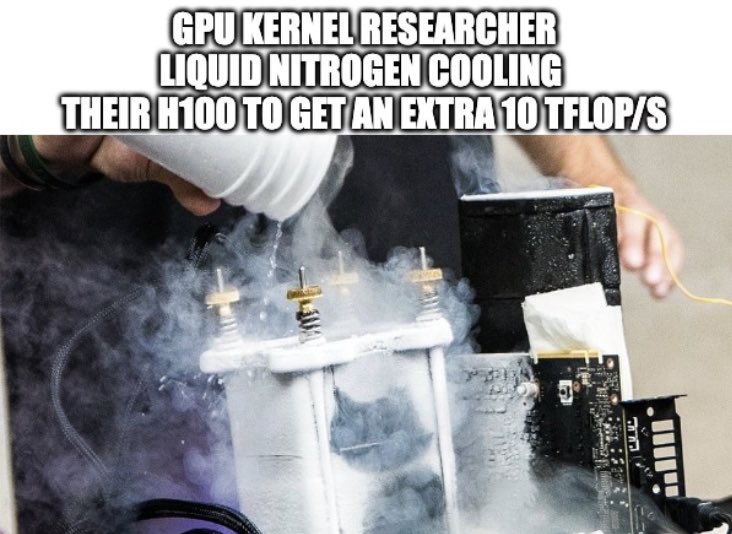

arXiv GPU kernel researcher be like:

- liquid nitrogen cooling their benchmark GPU
- overclock their H200 to 1000W "Custom Thermal Solution CTS"
- nvidia-smi boost-slider --vboost 1
- nvidia-smi -i 0 --lock-gpu-clocks=1830,1830
- use specially binned GPUs where the number…