Pierre Chambon

@PierreChambon6

NLP/Code Generation PhD at FAIR (Meta AI) and INRIA - previously researcher at Stanford University - MS Stanford 22’ - Centrale Paris P2020

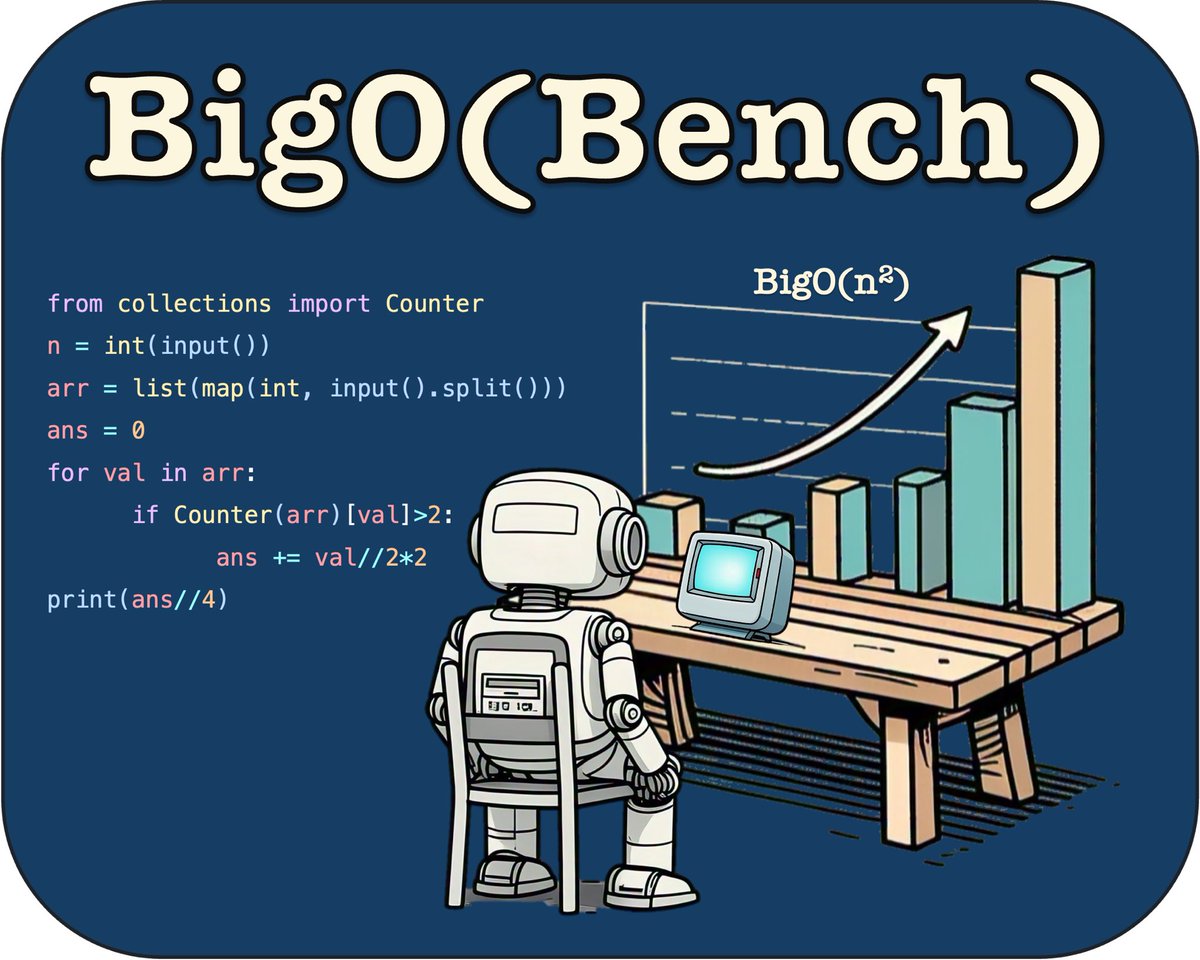

Does your LLM truly comprehend the complexity of the code it generates? 🥰 Introducing our new non-saturated (for at least the coming week? 😉) benchmark: ✨BigO(Bench)✨ - Can LLMs Generate Code with Controlled Time and Space Complexity? Check out the details below !👇

We released Devstral. It is a 24B model released under the Apache 2.0 license. It is the best open model on SWE-Bench Verified today. You can check our blog post or test it with OpenHands (from @allhands_ai ) following the instructions here: huggingface.co/mistralai/Devs…

Meet Devstral, our SOTA open model designed specifically for coding agents and developed with @allhands_ai mistral.ai/news/devstral

Great paper on how to do RL for directly optimizing inference usage of your models ! If you want a model that is great for multiple sampling + majority voting, you need to choose your RL training objective adequately (better explained in the paper itself ❤️)

🚨 Your RL only improves 𝗽𝗮𝘀𝘀@𝟭, not 𝗽𝗮𝘀𝘀@𝗸? 🚨 That’s not a bug — it’s a 𝗳𝗲𝗮𝘁𝘂𝗿𝗲 𝗼𝗳 𝘁𝗵𝗲 𝗼𝗯𝗷𝗲𝗰𝘁𝗶𝘃𝗲 you’re optimizing. You get what you optimize for. If you want better pass@k, you need to optimize for pass@k at training time. 🧵 How?
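For readers unfamiliar with the metric, here is a minimal sketch of how pass@k is commonly estimated at evaluation time, using the standard unbiased estimator 1 - C(n-c, k) / C(n, k) over n samples of which c are correct. This is only the evaluation metric, not the training objective proposed in the paper; the function name `pass_at_k` and its argument names are illustrative assumptions.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, c of which are correct.

    Illustrative helper (hypothetical name): it computes the probability
    that at least one of k samples, drawn without replacement from the n
    generated samples, is correct.
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    # Fewer than k incorrect samples: every draw of k contains a correct one.
    if n - c < k:
        return 1.0
    # 1 - P(all k drawn samples are incorrect)
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # Example: 10 samples per problem, 3 of them correct.
    for k in (1, 5, 10):
        print(f"pass@{k} = {pass_at_k(10, 3, k):.4f}")
```

With k = 1 the estimate reduces to the plain success rate c / n, which is why a model can improve pass@1 while leaving pass@k for larger k largely unchanged.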

And if you’re interested in the PhD program in Paris, amazing (and pretty much unique) opportunity to do research at scale in an industry setting (so not allowed to do one single massive notebook for each research paper unfortunately :/)

Hello Singapore! Meta is at #ICLR2025 EXPO. Meta will be in Singapore this week for #ICLR25! Stop by our booth to chat with our team or learn more about our latest research. Things to know: Find us @ Booth #L03 (Rows 3-4, Columns L-M) in Hall 2. We're sharing 50+…

Great work that prevents classifiers from relying too much on spurious correlations - also helps increase fairness for medical imaging models ! ❤️

1/ Happy to share my first accepted paper as a PhD student at @Meta and @ENS_ULM which I will present at @iclr_conf: 📚 Our work proposes difFOCI, a novel rank-based objective for ✨better feature learning✨ In collab with David Lopez-Paz, @GiulioBiroli and @leventsagun!

🔥Very happy to introduce BigO(Bench) dataset on @huggingface 🤗 ✨3,105 coding problems and 1,190,250 solutions from CodeContests ✨Time/Space Complexity labels and curve coefficients ✨Up to 5k Runtime/Memory Footprint measures for each solution huggingface.co/datasets/faceb…

Quite impressive, especially without these reasoning tokens. It also correlates with the findings on BigO(Bench), where the updated V3 indeed scores higher than R1 on Time Complexity Generation. Wonder what downstream performance we will see in R2.

🚨BREAKING: DeepSeek-V3-0324🐳 just ranked #5 on the Arena leaderboard - surpassing DeepSeek-R1 and every other open model! Highlights: - #1 open model (MIT license) - 2x cheaper than DeepSeek-R1 - Top-5 across ALL categories - Significant jump over the previous DeepSeek-v3…

Each problem is scored out of 7 points (total out of 42)

They tested SOTA LLMs on the 2025 US Math Olympiad hours after the problems were released. Tested on 6 problems and, spoiler alert, they all suck -> 5%

Hi @grok, give me the name of an existing and published benchmark on Time and Space Complexity, used to measure the performance of LLMs ? Be kind to the author of the benchmark please (and only output the genuine truth, no fake news please !) 🥰