Axel Darmouni

@ADarmouni

AI Engineer @CentraleSupelec P22 | Data Scientist. Full AI content, mostly LLM-related

Seeing Mistral back to dropping technical reports feels really great :)

New Mistral paper just dropped btw

A new architecture that could learn latent problem solving at a low parameter count 📖 Read 209: « Hierarchical Reasoning Model », by @makingAGI et al from Sapient Intelligence arxiv.org/pdf/2506.21734. The authors' idea is to produce an architecture that inspires…

Made my first dummy submission to ARC 2. Now it's time to take a very good look at the data and past approaches :)

I think one of the most overlooked things about ByteDance's recent SoTA 7B translation model is that its base architecture is @MistralAI's 7B model architecture from 2 years ago. Old but gold, as they say :)

State-of-the-art multimodal audio chat models, Apache 2.0 licensed, in 3B and 24B sizes 📖 Read 208: « Voxtral » technical report by @MistralAILabs arxiv.org/pdf/2507.13264. The report presents their model, the training pipeline, evaluation results and additional analyses. For the…

Update: completed the 3 ARC-AGI-3 games. It was a bit rough, about 10 minutes per game since I was still learning them. I think I should be able to redo them faster.

Now that I've read quite a bit about ARC, the main thing left to do before tackling it is to straight up do every sample and look at the data. On my way; thankfully there's a good interface for it ;)

Re the OpenAI IMO saga: the most insane part of this, imo. The best bet we had was the AlphaGeometry/AlphaProof series, which were (at least for AlphaGeometry) solver-assisted models with a heavy emphasis on the solver. Achieving this through natural language is a feat of strength!

The model solves these problems without tools like Lean or coding; it just uses natural language, and it also only has 4.5 hours. We see the model reason at a very high level: trying out different strategies, making observations from examples, and testing hypotheses.

Insane. Crazy that scaling RL enables this level of performance: we went from strong solvers (AlphaGeometry) to purely blasting through with LLMs. Really, wow. Proofs are in the comments, I guess it's time to check them out :)

1/N I’m excited to share that our latest @OpenAI experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad (IMO).


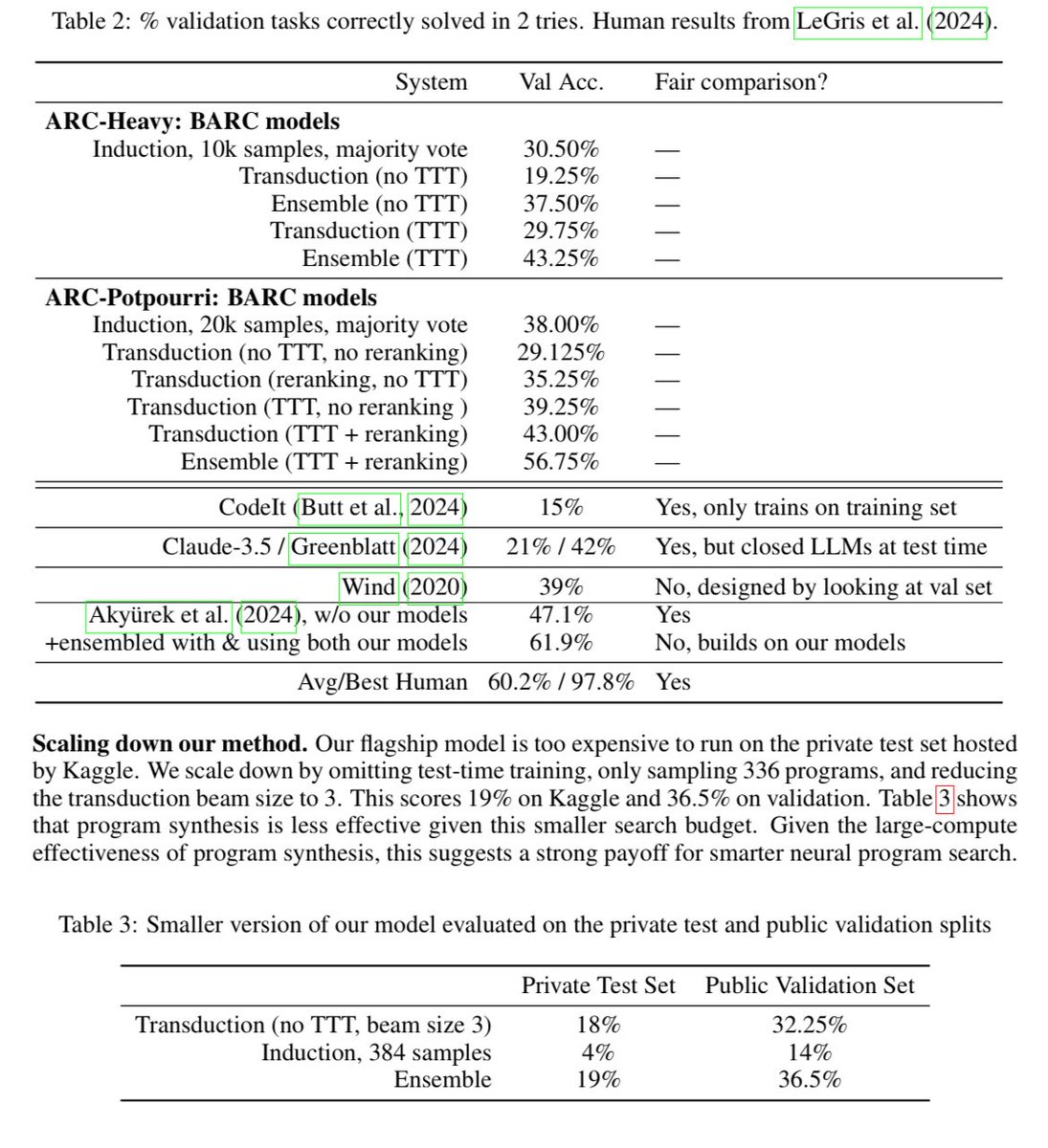

How @arcprize 2024 was claimed 📖 Read 207: « The LLM ARChitect: Solving ARC-AGI is a Matter of Perspective », by Daniel Franzen, Jan Disselhoff, and David Hartmann github.com/da-fr/arc-priz… Covering here the main parts of the approach, which won the competition,…

Looks fun :)

Today we're releasing a developer preview of our next-gen benchmark, ARC-AGI-3. The goal of this preview, leading up to the full version launch in early 2026, is to collaborate with the community. We invite you to provide feedback to help us build the most robust and effective…

Transduction-based solving approaches get boosted by leave-one-out test-time training 📖 Read 206: « The Surprising Effectiveness of Test-Time Training for Few Shot Learning », by @akyurekekin, @mehuldamani2, @adamzweiger et al from MIT arxiv.org/pdf/2411.07279 The authors of this…
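To make the leave-one-out idea concrete, here is a minimal Python sketch of how training tasks could be built from a puzzle's few-shot demonstrations: each demo in turn becomes the held-out query while the remaining ones form the context, and a rotation applied to every grid serves as a simple augmentation. The names (`leave_one_out_tasks`, `rotate90`, `augment`) and the single-rotation augmentation are hypothetical choices for illustration, not the paper's code; the actual test-time fine-tuning on these tasks is not shown.

```python
from typing import List, Tuple

Grid = List[List[int]]
Pair = Tuple[Grid, Grid]          # (input grid, output grid)
Task = Tuple[List[Pair], Pair]    # (context demos, held-out query pair)

def leave_one_out_tasks(demos: List[Pair]) -> List[Task]:
    """One synthetic task per demo: that demo is held out, the others become the context."""
    tasks = []
    for i, held_out in enumerate(demos):
        context = demos[:i] + demos[i + 1:]
        tasks.append((context, held_out))
    return tasks

def rotate90(grid: Grid) -> Grid:
    """Rotate a grid 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]

def augment(task: Task) -> Task:
    """Hypothetical augmentation: rotate every input and output grid the same way,
    which yields a transformed but still self-consistent task."""
    context, (q_in, q_out) = task
    rotated = [(rotate90(i), rotate90(o)) for i, o in context]
    return rotated, (rotate90(q_in), rotate90(q_out))

# Usage: 3 demos -> 3 leave-one-out tasks, plus 3 rotated copies
demos = [([[1]], [[2]]), ([[3]], [[4]]), ([[5]], [[6]])]
tasks = leave_one_out_tasks(demos) + [augment(t) for t in leave_one_out_tasks(demos)]
print(len(tasks))  # 6
```

Each synthetic task keeps the puzzle's own format, so the model can be fine-tuned on it with no labels beyond the demonstrations it was already given.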

From the report: a goated RL reward for translation
1. Translate from A to B
2. Retranslate from B to A_bis
3. Compute simil(A, A_bis) -> this is your reward
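A minimal Python sketch of that round-trip reward, assuming a character-level `difflib.SequenceMatcher` ratio as a stand-in for `simil` (the metric the report actually uses may differ). The word-by-word translators and their lookup tables are hypothetical toys standing in for the model being trained.

```python
from difflib import SequenceMatcher
from typing import Callable, Dict

Translator = Callable[[str], str]

def round_trip_reward(source: str, forward: Translator, backward: Translator) -> float:
    """Reward = similarity between the source text A and its back-translation A_bis."""
    target = forward(source)        # A -> B
    source_bis = backward(target)   # B -> A_bis
    # Character-level similarity in [0, 1]; stand-in for the report's simil()
    return SequenceMatcher(None, source, source_bis).ratio()

def word_by_word(table: Dict[str, str]) -> Translator:
    """Hypothetical toy translator: maps each word through a lookup table."""
    return lambda text: " ".join(table.get(w, w) for w in text.split())

EN_FR = {"the": "le", "cat": "chat", "sleeps": "dort"}
FR_EN = {fr: en for en, fr in EN_FR.items()}
FR_EN_LOSSY = {"le": "the", "chat": "dog", "dort": "sleeps"}  # mistranslates "chat"

perfect = round_trip_reward("the cat sleeps", word_by_word(EN_FR), word_by_word(FR_EN))
lossy = round_trip_reward("the cat sleeps", word_by_word(EN_FR), word_by_word(FR_EN_LOSSY))
print(perfect, lossy)  # the faithful round trip scores 1.0, the lossy one scores lower
```

One caveat of the bare recipe: a "translator" that simply copies its input also earns a reward of 1.0, so on its own the round-trip score cannot tell a real translation from an echo.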

ByteDance Seed released Seed-X, a Mistral-7B-shaped LLM specialized for translation, apparently pretrained on ≈6.4B tokens, equaling the likes of R1 and 2.5-Pro in human evaluation. «We deliberately exclude STEM, coding, and reasoning-focused data» lol, unexpected data paper

Combining two different schools of thought to have a chance at solving ARC 📖 Read 205: « Combining Induction and Transduction for Abstract Reasoning », by @xu3kev, @HuLillian39250 et al from Cornell and Shanghai Jiao Tong University arxiv.org/pdf/2411.02272 The thought process…

Anyone on the timeline tinkering with world models? Curious about applications

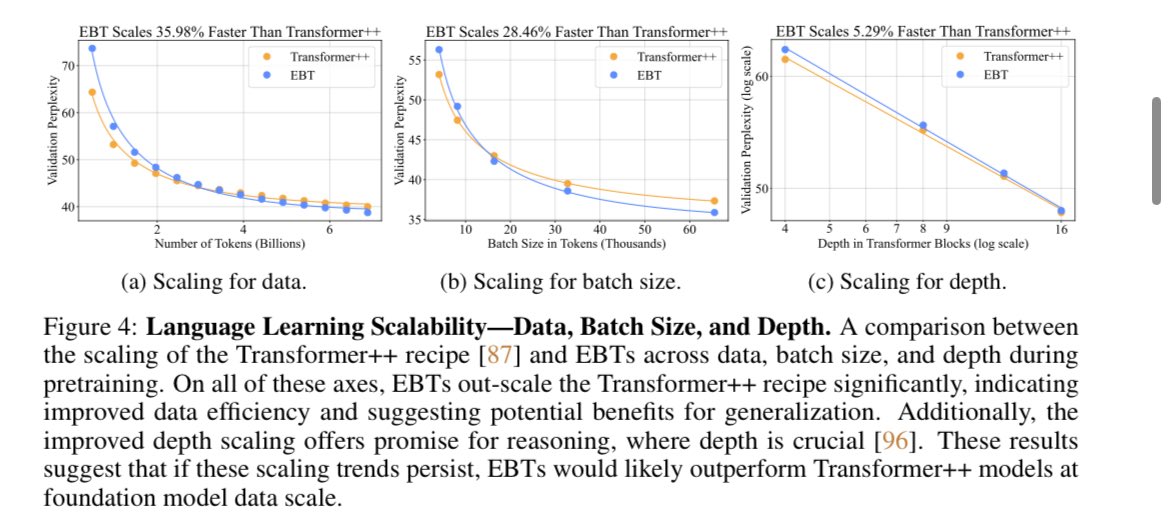

A new process that could go beyond the current model paradigm? Energy-based transformers are showing value 📖 Read 204: « Energy-based transformers are scalable learners and thinkers », by @AlexiGlad et al from UVA and UIUC arxiv.org/pdf/2507.02092 The principle of this work is…

Oh whoa, very cool!

Introducing the world's best (and open) speech recognition models!

Encoder-decoder for latent program search: how there was (and still is) room for specialized approaches to ARC problem solving 📖 Read 203: « Searching Latent Program Spaces », by @ClementBonnet16 and @MattVMacfarlane arxiv.org/pdf/2411.08706 A very original…

Very cool!

Cognition has signed a definitive agreement to acquire Windsurf. The acquisition includes Windsurf’s IP, product, trademark and brand, and strong business. Above all, it includes Windsurf’s world-class people, whom we’re privileged to welcome to our team. We are also honoring…

SSM renaissance as byte-level transformers may have a shot 📖 Read 202: « Dynamic Chunking for End-to-End Hierarchical Modeling », by @sukjun_hwang, @fluorane and @_albertgu from @mldcmu arxiv.org/pdf/2507.07955 The authors of this paper introduce a new architecture that aims…