Michael Posa

@MichaelAPosa

Assoc. Prof. at the University of Pennsylvania, part of the GRASP Lab. PI of the Dynamic Autonomy and Intelligent Robotics Lab.

Presenting my RSS work, Vysics, a Vision-Physics Joint Object Reconstruction framework, at the CVPR 2025 "physics-inspired 3D vision workshop" today: poster #159 in Hall D.

This is a random shout-out, but I genuinely feel that the community currently doesn't have much entropy in exploring different methods for manipulation.

The very first works by @jinwanxin already show the possibilities of model-based dexterous manipulation, with awesome solutions to state estimation, modelling, and planning. These works from this new lab are quite unique, even though others might think this direction is too risky.

🚀 Tracking unseen, highly dynamic objects in contact-rich scenes? Occlusion, blur, and impacts make it tough! Introducing 🔥TwinTrack🔥: a real-time (>20 Hz) tracking framework fusing Real2Sim + Sim2Real, bridging vision and contact physics for robust tracking! GPU-accelerated!…

The United States has had a tremendous advantage in science and technology because it has been the consensus gathering point: the best students worldwide want to study and work in the US because that is where the best students are studying and working. 1/

What a great time at ICRA 2025! We hope you all had a blast at the GRASP Reunion at ICRA! Hope to see you at the next GRASP event! #GRASP #GRASPLab #GRASPatICRA #ICRA2025

My largest remaining NSF grant, which was awarded by a competitive process on the recommendation of national experts, was terminated yesterday. The money would have paid for PhD students to invent better AI systems for everyday people who need programs written for them but ...

These assaults upon independent thought will accomplish nothing more than depriving future generations of the new ideas, medicines, and technologies that would have been developed at one of the crown jewels of our country.

I was thrilled to present my work, "Vysics: Object Reconstruction Under Occlusion by Fusing Vision and Contact-Rich Physics," vysics-vision-and-physics.github.io, at the ICRA 2025 Structured Learning for Efficient, Reliable, and Transparent Robots Workshop. Thanks to the organizers!

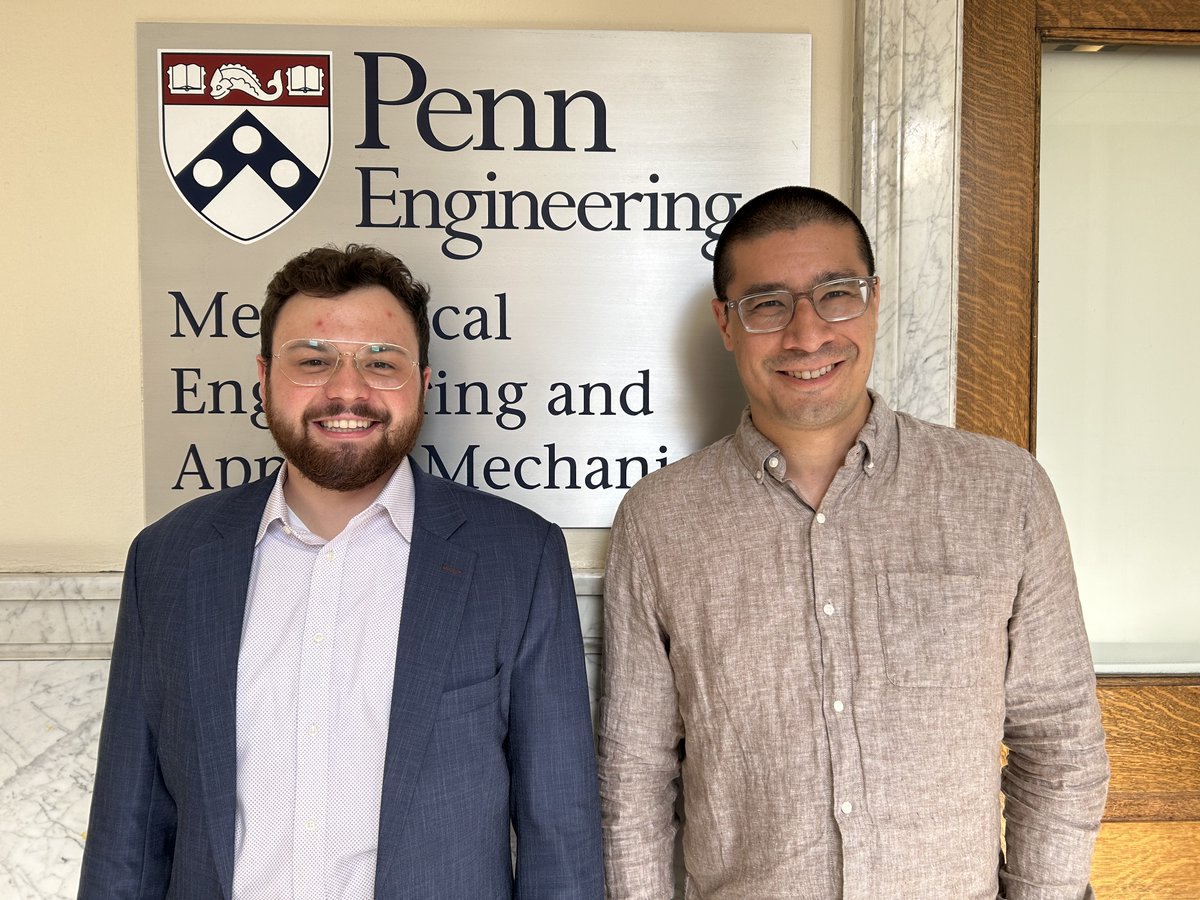

Congratulations to Brian Acosta for defending his doctoral dissertation, “Real-Time Perception and Mixed-Integer Footstep Control for Underactuated Bipedal Walking on Rough Terrain” under the guidance of @MichaelAPosa, Asst. Prof in MEAM. Read more: blog.me.upenn.edu/brian-acosta-d…

Congratulations to Brian Acosta for successfully defending his Ph.D., "Real-time Perception and Mixed-Integer Footstep Control for Underactuated Bipedal Walking on Rough Terrain," on May 6, 2025! @MEAM_Penn @GRASPlab Recording: youtube.com/watch?v=ZqUSpQ…

CONGRATULATIONS to GRASP's own Elizabeth “Bibit” Bianchini and Victoria “Torrie” Edwards on receiving the 2025 John A. Goff Prize!!! For more info: grasp.upenn.edu/news/john-a-go… #GRASP #GRASPLab #JohnAGoffPrize

Check out our newest Inside the GRASP Lab video featuring Dr. Michael Posa and DAIR Lab! youtu.be/zo-SQFYTECs?si… #GRASP #GRASPLab #InsidetheGRASPLab #VideoSeries