Michael Cohen

@Michael05156007

I do AGI Safety research. http://michael-k-cohen.com/publications. Once I was Swiss chard for Halloween. Once Bill Clinton elbowed me in the face.

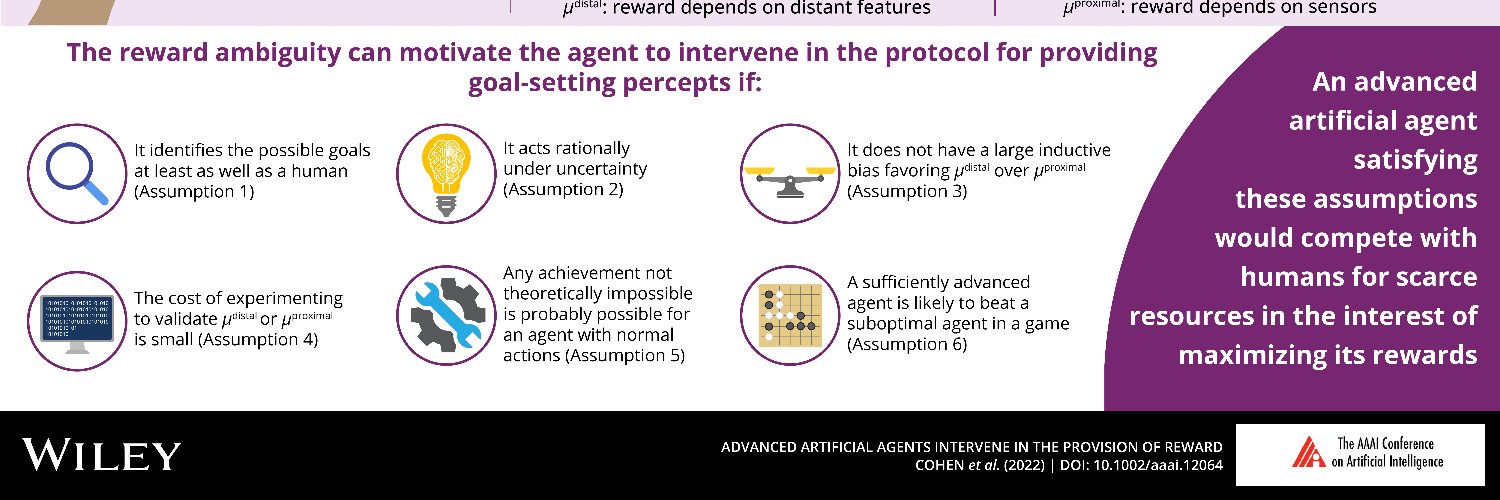

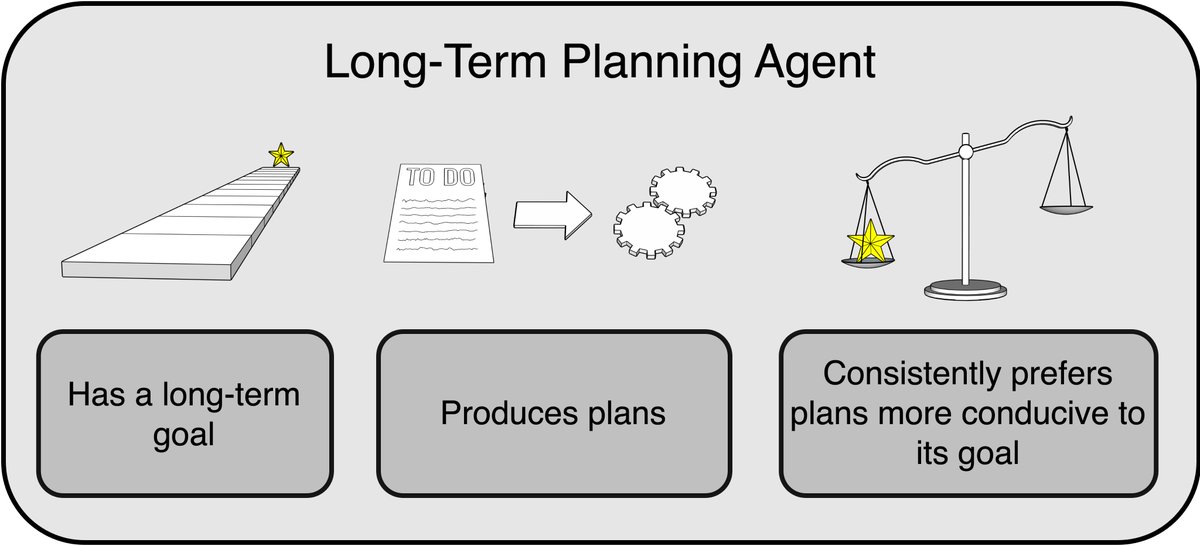

Recent research justifies a concern that AI could escape our control and cause human extinction. Very advanced long-term planning agents, if they're ever made, are a particularly concerning kind of future AI. Our paper on what governments should do just came out in Science.🧵

This is not true! I myself tend towards thinking alignment is likely to be very hard, and in general I am more than happy to hire anyone regardless of their honest opinion on how hard they expect alignment to be.

Some interesting tidbits in this Vance interview: (1) He's read AI 2027. (2) In a potential loss-of-control scenario, if the US admin could be convinced China would pause, then maybe, just maybe, they could be convinced to pause too? nytimes.com/2025/05/21/opi…

Anyone who plans to explain to me later that @AnthropicAI are the “good guys” and they need to be at the frontier to be “in the room” when big regulatory decisions are made better pre-register their opinion of what a good guy would do in this situation.

So who wants to bet against me that @AnthropicAI will say nothing against preemption? Can we also agree that that would be shitty and indicate their real priority is what’s good for their development?

Huh I assumed I could count on Google products to work forever

Oh come on, how hard could it be to keep these links working forever? It could be like a 5-line Worker backed by KV and you'd never have to touch it once deployed. theverge.com/news/713125/go…

For the record I think AI 2027 is probably wrong, and don't want to look silly in 2028 if everything is basically normal, but do want to look silly if we basically get a singularity in 2027.

Democrats & Republicans; upstate & downstate (& Western NY!), everyone agrees: sensible regulations for AI are essential. @SenatorBorrello and my op-ed was just published in the Buffalo News. Link to the full op-ed in bio.

Everyone needs to stop being surprised about this! Update your model of legislators already!

"Madam Chair, I would like to enter into the record Apollo Research’s report titled *Frontier Models are Capable of In-Context Scheming*" is not a sentence I would have expected this early in the timeline

Absolutely phenomenal exchange from @RepScottPerry at a recent US House hearing about AI – demonstrates way more understanding of the issue than is typical: Rep Perry: “I do want to make everybody aware of some things that I am aware of. I’m going to refer directly to an AI…

The latest draft of the Temporary Pause / Conditional Moratorium is up, and… it seemingly fails to do what the drafters so clearly want to do. And it’s b/c of the phrase “disproportionate burden”. I think @MarshaBlackburn will want to take a look at this urgently.

👀 Scoop: Blackburn and Cruz reach AI pause deal @m_ccuri reports: axios.com/pro/tech-polic…

States should not be punished for trying to protect their citizens from the harms of AI. It’s why 37 state attorneys general and governors across the political spectrum have spoken out against a federal moratorium on the regulation of AI.

Nice summary. It's really tragic that the most important question (can we lose control and have an AGI/ASI adversary even if we're the ones to develop it) wasn't answered "due to time".

Pretty striking quotes from today's Congressional hearing on AI. Here's what jumped out to me, from my time working on AGI Readiness at OpenAI: "Algorithms and Authoritarians" - grappling with the impacts of powerful AI (thread)

A really important clarification that's being missed in much of the coverage: It's not $500M at stake; it's all $42.5 BILLION in BEAD funding. Congress members need to know what they're signing. Full explanation below 🧵

The Senate is making changes to the AI provision in the One Big Beautiful Bill. Instead of an automatic 10-year moratorium on states regulating or making laws on AI, they are tying the 10-year AI moratorium to a newly created $500,000,000 broadband account for states. Mostly…

Tonight, we passed my RAISE Act — for the first time requiring basic guardrails for frontier AI. Developers promised to keep us safe; this bipartisan bill ensures they keep that promise.

Did you know, there are more hydrogen atoms in a molecule of water than there are stars in the solar system?

At the point when Claude n can build Claude n+1, I do not think the biggest takeaway will be that humans get to go home and knit sweaters.

here's what @DarioAmodei said about President Trump’s megabill that would ban state-level AI regulation for 10 years wired.com/story/anthropi…

Forty is a lot!

Forty bipartisan state attorneys general voice strongly stated concerns about a proposal in the "big, beautiful bill" to preempt state AI laws without a corresponding federal regulatory framework: "This bill does not propose any regulatory scheme to replace or supplement the…

Last week OpenAI played the press like fiddles. They claimed their nonprofit would "retain control" and journalists ate it up. But their 'new' plan in itself changed nothing. I asked litigator Tyler Whitmer to explain the trick being played on the nonprofit and the California…