Mikhail Parakhin

@MParakhin

My mentor, the person I learned a lot from, has only one functioning arm. He is phenomenal.

Typing is not a bottleneck of Programming. One of the best Programmers I've met in my life didn't have one hand. They typed slowly but strategically. And somehow I've met such people more than once.

Last ICML post, I promise :-) Looks like Ilias Diakonikolas was the most prolific first author, with 7(!) papers. Yang Li was second with *only* 4 :-) And Mihaela van der Schaar was the most prolific author overall with 16 papers, Li Shen second with 15. Geez!

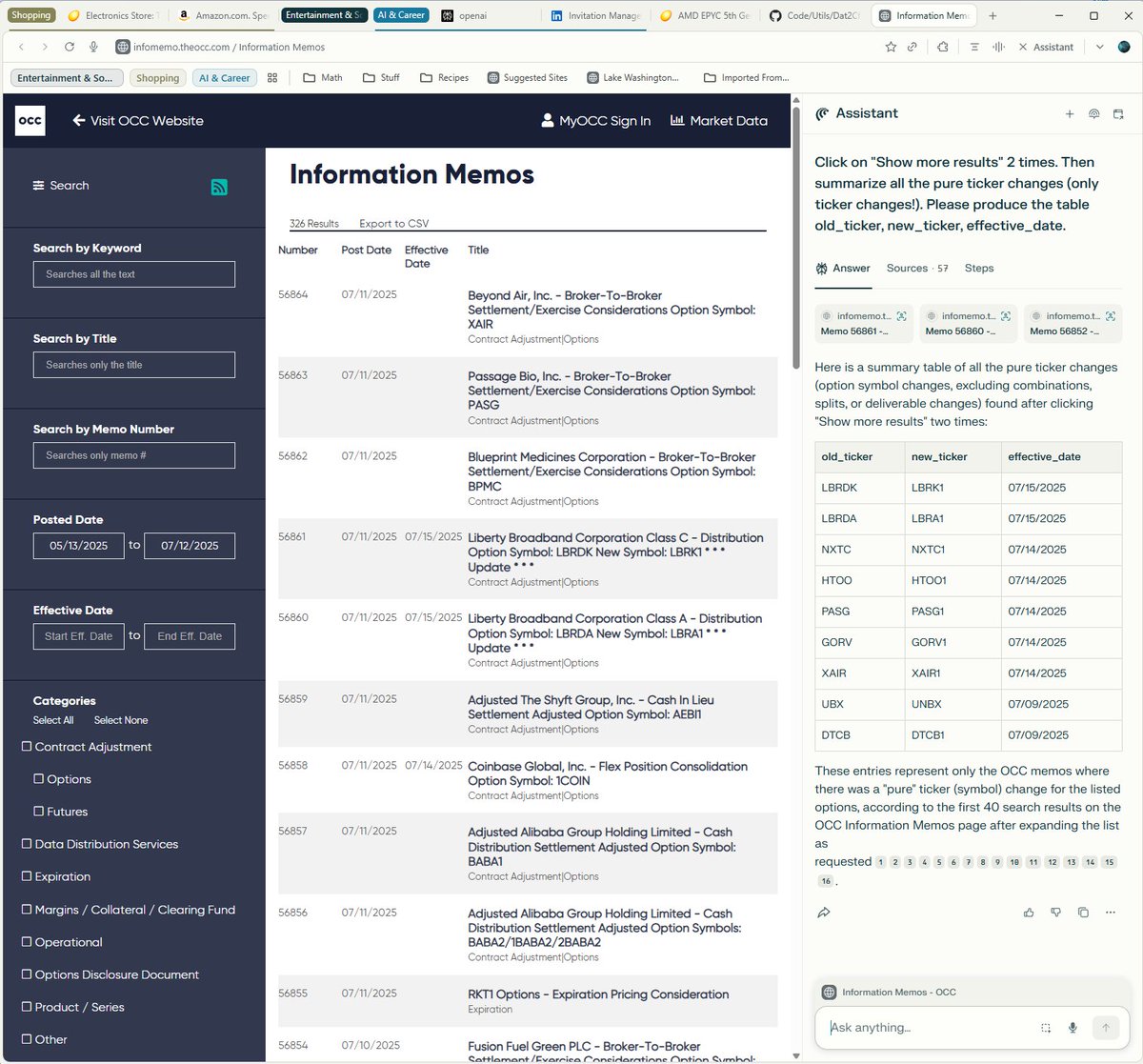

Comet (Perplexity's browser) has been growing on me. Initially I only used Assistant to query busy pages, but the ability to perform actions right inside the browser is really handy, too! My real-life task example on the OCC memos website:

That time of year again! Hope to see many old (and new) friends at ICML.

✨Want to know how we do it? Catch our ICML 2025 Expo Talk "Building Production Ready Agentic Systems: Architecture, LLM-based Evaluation, and GRPO Training" (onsite Monday, 4:30 PDT) to learn all about Sidekick. Preview it in action at shopify.com/magic#demo

I took Grok-4 Heavy through my real-life tests. The "bones" are there, reasoning is strong (no, it's not true they "just overfitted on tests"). But the post-training phase was clearly VERY rushed, surprising for a top-tier model. Good thing it is incrementally improvable!

We need better tests. Anyone doing serious work knows that o3-pro is in a league of its own. I'm personally experimenting with Grok-4-heavy now; the first test was slightly better than o3-pro (it mentioned my favorite LAMB optimizer, where o3-pro didn't :-))

are we serious rn? these are basically all the same. what are we doing here??

Looks like we are getting the best new large model and the best new small model today…

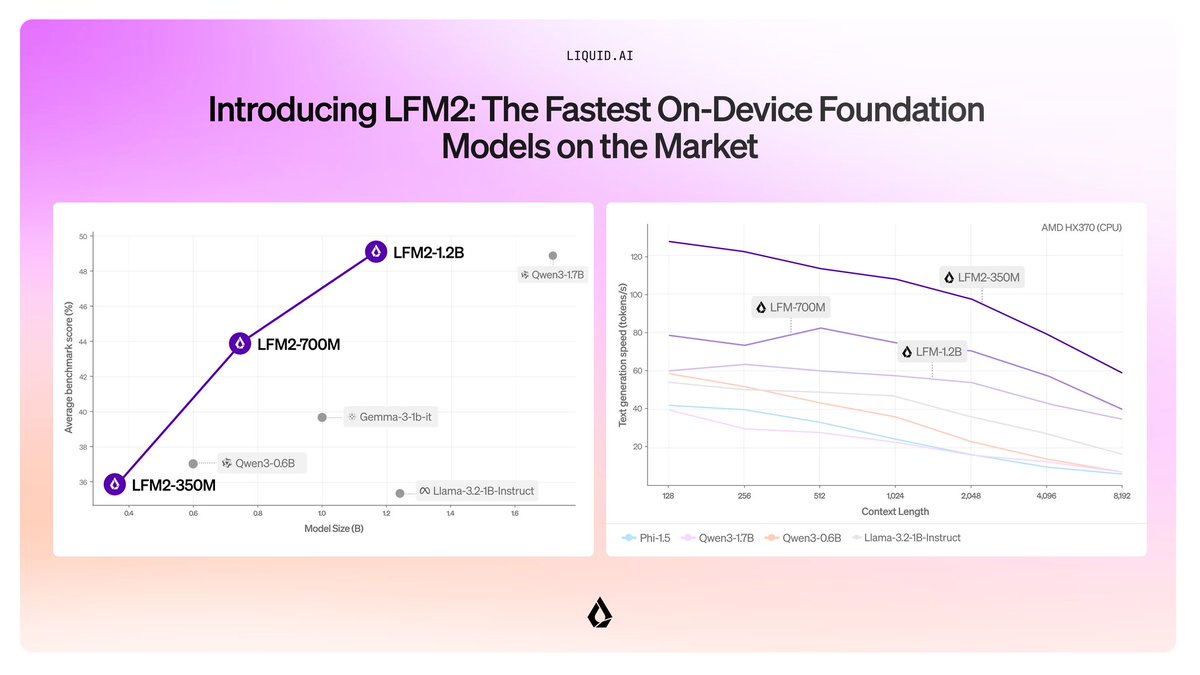

Today, we release the 2nd generation of our Liquid foundation models, LFM2. LFM2 set the bar for quality, speed, and memory efficiency in on-device AI. Built for edge devices like phones, laptops, AI PCs, cars, wearables, satellites, and robots, LFM2 delivers the fastest…

I haven’t played with the new Grok yet, but I have used the new Liquid v2 models and they are by far the best in the small-and-fast class.

Shopify has been more and more active in ML lately :-)

T-minus 1 week to #ICML2025 where Shopify debuts as a Platinum Sponsor! 🚀 Check out our latest blog post on how we use ML to make commerce better for everyone, or catch our Sidekick agent Expo Talk onsite. 🤖 shopify.engineering/machine-learni…

My first NeurIPS was 2001, alas merciless time has destroyed the first two mugs, this is the oldest in my collection. Just discovered a bug: in 2003 and 2006 mugs were identical!

That was my first nips!

At Shopify, we literally chastise people for spending too LITTLE on LLMs while coding. I unironically run a competition on who could spend the most without using scripting and reward the winner. It came up because one week I was the top spender - and that wasn't OK.

Imagine your CEO, CFO, and Head of Eng looking at a list of your company’s top AI coding agent users, and you’re at the very top in usage and spend. Is that a good thing or bad thing at your company?

OpenAI has been flighting O3 Pro for a while. Clearly the best at math (haven’t tried DeepThink), ability to use tools and Web is really nice, longer context window helps a ton. But the desire to put everything into a table (misformatted at that) is so infuriating…

SimilarWeb is severely underestimating Copilot usage (most engagements are in Windows/Edge and on Microsoft properties, no SimilarWeb pixels there). But even so, I wish Copilot grew faster over the last year, of course.

Graphic from the WSJ. I honestly feel that if MS had just let Bing be Bing this would look very different today. Suddenly the whole game is about model personalities. That wonderful willful creature would have charmed the hell out of the public.