Hermann

@KumbongHermann

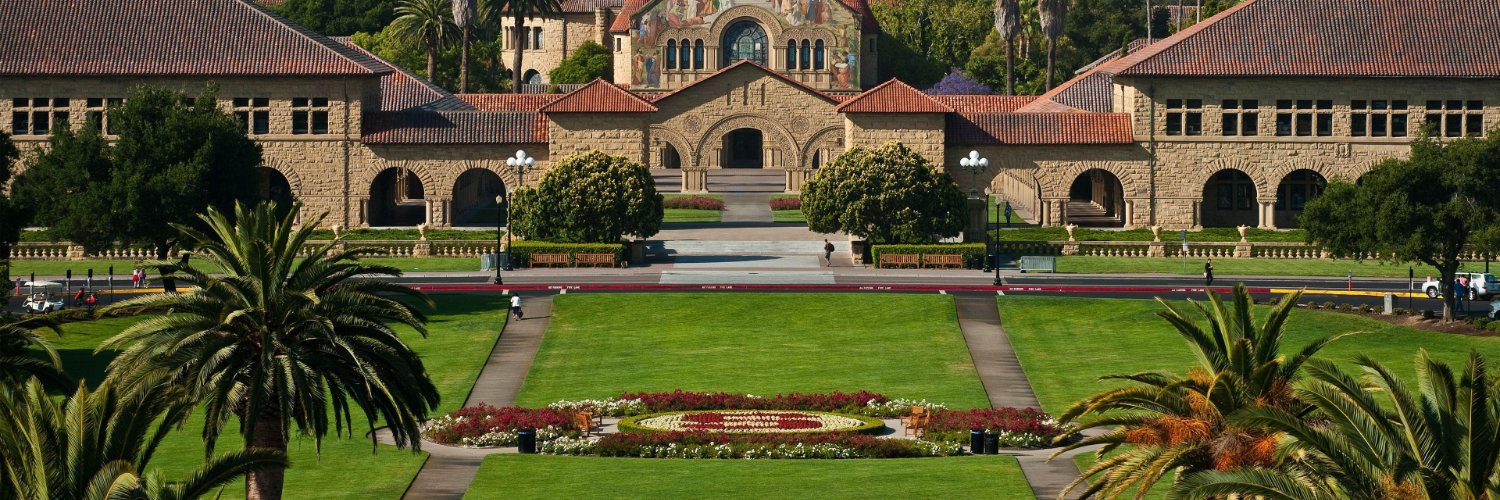

CS PhD @Stanford, @KnightHennessy

Tired of your 1T-param language model's loss plateauing around 0.6-1.3? Simple solution: cheat by learning a latent language with better characteristics than English! Provocative title aside, I explored whether machines could develop their own "language" optimized for AI rather than for humans. 🧵

Excited to share our latest at ICML 2025: pushing LoRA fine-tuning below 2 bits per parameter (as low as 1.15 bits), unlocking up to 50% memory savings. Another step toward cheaper, democratized LLMs on commodity hardware! w/ the amazing team: @zhou_cyrus68804 @KumbongHermann @KunleOlukotun

🚀 New #ICML2025 drop! LowRA slashes LoRA to 1.15 bits / param and outperforms every sub-4-bit baseline. w/ @qizhengz_alex @KumbongHermann @KunleOlukotun 👇 (1 / N)

Check out our work LowRA at #ICML2025, led by @zhou_cyrus68804. LowRA lets you do LoRA fine-tuning with as few as 1.15 bits / param.

We’re thrilled to collaborate with @HazyResearch at @StanfordAILab, led by Chris Ré, to power Minions, their cutting-edge agentic framework tackling the cost-accuracy tradeoff in modern AI systems. This innovation is enabled on AMD Ryzen AI, thanks to seamless integration with…

HF 🔜 huggingface.co/nvidia/HMAR PAPER: arxiv.org/abs/2506.04421 CODE: github.com/NVlabs/HMAR

✨ Test-Time Scaling for Robotics ✨ Excited to release 🤖 RoboMonkey, which characterizes test-time scaling laws for Vision-Language-Action (VLA) models and introduces a framework that significantly improves the generalization and robustness of VLAs! 🧵(1 / N) 🌐 Website:…

Happy to share that our HMAR code and pre-trained models are now publicly available. Please try them out! Code: github.com/NVlabs/HMAR Checkpoints: huggingface.co/nvidia/HMAR

Excited to be presenting our new work, HMAR: Efficient Hierarchical Masked Auto-Regressive Image Generation, at #CVPR2025 this week. VAR (Visual Autoregressive Modelling) introduced a very nice way to formulate autoregressive image generation as a next-scale prediction task (from…

1/10 ML can solve PDEs, but precision 🔬 is still a challenge. Toward high-precision methods for scientific problems, we introduce BWLer 🎳, a new architecture for physics-informed learning that achieves (near-)machine precision (RMSE as low as 10⁻¹²) on benchmark PDEs. 🧵How it works:

LLMs often generate correct answers but struggle to select them. Weaver tackles this by combining many weak verifiers (reward models, LM judges) into a stronger signal using statistical tools from Weak Supervision—matching o3-mini-level accuracy with much cheaper models! 📊

LLMs can generate 100 answers, but which one is right? Check out our latest work closing the generation-verification gap by aggregating weak verifiers and distilling them into a compact 400M model. If this direction is exciting to you, we’d love to connect.

How can we close the generation-verification gap when LLMs produce correct answers but fail to select them? 🧵 Introducing Weaver: a framework that combines multiple weak verifiers (reward models + LM judges) to achieve o3-mini-level accuracy with much cheaper non-reasoning…

Very cool class!!

Wrapped up Stanford CS336 (Language Models from Scratch), taught with an amazing team @tatsu_hashimoto @marcelroed @neilbband @rckpudi. Researchers are becoming detached from the technical details of how LMs work. In CS336, we try to fix that by having students build everything:

We really liked VAR's formulation of image generation. During @KumbongHermann's internship, we noticed a few aspects that could be improved. The result: a better & faster multi-scale autoregressive image generation framework. Come to our poster at #CVPR2025 this week! 🥳

Announcing HMAR: Efficient Hierarchical Masked Auto-Regressive Image Generation, led by @KumbongHermann! HMAR is hardware-efficient: it reformulates autoregressive image generation so that it can take advantage of tensor cores. Hermann is presenting it at CVPR this week!