Wu Haoning

@HaoningTimothy

PhD Nanyang Technological University🇸🇬, BS @PKU1898, cooking VLMs in @Kimi_Moonshot.

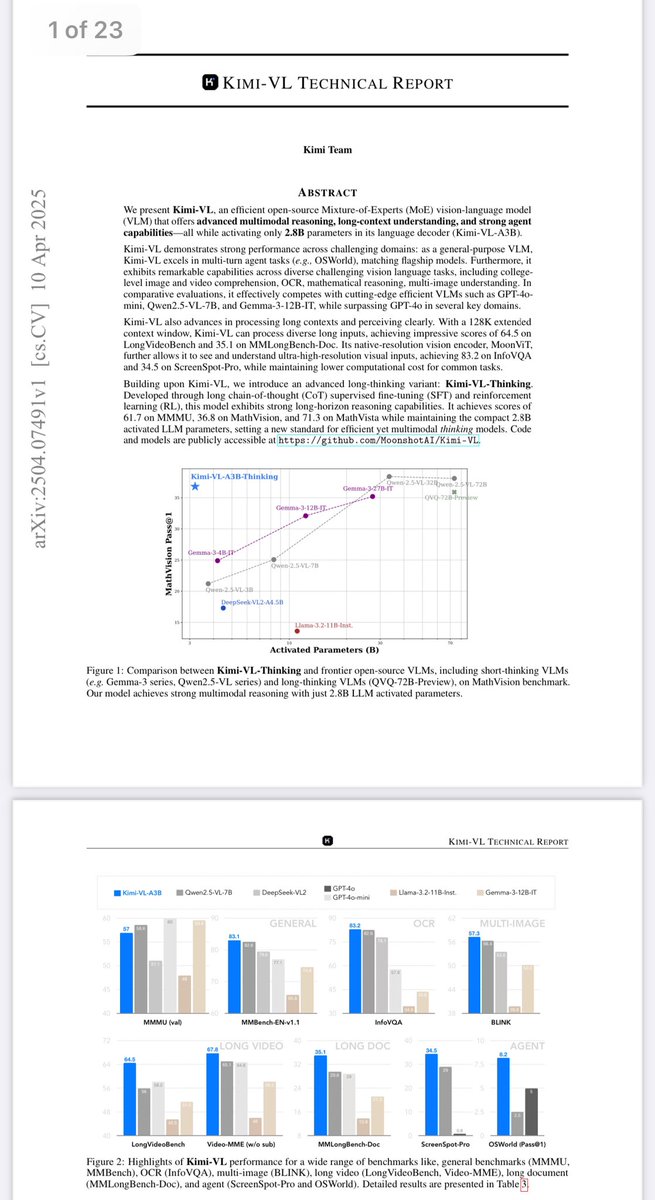

Kimi-VL’s technical report is out on arXiv! arxiv.org/abs/2504.07491

I joined Kimi the same day R1 was released. Explored a lot, witnessed a lot. Dr. 🐻’s blog reflects the thoughts of many technical staff over these months. Working towards something we can feel proud of, again.

Some of my own thoughts and opinions behind K2: bigeagle.me/2025/07/kimi-k…

🔥MGPO paper and code are finally released! MGPO equips LMMs with o3-style interpretable, multi-turn visual grounding: predicting key regions, cropping sub-images, and reasoning in a task-driven manner. Check out our paper for more details! 📄 Paper: arxiv.org/abs/2507.05920

Wow, there is a larger Qwen3.

>>> Qwen3-Coder is here! ✅ We’re releasing Qwen3-Coder-480B-A35B-Instruct, our most powerful open agentic code model to date. This 480B-parameter Mixture-of-Experts model (35B active) natively supports 256K context and scales to 1M context with extrapolation. It achieves…

Great to see that the original Kimi-VL models get 100K+ monthly downloads on HF! Hope the new 2506 gets even more downloads! P.S. Would any providers like to host this small model? @novita_labs @replicate @GroqInc @hyperbolic_labs Would love to help with thinking-template related issues!

🚨 BREAKING: @Kimi_Moonshot’s Kimi-K2 is now the #1 open model in the Arena! With over 3K community votes, it ranks #5 overall, overtaking DeepSeek as the top open model. Huge congrats to the Moonshot team on this impressive milestone! The leaderboard now features 7 different…

🚀 Hello, Kimi K2! Open-Source Agentic Model! 🔹 1T total / 32B active MoE model 🔹 SOTA on SWE Bench Verified, Tau2 & AceBench among open models 🔹Strong in coding and agentic tasks 🐤 Multimodal & thought-mode not supported for now With Kimi K2, advanced agentic intelligence…

This (non-reasoning) model shows incredible performance across SWE-bench verified, LCBv6, GPQA-Diamond and more. Shoutout to the @Kimi_Moonshot team for their SOTA work. @crystalsssup @yzhang_cs @HaoningTimothy

Kimi-K2 tops EQ-Bench, the benchmark that measures emotional intelligence.

Sure, when I shared it, many comments asked why the loss decreases so fast at the end; but on the other hand, it is a good chance for more people to learn about WSD?

Seems like many don’t know abt WSD lol
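WSD here refers to the Warmup-Stable-Decay learning-rate schedule: a short warmup, a long phase at a constant peak learning rate, then a short final phase where the learning rate is annealed down. The fast loss drop at the end of a WSD run is typically attributed to that final decay phase. Below is a minimal Python sketch of the schedule's shape; the function name `wsd_lr`, the phase fractions (1% warmup, 10% decay), and the linear decay shape are illustrative assumptions, not the settings used for any Kimi model.

```python
def wsd_lr(step: int, total_steps: int, peak_lr: float,
           warmup_frac: float = 0.01, decay_frac: float = 0.1,
           min_lr: float = 0.0) -> float:
    """Illustrative Warmup-Stable-Decay schedule (hypothetical helper).

    Phases: linear warmup -> constant peak -> linear decay to min_lr.
    Phase fractions and the linear decay shape are assumptions.
    """
    warmup_steps = max(1, int(total_steps * warmup_frac))
    decay_steps = max(1, int(total_steps * decay_frac))
    decay_start = total_steps - decay_steps

    if step < warmup_steps:
        # Warmup: ramp linearly from a small value up to peak_lr.
        return peak_lr * (step + 1) / warmup_steps
    if step < decay_start:
        # Stable: hold the peak learning rate for most of training.
        return peak_lr
    # Decay: anneal linearly from peak_lr to min_lr over the final steps.
    progress = min(1.0, (step - decay_start) / decay_steps)
    return peak_lr + (min_lr - peak_lr) * progress


if __name__ == "__main__":
    total = 1000
    for s in (0, 9, 500, 900, 950, 999):
        print(s, wsd_lr(s, total, 3e-4))
```

For example, `wsd_lr(950, 1000, 3e-4)` sits halfway through the decay phase and returns half the peak rate. Linear decay is used here only for simplicity; cosine and other annealing shapes are also common.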

Yeah, it is a prototype (much like Moonlight was for K2): surely not as smart as the mighty K2, but it still manages to be smart enough at its A3B scale. (It also has a free HF space @huggingface huggingface.co/spaces/moonsho…)

me after seeing the kimi2 results yesterday

huggingface.co/moonshotai/Kim… Congratssssss to all super-talented colleagues participating in this!!!

THE BIG & BEAUTIFUL THING

> Oh. Is Kimi doc leaking their next generation model? > Probably the largest MoE that I've seen > Kimi K2, 1T total params, 32B active params > Super strong on coding & agent > 128k context length > no VL > support ToolCalls, JSON Mode, search platform.moonshot.cn/docs/pricing/c…

Bruh! Something crazy just happened - found someone using ClaudeCode to connect with Kimi's new model (looks like K2)! Got a test key to try it out and honestly... this combo is absolutely insane! 🤯

We're going to update the SWE-bench leaderboards soon- lots of new submissions, including 3 systems for SWE-bench Multimodal :) We will also release SWE-agent Multimodal. w/ @jyangballin @_carlosejimenez @KLieret

huggingface.co/spaces/moonsho… We have updated the demo of the new thinking model with new features to explore beyond common image dialog:
- OS-Agent Grounding (P1)
- PDF Understanding (P2, P3, P4) --> upload xx.pdf directly
- Video Understanding --> upload xx.mp4 directly

@mervenoyann

huggingface.co/blog/moonshota… A quick guide for everyone to run inference with Kimi-VL-2506 @Kimi_Moonshot locally. With only 3B activated params and vLLM, it can be really fast, not only at recognizing Ragdolls and solving problems, but also at generating deep analyses of arXiv papers or long videos!

Download and try this updated model!

fav open-source multimodal reasoning model just got an update 🔥 Kimi-VL-A3B-Thinking-2506 > smarter with less tokens, small size (only 3B active params!!!) > better accuracy > video reasoning > higher resolution 🤯

Made some humble contributions to this.

Well done @Kimi_Moonshot huggingface.co/moonshotai/Kim…

🧑🏫️ We just released a monster blog on everything VLMs 👀 🔥 New trends & breakthroughs (any-to-any, reasoning, smol, MoEs, VLAs...) 🧠 How the field evolved in the last year (agentic VLMs, video, RAG...) 🤖 Alignment & benchmarks Curious about VLMs? This is your read👇