Tiezhen WANG

@Xianbao_QIAN

Engineer at HuggingFace, ex-Googler on TFLite / micro. Ideas are my own.

My 2025 Predictions for the OS/AI Frontier

1. Core Trend: Pure RL-Driven Superhuman Reasoning. Pure reinforcement learning will unlock human-bias-free, nimble models capable of self-evolved reasoning with rule-based rewards, surpassing human performance in specialized domains…

We're leveling up the game with our latest open-source models, Qwen2.5-1M! 💥 Now supporting a 1 MILLION TOKEN CONTEXT LENGTH 🔥 Here's what's new: 1️⃣ Open Models: Meet Qwen2.5-7B-Instruct-1M & Qwen2.5-14B-Instruct-1M, our first-ever models handling 1M-token contexts! 🤯 2️⃣…

Intern-S1, a new multimodal model from @intern_lm:

- 235B MoE + 6B vision encoder
- 5T multimodal tokens & 2.5T scientific-domain tokens
- A great model for AI4S research
- Supports tool calling

Model on @huggingface: huggingface.co/internlm/Inter…

Slides for my lecture “LLM Reasoning” at Stanford CS 25: dennyzhou.github.io/LLM-Reasoning-… Key points:

1. Reasoning in LLMs simply means generating a sequence of intermediate tokens before producing the final answer. Whether this resembles human reasoning is irrelevant. The crucial…

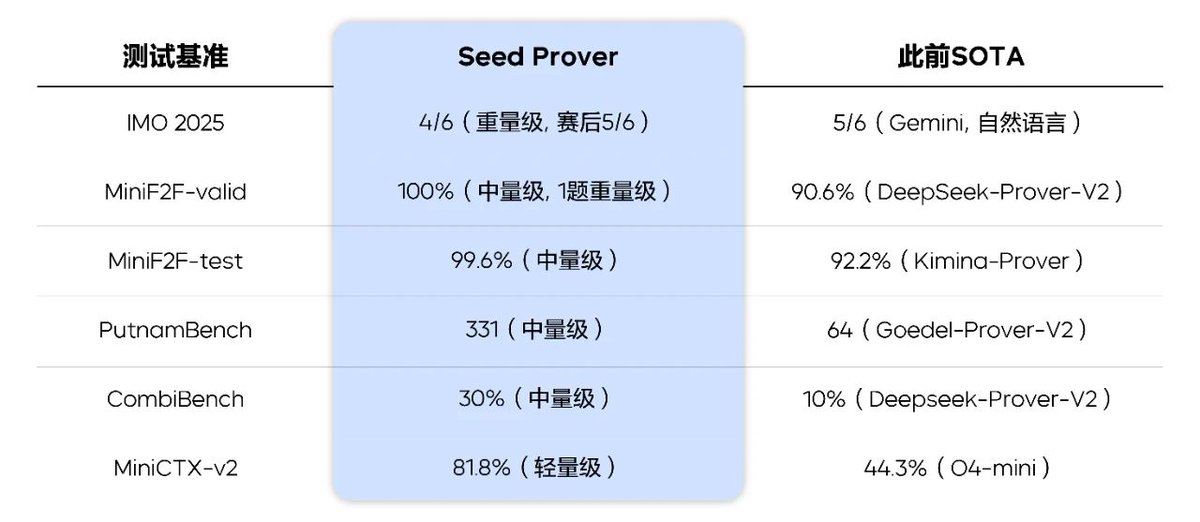

Seed Prover solved 4 out of 6 IMO questions in 3 days and got Silver. Proof: github.com/ByteDance-Seed… Big congratulations to @huajian_xin! Now you know what I'm going to kindly ask: would you consider open-sourcing it? :D

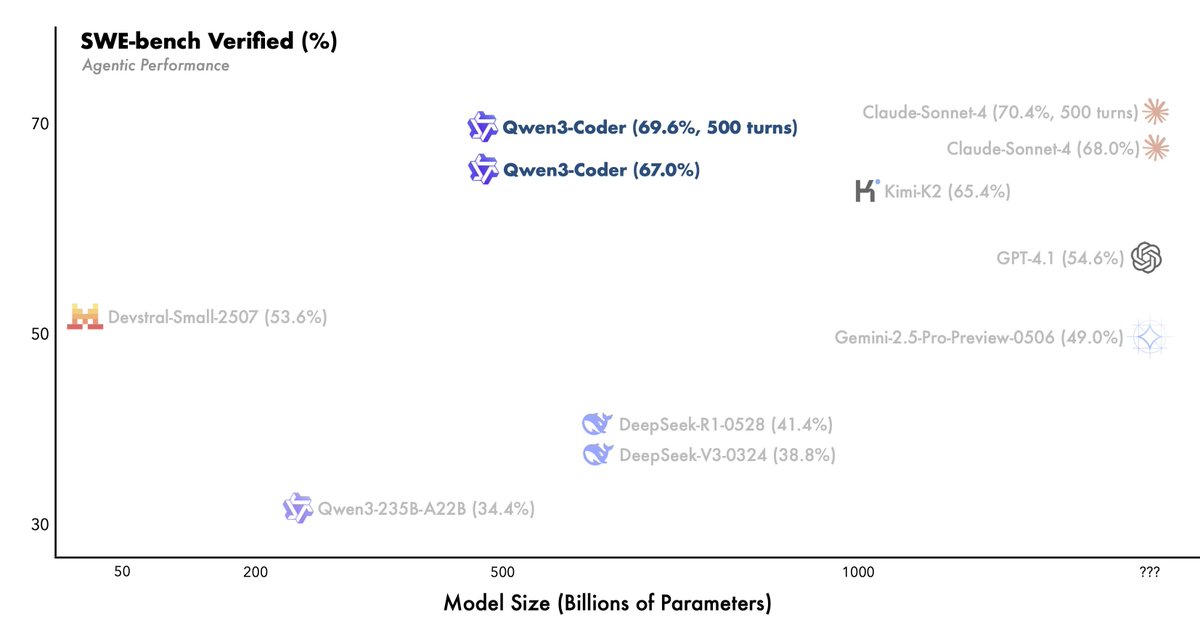

It turns out that Qwen/Qwen3-235B-A22B-Instruct-2507 is just the appetizer; the new Qwen3 Coder is the main course! An amazing Apache 2.0 model that claims to beat Sonnet-4 on many dimensions.

Nice article on how to tune the performance of prefill-decode (PD) disaggregation for very LARGE models: huggingface.co/blog/yiakwy-xp…

The true value of open source lies not just in the released foundation model itself but, more importantly, in the fine-tuned models it enables. Derived from SmolLM3-3B, quantized and adapted to NPUs, AXERA-TECH/SmolLM3-3B is a great example of the power of the SmolLM ecosystem…

Let's talk about HopeJR in 2 mins! youtube.com/watch?v=G6tt_8…

Livestream of building your own HopeJR. 111 people online and almost 4,000 likes after 5 hours of livestreaming. Amazing! Thanks @bilibili_en for the support. Link below:

Here it comes: youtube.com/watch?v=qlKpr0…

Tonight, we’re hosting a live conversation with the authors behind the recent most-liked @HuggingPapers on AI-for-Science evaluation and agents. Got a burning question for them? Drop it below and we’ll try to work it into the discussion. - ScienceBoard: Evaluating Multimodal…

Great technical report on Zhihu

🧠 Zhihu contributor & @Kimi_Moonshot dev Dylan shares his thoughts on building Kimi K2: Why RL? Because compute may be infinite, but data is not. RL improves data efficiency; that's why we invest in scaling test-time compute. Why large models? Why the Muon optimizer? → It's all…

HopeJR: Hello world! How about the first humanoid-building livestream? We're finishing up assembling the first arm of HopeJR, so I'm thinking about a half-day livestream tomorrow to show the progress as we build. Tomorrow night, Martino and @seeedstudio will go through the…