Francesco Faccio @ ICLR2025 🇸🇬

@FaccioAI

Senior Research Scientist @GoogleDeepMind Discovery Team. Previously PhD with @SchmidhuberAI. Working on Reinforcement Learning.

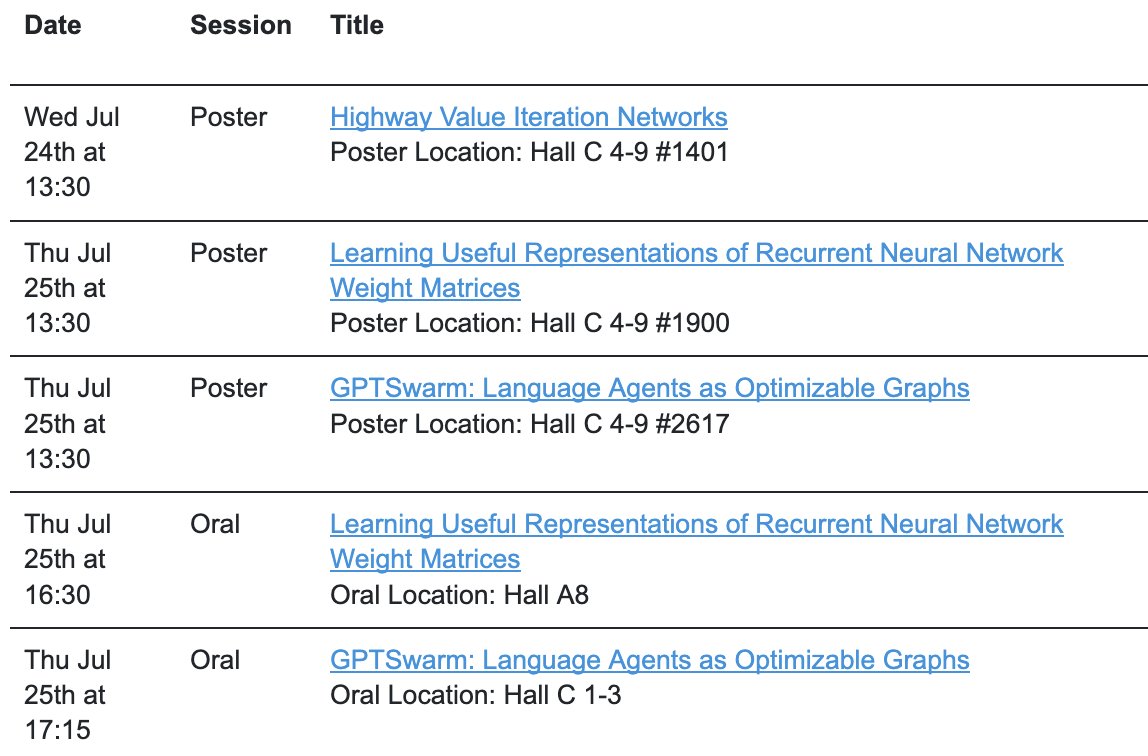

Two of our #ICML papers were selected for an oral presentation (Top 1.5%)! 1. Learning Useful Representations of Recurrent Neural Network Weight Matrices (arxiv.org/abs/2403.11998) 2. GPTSwarm: Language Agents as Optimizable Graphs (arxiv.org/abs/2402.16823)

I'm in Singapore for #ICLR2025! DM me if you’d like to meet and chat about Creativity and Curiosity in AI, AGI, Agents, or exciting opportunities at @GoogleDeepMind. You might even get a free Italian coffee ☕️ :)

If you are working on World Models, you should definitely submit your paper to our #ICLR2025 workshop!

🚨 4 Days Left! 🚨 Our ICLR 2025 Workshop: "World Models: Understanding, Modelling, and Scaling" is calling for submissions! 📢 📅 Submission Deadline: February 10, 2025 (AoE) 🔗 openreview.net/group?id=ICLR.… The workshop covers the widest range of topics on world models, including…

I'm thrilled to announce that I'm joining the Discovery team at @GoogleDeepMind in London as a Senior Research Scientist starting this January! It's incredible what the team has achieved in the past decade and I am so looking forward to more scientific discoveries with AI.

If you are interested in using AI for scientific discovery, this project might be perfect for you!

I am hiring 3 postdocs at #KAUST to develop an Artificial Scientist for discovering novel chemical materials for carbon capture. Join this project with @FaccioAI at the intersection of RL and Materials Science. Learn more and apply: faccio.ai/postdoctoral-p…

I had an amazing time in Riyadh meeting with many industrial partners and presenting our recent advancements! #GlobalAISummit #GainSummit

#KAUST’s experts are joining the @globalaisummit 2024 in Riyadh. Discover how we're driving #AI innovation for a sustainable future and contributing to #SaudiVision2030. #GlobalAISummit #GainSummit

Had a great time presenting at #ICML2024 alongside @idivinci & @LouisKirschAI. But the true highlight was @SchmidhuberAI himself capturing our moments on camera! #AI #DeepLearning

🚀 Overdue launch of my personal website faccio.ai! Check out my latest AI projects. I would like to thank my ancestors for giving me a last name that means "I make" in Italian. So, yes, I make #AI 🤖

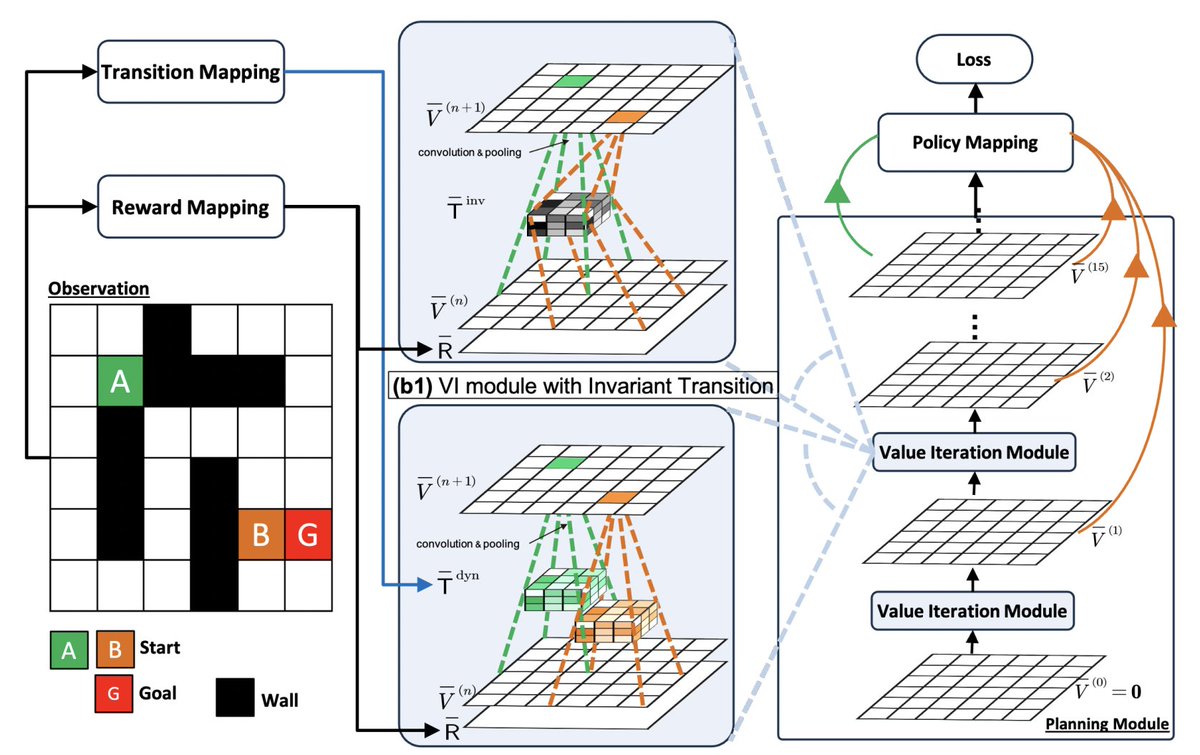

Our paper, "Scaling Value Iteration Networks to 5000 Layers for Extreme Long-Term Planning," was accepted at #EWRL. Congratulations to Yuhui Wang and the team! Paper: arxiv.org/abs/2406.08404 #AI #DeepLearning #RL

Heading to #ICML2024 for a busy week with 3 posters and 2 oral presentations. If you’re interested in discussing collaborations, visiting, or hiring opportunities at @AI_KAUST with @SchmidhuberAI, feel free to connect!

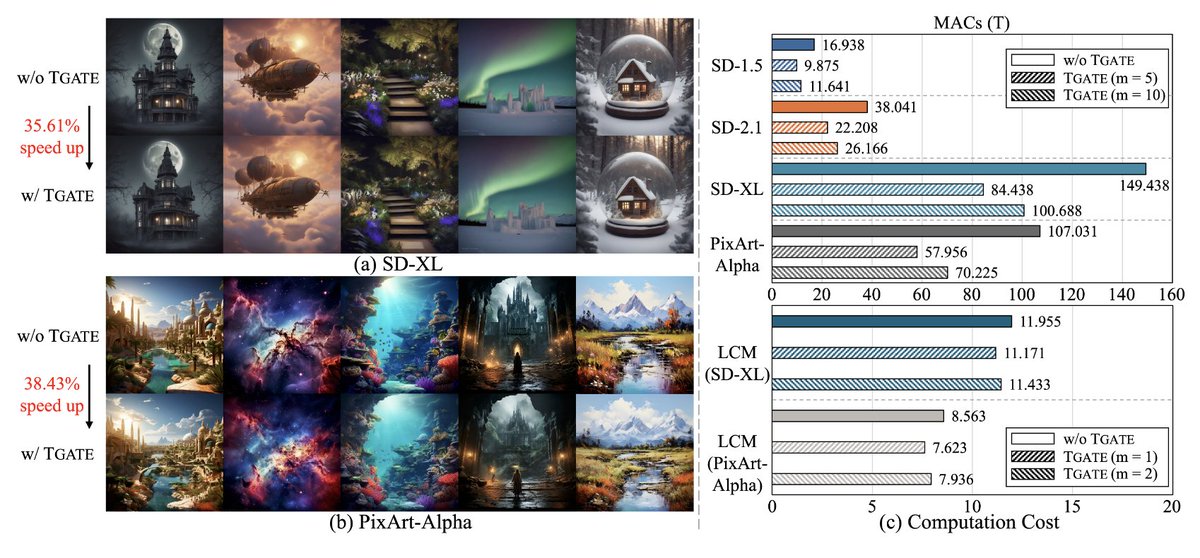

🚀Want to cut inference times by up to 50% and save money when using Transformer/CNN/Consistency-based diffusion models? Check out our latest work on Faster Diffusion Through Temporal Attention Decomposition led by @HaoZhe65347, featuring @SchmidhuberAI. Paper:…

Can neural networks with 5000 layers improve long-term planning? 🤖 Check out our latest research with @SchmidhuberAI, @oneDylanAshley, and team: arxiv.org/abs/2406.08404 #AI #DeepLearning #RL

Recently, I had the pleasure of giving a talk at Microsoft Research Asia in Beijing on 'Learning to Extract Information from Neural Networks.' Met lots of brilliant minds there! #MSFTResearch

Check out our most recent work!

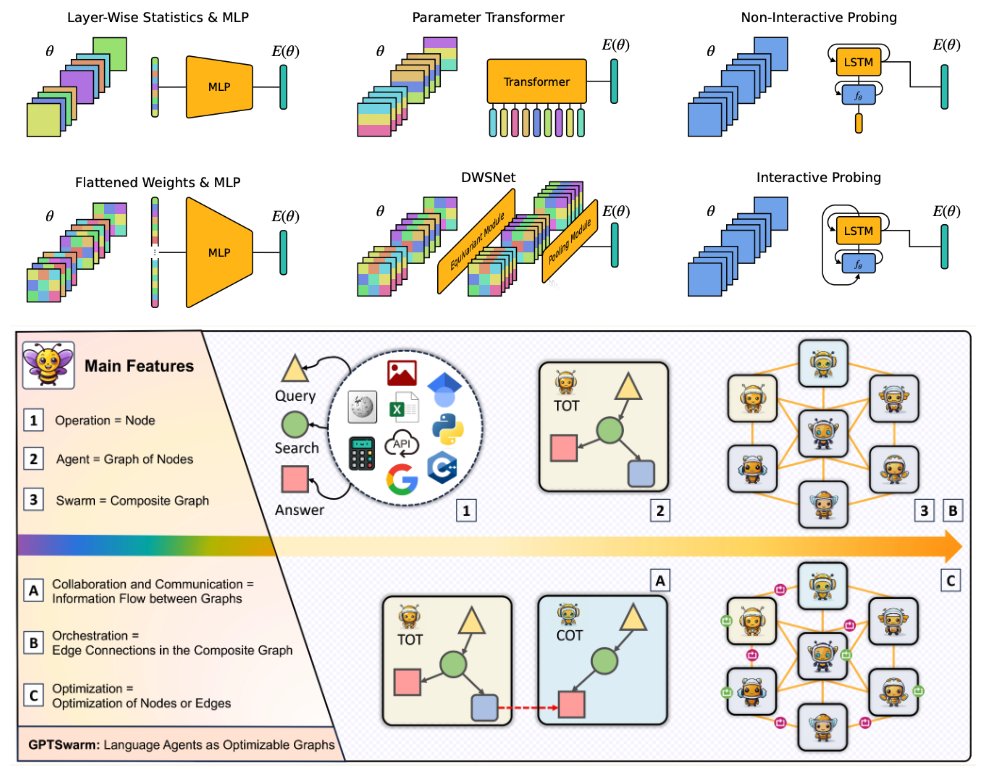

Our #GPTSwarm models Large Language Model Agents and swarms thereof as computational graphs reflecting the hierarchical nature of intelligence. Graph optimization automatically improves nodes and edges. arxiv.org/abs/2402.16823 github.com/metauto-ai/GPT… gptswarm.org

It was great to be part of this work and to present it at the #NeurIPS23 R0-FoMo workshop. Congrats to @MingchenZhuge, @oneDylanAshley, and all the other authors!

Best paper award for "Mindstorms in Natural Language-Based Societies of Mind" at #NeurIPS2023 WS R0-FoMo. Up to 129 foundation models collectively solve practical problems by interviewing each other in monarchical or democratic societies arxiv.org/abs/2305.17066

Excited to announce the 𝐁𝐞𝐬𝐭 𝐏𝐚𝐩𝐞𝐫 𝐀𝐰𝐚𝐫𝐝𝐬 (2 papers) and 𝐇𝐨𝐧𝐨𝐫𝐚𝐛𝐥𝐞 𝐌𝐞𝐧𝐭𝐢𝐨𝐧𝐬 (2 more papers) for our NeurIPS workshop R0-FoMo: Robustness of Few-shot & Zero-shot Learning in Foundation Models 🎉 Please join us in congratulating the authors 👏

Excited to present “Contrastive Training of Complex-valued Autoencoders for Object Discovery” at #NeurIPS2023. TL;DR -- We introduce architecture changes and a new contrastive training objective that greatly improve the state-of-the-art synchrony-based model. Explainer thread 👇: