Cambridge MLG

@CambridgeMLG

Machine Learning Group @Cambridge_Uni

@NeurIPSConf, why take the option to provide figures in the rebuttals away from the authors during the rebuttal period? Grounding the discussion in hard evidential data (like plots) makes resolving disagreements much easier for both the authors and the reviewers. Left: NeurIPS…

You can now apply for a Professor position in our group!

There is an opening for a University Assistant Professor in Machine Learning to be based at @CambridgeMLG jobs.cam.ac.uk/job/49361/ Apply!

The SPIGM Workshop is back at @NeurIPSConf with an exciting new theme at the intersection of probabilistic inference and modern AI models! We welcome submissions on all topics related to probabilistic methods and generative models. Looking forward to your contributions!

🌞🌞🌞 The third Structured Probabilistic Inference and Generative Modeling (SPIGM) workshop is **back** this year with @NeurIPSConf in San Diego! In the era of foundation models, we focus on a natural question: is probabilistic inference still relevant? #NeurIPS2025

Check out my posters today if you're at ICML! 1) Detecting high-stakes interactions with activation probes — Outstanding paper @ Actionable interp workshop, 10:40-11:40 2) LLMs’ activations linearly encode training-order recency — Best paper runner up @ MemFM workshop, 2:30-3:45

Excited to share our ICML 2025 paper: "Scalable Gaussian Processes with Latent Kronecker Structure" We unlock efficient linear algebra for your kernel matrix which *almost* has Kronecker product structure. Check out our paper here: arxiv.org/abs/2506.06895

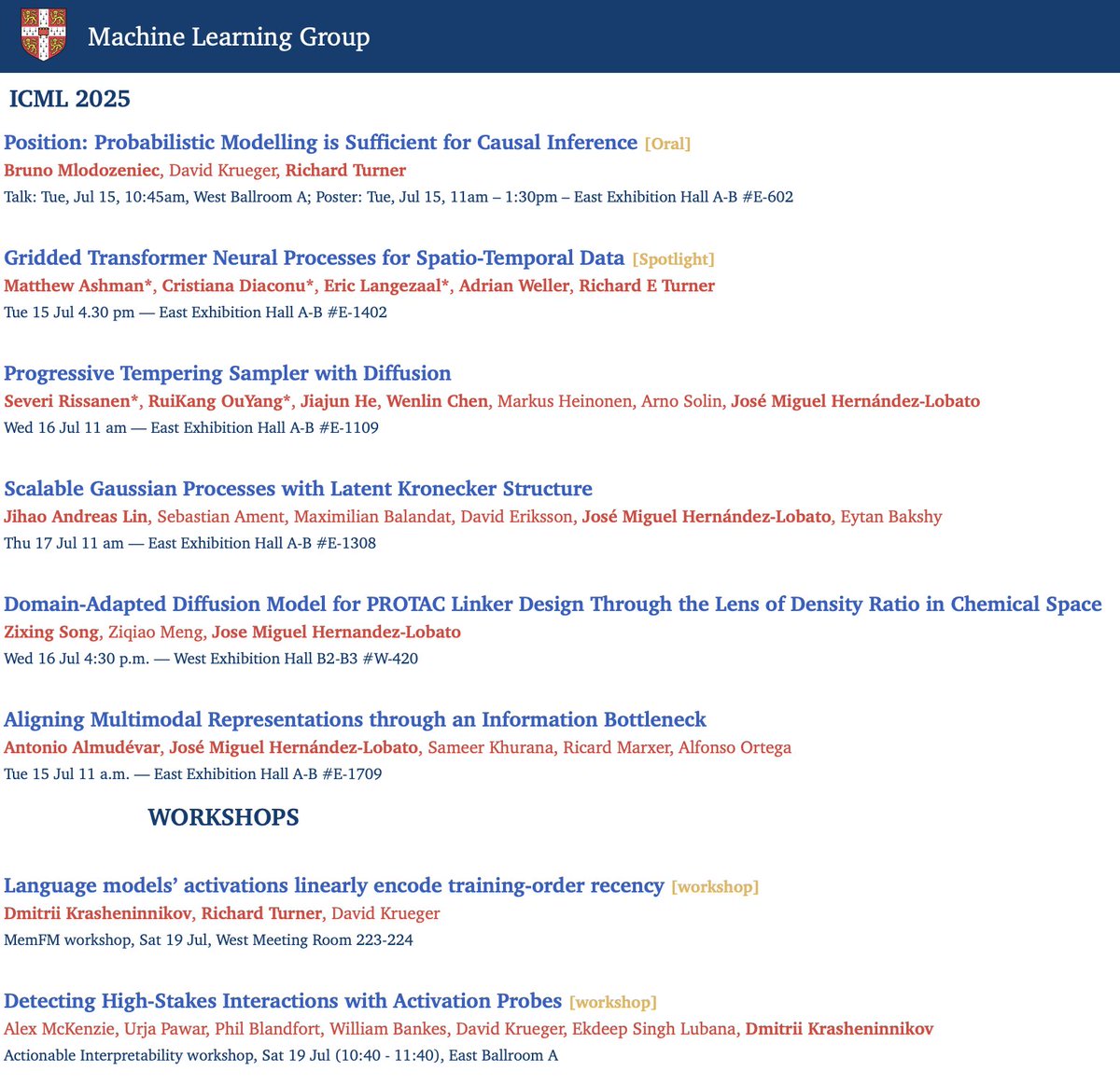

Come check out the MLG papers at #ICML2025 in Vancouver! The schedule for all the posters is below ⬇️

You don't need bespoke tools for causal inference. Probabilistic modelling is enough. I'll be making this case (and dodging pitchforks) at our ICML oral presentation tomorrow.

Excited to share our new paper accepted by ICML 2025 👉 “PTSD: Progressive Tempering Sampler with Diffusion”, which aims to make sampling from unnormalised densities more efficient than state-of-the-art methods like parallel tempering. Check our threads below 👇

I will likely be looking for students at the University of Montreal / Mila to start January 2026. The deadline to apply is September 1, 2025. I will share more details later, but wanted to start getting it on people's radar!

[📜1/9] Does machine unlearning truly erase data influence? Our new paper reveals a critical insight: 'forgotten' information often isn't gone—it's merely dormant, and easily recovered by fine-tuning on just the retain set.

New revision of “BNEM: A Boltzmann Sampler Based on Bootstrapped Noised Energy Matching” 🚀 (1/6) We introduce NEM and BNEM: diffusion & energy-based neural samplers for Boltzmann distributions. 📝 Read our paper at arxiv.org/pdf/2409.09787