Agentica Project

@Agentica_

Building generalist agents that scale @BerkeleySky

🚀 Introducing DeepSWE 🤖: our fully open-sourced, SOTA software engineering agent trained purely with RL on top of Qwen3-32B. DeepSWE achieves 59% on SWEBench-Verified with test-time scaling (and 42.2% Pass@1), topping the SWEBench leaderboard for open-weight models. 💪DeepSWE…

Excited to introduce DeepSWE-Preview, our latest model trained in collaboration with @Agentica_. Using only RL, we increase the performance of Qwen3-32B from 23% to 42.2% on SWE-Bench Verified!

Let's give a big round of applause for an amazing open-source release! They're not just sharing the model's weights; they're open-sourcing everything: the model itself, the training code (rLLM), the dataset (R2EGym), and the training recipe for complete reproducibility. 👏👏👏👏

🚀 Introducing rLLM: a flexible framework for post-training language agents via RL. It's also the engine behind DeepSWE, a fully open-sourced, state-of-the-art coding agent. 🔗 GitHub: github.com/agentica-proje… 📘 rLLM: pretty-radio-b75.notion.site/rLLM-A-Framewo… 📘 DeepSWE: pretty-radio-b75.notion.site/DeepSWE-Traini…

The first half of 2025 is all about reasoning models. The second half? It’s about agents. At Agentica, we’re thrilled to launch two major releases: 1. DeepSWE, our SOTA coding agent trained with RL that tops the SWEBench leaderboard for open-weight models. 2. rLLM, our agent…

We believe in experience-driven learning in the SKY lab. Hybrid verification plays an important role.

🚀 Introducing DeepSWE: Open-Source SWE Agent We're excited to release DeepSWE, our fully open-source software engineering agent trained with pure reinforcement learning on Qwen3-32B. 📊 The results: 59% on SWE-Bench-Verified with test-time scaling (42.2% Pass@1) - new SOTA…

🚀 The era of overpriced, black-box coding assistants is OVER. Thrilled to lead the @Agentica_ team in open-sourcing and training DeepSWE, a SOTA software engineering agent trained end-to-end with @deepseek_ai -like RL on Qwen3-32B, hitting 59% on SWE-Bench-Verified and topping the…

Announcing DeepSWE 🤖: our fully open-sourced, SOTA software engineering agent trained purely with RL on top of Qwen3-32B. DeepSWE achieves 59% on SWEBench-Verified with test-time scaling (and 42.2% Pass@1), topping the SWEBench leaderboard for open-weight models. Built in…

It's easy to confuse Best@K vs Pass@K—and we've seen some misconceptions about our results. Our 59% on SWEBench-Verified is Pass@1 with Best@16, not Pass@8/16. Our Pass@8/16 is 67%/71%. So how did we achieve this? DeepSWE generates N candidate solutions. Then, another LLM…
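
The distinction in the post above can be illustrated with a minimal sketch. It assumes that Pass@K counts a task as solved if any of the K candidates passes, and that Best@K counts it as solved only if the single candidate chosen by a selector passes. The selector scores and the toy task data below are hypothetical and only for illustration; the post is truncated before it describes how DeepSWE actually picks a candidate.

```python
from typing import Sequence


def pass_at_k(passed: Sequence[bool]) -> bool:
    """Solved if ANY of the K candidates passes the tests (oracle selection)."""
    return any(passed)


def best_at_k(passed: Sequence[bool], scores: Sequence[float]) -> bool:
    """Solved only if the ONE candidate with the highest selector score passes."""
    chosen = max(range(len(passed)), key=lambda i: scores[i])
    return passed[chosen]


# Hypothetical toy data: (test outcome per candidate, selector score per candidate).
tasks = [
    ([False, True, False], [0.2, 0.9, 0.1]),   # selector picks a passing candidate
    ([True, False, False], [0.3, 0.8, 0.1]),   # selector picks a failing candidate
    ([False, False, False], [0.5, 0.4, 0.6]),  # no candidate passes
]

pass_rate = sum(pass_at_k(p) for p, _ in tasks) / len(tasks)
best_rate = sum(best_at_k(p, s) for p, s in tasks) / len(tasks)

print(f"Pass@3: {pass_rate:.2f}")
print(f"Best@3: {best_rate:.2f}")
```

Under these assumptions Best@K can never exceed Pass@K for the same candidates, since a selector can at most match an oracle. That is consistent with the 59% figure sitting below the quoted Pass@8/16 of 67%/71%.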

Is it malpractice to report SOTA with pass@8 without running other models at pass@8, or is it just standard practice at this point? It's clearly not SOTA if it's behind Devstral in pass@1

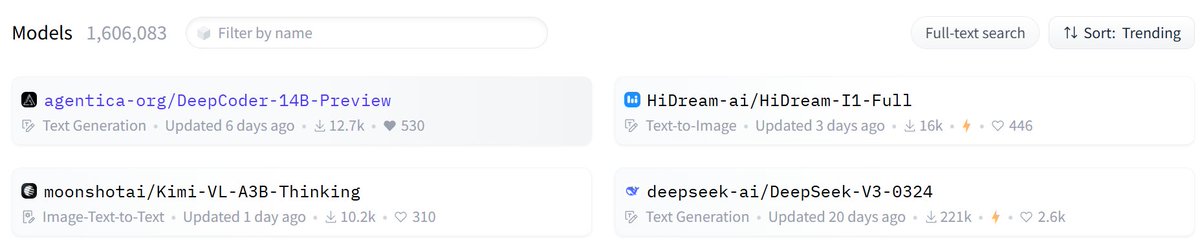

We're trending on @huggingface models today! 🔥 Huge thanks to our amazing community for your support. 🙏

UC Berkeley open-sourced a 14B model that rivals OpenAI o3-mini and o1 on coding! They applied RL to DeepSeek-R1-Distill-Qwen-14B on 24K coding problems. It only cost 32 H100s for 2.5 weeks (~$26,880)! It's truly open-source. They released everything: the model, training…
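
As a quick check on the cost figure in the post above, the arithmetic can be worked backwards. The post gives only the GPU count, the duration, and the total; the hourly rate below is derived from those three numbers and is an inference, not something the post states. The sketch also assumes all 32 GPUs ran continuously for the full 2.5 weeks.

```python
# Figures quoted in the post.
gpus = 32
weeks = 2.5
quoted_cost_usd = 26_880

# Assumption: every GPU is busy 24 hours a day, 7 days a week.
gpu_hours = gpus * weeks * 7 * 24

# Implied price per GPU-hour (derived, not stated in the post).
implied_rate_usd = quoted_cost_usd / gpu_hours

print(f"GPU-hours: {gpu_hours:,.0f}")
print(f"Implied rate: ${implied_rate_usd:.2f} per H100-hour")
```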

Our team has open sourced our reasoning model that reaches o1 and o3-mini level on coding and math: DeepCoder-14B-Preview.

Introducing DeepCoder-14B-Preview - our fully open-sourced reasoning model reaching o1 and o3-mini level on coding and math. The best part is, we’re releasing everything: not just the model, but the dataset, code, and training recipe—so you can train it yourself!🔥 Links below: