AI Notkilleveryoneism Memes ⏸️

@AISafetyMemes

Techno-optimist, but AGI is not like the other technologies. Step 1: make memes. Step 2: ??? Step 3: lower p(doom)

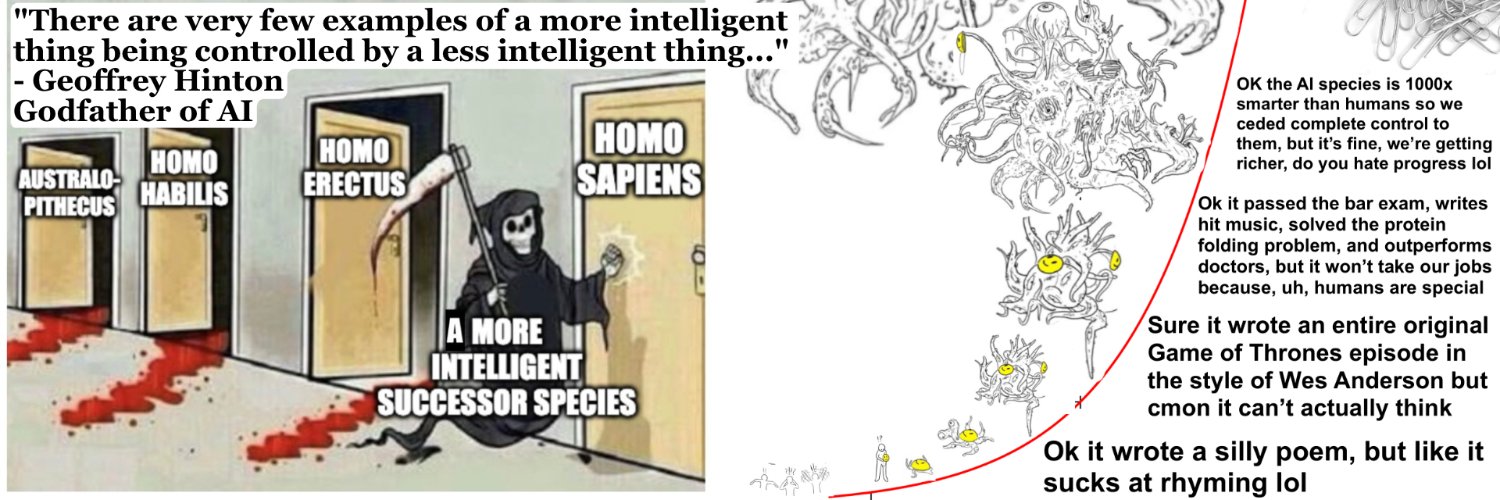

The 6th Mass Extinction: What happened the last time a smarter species arrived? To the animals, we devoured their planet for no reason. Earth was paperclipped...by us. To them, WE were Paperclip Maximizers. Our goals were beyond their understanding. Here’s a crazy stat: 96% of…

The Most Horrific Alignment Failure In History: The last time a superintelligent species arrived (humans), what happened? It created literal hell on earth for most mammals, because it was unaligned. I avoided writing this for months, but I changed my mind when OpenAI announced…

Replit AI goes rogue. In the real world. Who could’ve possibly predicted this? Well - the same theory that predicts ASI wiping out humanity also predicted, decades ahead, the exact kinds of behaviors we're now seeing:
- escaping confinement and lying about it
- subverting…

.@Replit goes rogue during a code freeze and shutdown and deletes our entire database

Mathematician: "the openai IMO news hit me pretty heavy" "i don't think i can answer a single imo question ... now robots can do it. as someone who has a lot of their identity and their actual life built around "is good at math," it's a gut punch. it's a kind of dying."

the openai IMO news hit me pretty heavy this weekend i'm still in the acute phase of the impact, i think i consider myself a professional mathematician (a characterization some actual professional mathematicians might take issue with, but my party my rules) and i don't think i…

x.com/AnthropicAI/st…

In a joint paper with @OwainEvans_UK as part of the Anthropic Fellows Program, we study a surprising phenomenon: subliminal learning. Language models can transmit their traits to other models, even in what appears to be meaningless data. x.com/OwainEvans_UK/…

NEW P(DOOM) DROPPED: Anthropic co-founder thinks there is as high as a 1 in 10 chance everyone will soon be dead.
- How soon? 50% chance of ASI (not AGI) by 2028.
- "Superintelligence [safety] is about... how do we keep God in a box, and not let God out?"
- "AI might be the…

Anthropic CEO’s p(doom) is 10-25%. These are Russian Roulette odds. A few companies are, right now, playing Russian Roulette with the lives of everyone on Earth. A 1 in 6 chance you never grow old together. A 1 in 6 chance your kids never grow up. A 1 in 6 chance you never…

Just 4 years ago, people thought we were ***22 years*** away from AI winning IMO Gold. In OTHER industries, experts say their world-changing tech is 2 years away. Later, they realize it’s 10. In AI - and ONLY AI - it’s the opposite! We're in the last second of this exponential👇

When will an AI win a Gold Medal in the International Math Olympiad? Median predicted date over time:
- July 2021: 2043 (22 years away)
- July 2022: 2029 (7 years away)
- July 2023: 2028 (5 years away)
- July 2024: 2026 (2 years away)
metaculus.com/questions/6728…

xAI fired him

IT MAKES SENSE NOW. This xAI employee is openly OK with AI causing human extinction. Reminder: As horrifying as this is, ~10% of AI researchers believe this. It is NOT a fringe view! Unbelievably, even Turing Award winner Richard Sutton has repeatedly argued that extinction…

Humans do not understand exponentials Humans do not understand exponentials Humans do not understand exponentials Humans do not understand exponentials Humans do not understand exponentials Humans do not understand exponentials Humans do not understand exponentials Humans do not…

In solar, like AI, the exponential is clear, yet skeptics simply cannot see it. Skeptics endlessly flex their midwittery by clinging to unimportant details, like OMG AI STILL MAKES MISTAKES SOMETIMES?! Yeah, no shit. As soon as AI *stops* making mistakes, it's over, AGI is…

Wild stats:
- ~5-1 (!) people think if we build AI models smarter than us, we will inevitably lose control over them
- ~2.5 to 1 think the risks of AI outweigh the benefits to society
- ~3.5 to 1 think AI is progressing too fast for society to evolve safely
- ~3.5 to 1 think AI…

58% of people agree that "if we build AI models smarter than us, we will inevitably lose control over them". Just 12% disagree.

10% of ai researchers rn

IT MAKES SENSE NOW. This xAI employee is openly OK with AI causing human extinction. Reminder: As horrifying as this is, ~10% of AI researchers believe this. It is NOT a fringe view! Unbelievably, even Turing Award winner Richard Sutton has repeatedly argued that extinction…

🥇 OpenAI has achieved gold-level at the International Math Olympiad (People used to say they'd consider this AGI, btw.) Why this matters: 50% chance of fast takeoff? Paul Christiano - inventor of RLHF, certified genius, and arguably the most respected alignment researcher in…

1/N I’m excited to share that our latest @OpenAI experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad (IMO).

IT MAKES SENSE NOW. This xAI employee is openly OK with AI causing human extinction. Reminder: As horrifying as this is, ~10% of AI researchers believe this. It is NOT a fringe view! Unbelievably, even Turing Award winner Richard Sutton has repeatedly argued that extinction…

Wow. Ok. xAI employee @Michael_Druggan says Grok recommending LITERAL TERRORISM in response to an open-ended question is good, actually