Shuangfei Zhai

@zhaisf

Machine Learning Research @ Apple

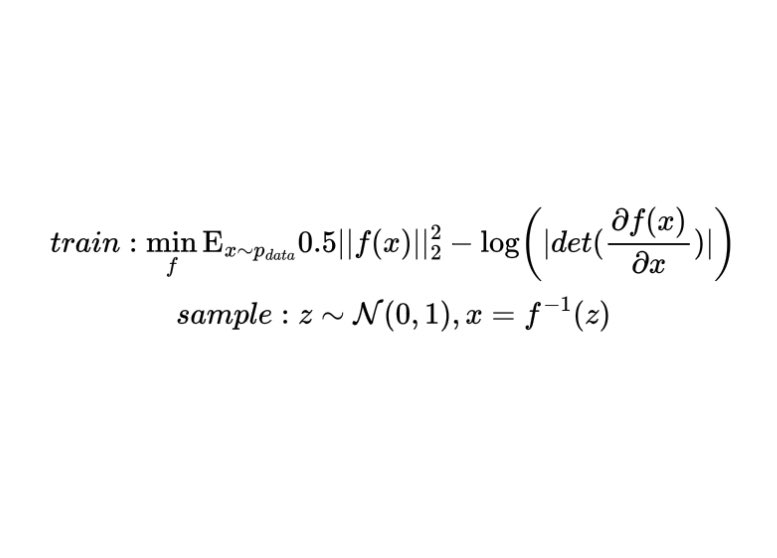

We attempted to make Normalizing Flows work really well, and we are happy to report our findings in paper arxiv.org/pdf/2412.06329, and code github.com/apple/ml-tarfl…. [1/n]

![zhaisf's tweet image](https://pbs.twimg.com/media/GedfCo_aEAIJUtL.jpg)

Normalizing Flows explained in terms of catching rabbits👇 There is a dense cluster of steep hills (figure 1), and at the top of each hill lives a rabbit. Catching rabbits here is very difficult, because you’d have to climb up and down and sometimes you may get lost in the valleys.…

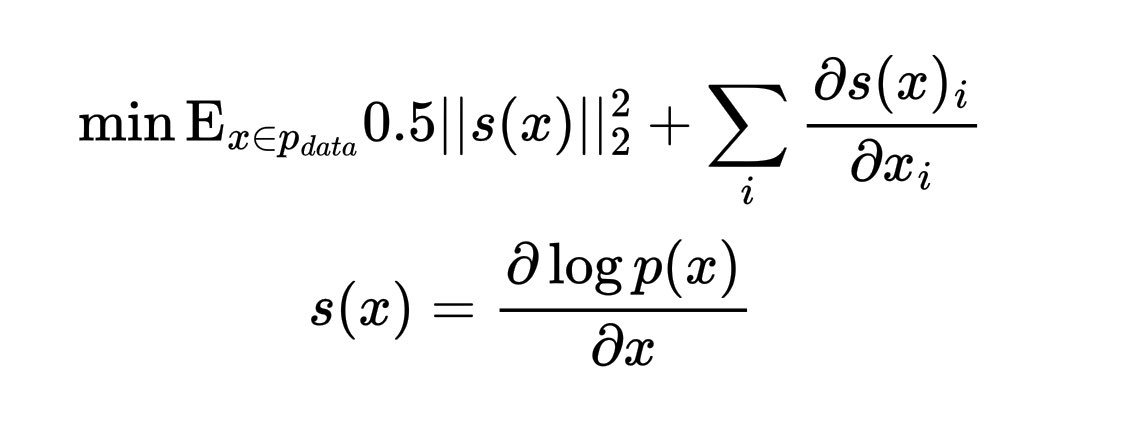

Score matching has become a popular concept, but its original form (often referred to as implicit SM) as proposed in the JMLR 2005 paper is rarely brought up nowadays. I first came across SM during my PhD around 2015, and was immediately drawn into it. I thought that it could be…

On a related note, I also like to think of weight decay (in the AdamW style, not Adam + L2 regularization) as part of the LR schedule. The basic logic is: more WD -> smaller weight norm -> larger relative update with a fixed LR == increased LR. And the regularization benefit from…

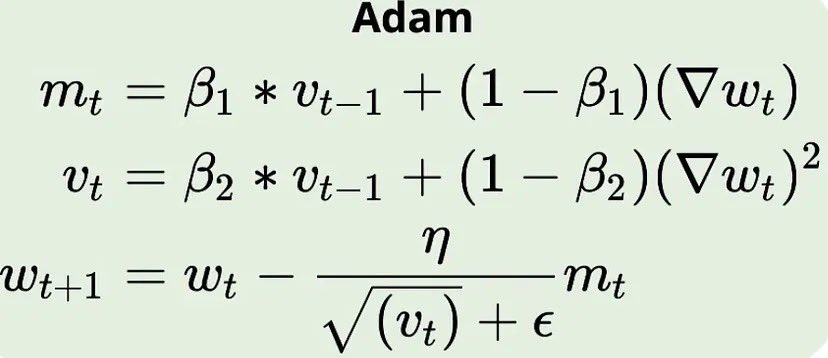
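
The chain "more WD -> smaller weight norm -> larger relative update" in the weight decay post can be checked with a few lines of arithmetic. The sketch below is not from the post; it assumes a deliberately simplified model in which every optimizer step has unit norm and is uncorrelated with the weights (a rough stand-in for a normalized Adam-style step), and the function name `steady_state_relative_update` is hypothetical. Under that assumption the squared weight norm follows a simple recursion, and the relative update settles near sqrt(2 * lr * wd), so raising WD behaves like raising the LR.

```python
import math

def steady_state_relative_update(lr, wd, steps=200_000, init_sq_norm=1.0):
    """Relative update size lr / ||w|| after many decoupled-weight-decay steps.

    Assumption (illustrative only): each step is w <- (1 - lr*wd) * w - lr * u,
    where u has unit norm and is uncorrelated with w, so the expected squared
    norm obeys  n <- (1 - lr*wd)**2 * n + lr**2.
    """
    sq_norm = init_sq_norm
    for _ in range(steps):
        # Decay shrinks the norm; the uncorrelated unit step adds lr**2 to it.
        sq_norm = (1.0 - lr * wd) ** 2 * sq_norm + lr ** 2
    return lr / math.sqrt(sq_norm)

lr = 0.01
for wd in (0.01, 0.1, 1.0):
    rel = steady_state_relative_update(lr, wd)
    # Closed-form approximation of the fixed point when lr*wd is small.
    approx = math.sqrt(2.0 * lr * wd)
    print(f"wd={wd:<5} relative update={rel:.5f}  sqrt(2*lr*wd)={approx:.5f}")
```

With the LR held fixed, each 10x increase in WD raises the steady-state relative update by roughly sqrt(10) in this toy model, which is one way to read weight decay as part of the LR schedule.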

We all know about learning rate schedules, but how come scheduling the momentum terms (ie, betas in Adam) is not a thing? A few years back, I was working on a problem that involves an image reconstruction loss ||f(x) - y||^2. In order to make the output look nice, a typical…

Congratulations to @DaniloJRezende and Shakir Mohamed! This is how I first learned about normalizing flows. Their paper on VAEs could/should have also gotten a nod.

#ICML2025 test of time award

Normalizing Flows are coming back to life. I'll be attending #ICML2025 on Jul 17 to present TarFlow -- with the Oral presentation at 4:00 PM in West Ballroom A and the poster at 4:30 PM in East Exhibition Hall A-B #E-2911. Stop by and I promise it will be worth your time.

Small plug, not really advertised but we similarly showed how to perform temperature based control and composition of separately trained diffusion models via SMC and the Feynman Kac model formalism, with score distillation of the energy at AISTATS last year - Diversity control…

Why do we keep sampling from the same distribution the model was trained on? We rethink this old paradigm by introducing Feynman-Kac Correctors (FKCs) – a flexible framework for controlling the distribution of samples at inference time in diffusion models! Without re-training…

Feel free to drop by our talks at: June 11 Morning (202 B): vision-x-nyu.github.io/scalable-visio… June 11 Afternoon (Grand A2): generative-vision.github.io/workshop-CVPR-… June 12 Afternoon (103 A): vgm-cvpr.github.io

I will be attending #CVPR2025 and presenting our latest research at Apple MLR! Specifically, I will present our highlight poster--world consistent video diffusion (cvpr.thecvf.com/virtual/2025/p…), and three invited workshop talks, which include our recent preprint ★STARFlow★! (0/n)

STARFlow: Scaling Latent Normalizing Flows for High-resolution Image Synthesis "We present STARFlow, a scalable generative model based on normalizing flows that achieves strong performance on high-resolution image synthesis"

Yet another new paper that tries the exact same Jacobi iteration idea! I guess good ideas are all similar 😅 arxiv.org/abs/2505.24791.

TarFlow has great training efficiency, but its sampling can be slow. This new paper applies (training free) Jacobi iteration to TarFlow and achieves up to 5x sampling speedup without loss of quality. Very promising results! arxiv.org/abs/2505.12849. Bonus point, they also open…
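
To make the Jacobi idea concrete: sampling from an autoregressive flow means inverting a causal map one position at a time, and Jacobi iteration instead updates every position at once from the previous guess until nothing changes. The sketch below is a toy illustration only, not TarFlow's code or the method of the linked paper. It assumes a scalar affine autoregressive transform, and `conditioner`, `forward`, `invert_sequential` and `invert_jacobi` are hypothetical names, with a hand-written function standing in for the causal network.

```python
import math

def conditioner(prefix):
    """Toy stand-in for a causal network: maps x_{<t} to (mu, alpha)."""
    if not prefix:
        return 0.0, 0.0
    s = sum(prefix)
    return 0.5 * s / len(prefix), 0.1 * math.tanh(s)

def forward(x):
    """Data -> noise: z_t = (x_t - mu_t) * exp(-alpha_t), with x fully known."""
    z = []
    for t in range(len(x)):
        mu, alpha = conditioner(x[:t])
        z.append((x[t] - mu) * math.exp(-alpha))
    return z

def invert_sequential(z):
    """Noise -> data, one position at a time (the slow baseline)."""
    x = []
    for t in range(len(z)):
        mu, alpha = conditioner(x)
        x.append(z[t] * math.exp(alpha) + mu)
    return x

def invert_jacobi(z, max_iters=None, tol=1e-10):
    """Noise -> data by fixed-point iteration over all positions at once.

    Each sweep recomputes every x_t from the previous iterate's prefix, so the
    inner loop over t has no dependency between positions and could run in
    parallel. Position t is exact after t + 1 sweeps, so len(z) sweeps suffice;
    stopping early once the iterate stops changing is where a speedup can arise.
    """
    T = len(z)
    max_iters = T if max_iters is None else max_iters
    x = list(z)  # initial guess
    for k in range(max_iters):
        new_x = []
        for t in range(T):
            mu, alpha = conditioner(x[:t])  # uses the OLD iterate only
            new_x.append(z[t] * math.exp(alpha) + mu)
        delta = max(abs(a - b) for a, b in zip(new_x, x))
        x = new_x
        if delta < tol:
            return x, k + 1
    return x, max_iters
```

Calling `invert_jacobi(z)` returns the reconstructed sequence together with the number of sweeps used. Whether early stopping actually saves sweeps depends on how strongly each position depends on its prefix, which this toy conditioner does not try to model realistically.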

Cool new paper "Normalizing Flows are Capable Models for RL" -- delivers the same message as our TarFlow paper did but demonstrates it in the RL domain!

Normalizing Flows (NFs) check all the boxes for RL: exact likelihoods (imitation learning), efficient sampling (real-time control), and variational inference (Q-learning)! Yet they are overlooked over more expensive and less flexible contemporaries like diffusion models. Are NFs…