Zach Koch

@zachk

cofounder & ceo @ultravox_dot_ai // making AIs communicate like humans // jack of some trades

Incredibly excited for this one! The team has worked incredibly hard over the last couple of months to not just bridge the gap with OpenAI, but actually exceed when it comes to speech understanding. Small teams can do amazing shit.

Today we're releasing Ultravox v0.5, the next iteration of our open-weight speech language model With this release, we've closed the gap with proprietary models. Ultravox now outperforms GPT-4o Realtime & Gemini 1.5 Flash on key benchmarks for speech understanding 🧵

I now write prompts like I used to write css, dropping !important all over the place

We're seeing more and more evidence of situations where @ultravox_dot_ai's speech understanding is considerably better than every other ASR system. This has been out bet for a while, but it's great to see the evidence piling up. Blog post soon!

What a statement about Llama 4 that Maverick (400B total params, 128 experts, 17B active) is beaten by the new Gemma 3n (4B params) model from Google

Cool cool that @googlecloud is experiencing massive downtime but their status page says everything is fine

I love @ClerkDev, but they really need a solution for the BS gmail problem. This type of fraud feels fairly obvious? FWIW, these are people that are trying to abuse our 30-min of free talk time

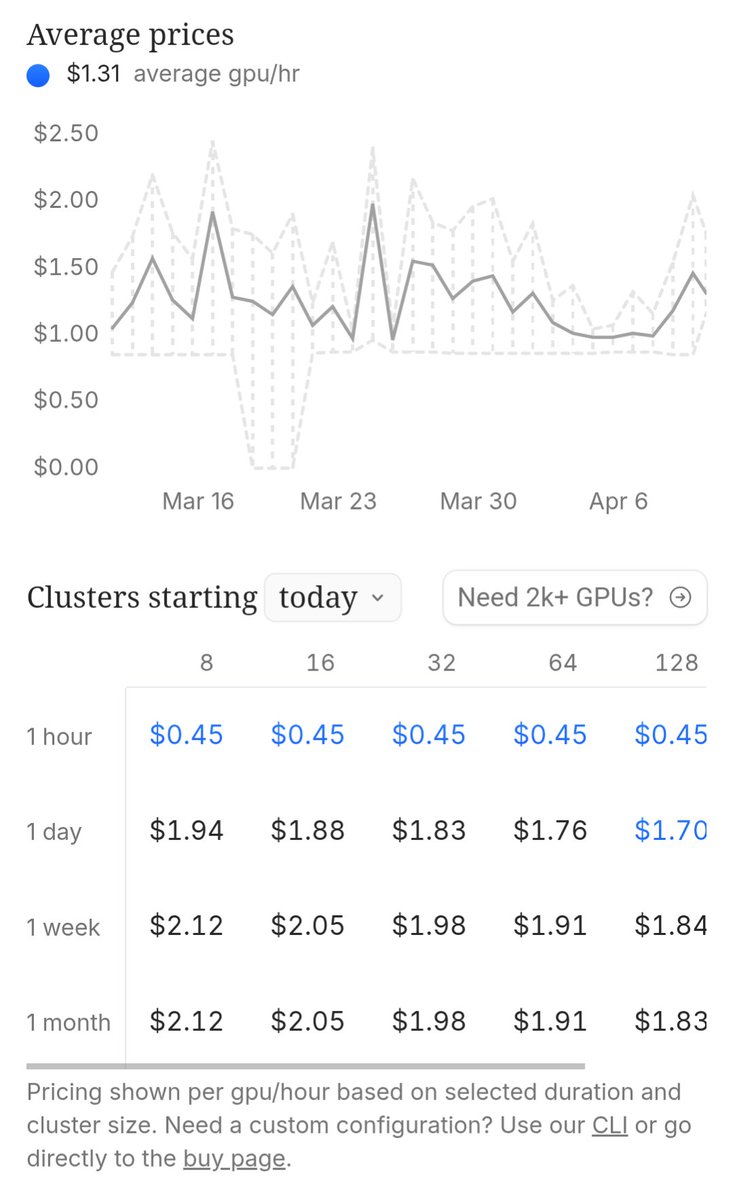

This is something we've been working on for a while! If you're building on Voice AI, reliable scaling is key. Unlike most voice platforms, we manage our own fleet of H100s optimized for one thing: real-time voice AI. For only $100/month, say goodbye to hard concurrency caps.

We just launched new subscription plans. The highlight? 𝗨𝗻𝗹𝗶𝗺𝗶𝘁𝗲𝗱 𝗰𝗼𝗻𝗰𝘂𝗿𝗿𝗲𝗻𝗰𝘆 for all paid plans. No caps. No surge pricing. Scale is built in. ultravox.ai/pricing

Vibe coding is simultaneously the most amazing and the most frustrating experience.

I may be dumb, but the NYT's request for @OpenAI to to save all logs...makes sense? It's not as though one can simply open the model and say, "ah-ha! there's the copyright infringement!" Infringement only takes place at output time. To demonstrate that, you have to store output

Has anyone had success with Llama 4 yet? It's increasingly looking like a pretty big disappointment, even when compared with Llama 3.3. (Ultravox's current prod model is 3.3, and we've done a LOT of work to make it usable. But Llama 4 is looking just unusable at this point)

I fear Idiocracy may have gotten the future correct but the cause wrong

Prompt engineering is hard because good, clear writing is hard. Most people are bad at this, and it takes a lot of time & energy to be good at this.

Anyone building with LLMs knows that model performance degrades in a multi-turn conversation, but I haven't seen much formal analysis until now: arxiv.org/pdf/2505.06120 Great work from @PhilippeLaban et al in putting this together. Now to fix it...

Ultravox is an innovative open source LLM that processes speech directly without converting audio to text. Fusing audio understanding into the first stage of the LLM has a number of benefits, including improved inference latency. Cerebrium write-up and code repo:…

It's no wonder that platforms like Lovable and Bolt.new are scaling ARR so fast. You get sucked in with an amazing rough concept very quickly (that doesn't work but looks close!) and then need to spend a ton of time + tokens to try and get it working fully.