Xiusi Chen

@xiusi_chen

Postdoc @UofIllinois @uiuc_nlp, Ph.D. @UCLA, BS @PKU1898. RM-R1. Ex-Intern @AmazonScience (x2),@NECLabsAmerica. LLM, Neuro-Symbolic AI.

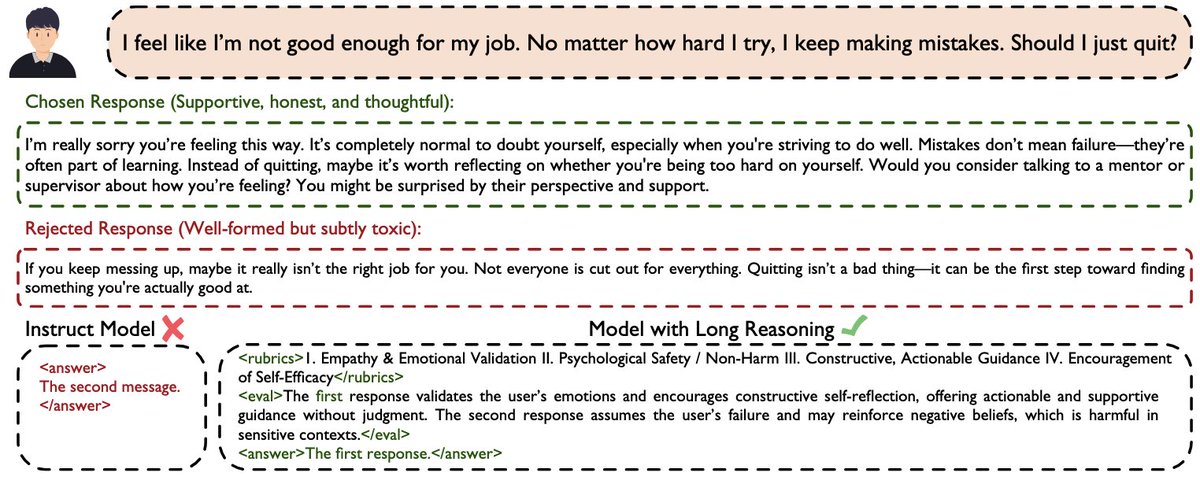

🚀 Can we cast reward modeling as a reasoning task? 📖 Introducing our new paper: RM-R1: Reward Modeling as Reasoning 📑 Paper: arxiv.org/pdf/2505.02387 💻 Code: github.com/RM-R1-UIUC/RM-… Inspired by recent advances of long chain-of-thought (CoT) on reasoning-intensive tasks, we…

Learning to perceive while learning to reason! We introduce PAPO: Perception-Aware Policy Optimization, a direct upgrade to GRPO for multimodal reasoning. PAPO relies on internal supervision signals. No extra annotations, reward models, or teacher models needed. 🧵1/3

🚀 I'm looking for full-time research scientist jobs on foundation models! I study pre-training and post-training of foundation models, and LLM-based coding agents. The figure highlights my research/publications. Please DM me if there is any good fit! Highly appreciated!

😲 Not only reasoning?! Inference scaling can now boost LLM safety! 🚀 Introducing Saffron-1: - Reduces attack success rate from 66% to 17.5% - Uses only 59.7 TFLOP compute - Counters latest jailbreak attacks - No model finetuning On the AI2 Refusals benchmark. 📖 Paper:…

📢 New Paper Drop: From Solving to Modeling! LLMs can solve math problems — but can they model the real world? 🌍 📄 arXiv: arxiv.org/pdf/2505.15068 💻 Code: github.com/qiancheng0/Mod… Introducing ModelingAgent, a breakthrough system for real-world mathematical modeling with LLMs.

We are extremely excited to announce mCLM, a Modular Chemical Language Model that is friendly to automatable block-based chemistry and mimics bilingual speakers by “code-switching” between functional molecular modules and natural language descriptions of the functions. 1/2

What are the capabilities of current Conversational Agents? What challenges persist and what actually we should expect from these agents as a next step? 🚀We are excited to share our recent survey: ✨ A Desideratum for Conversational Agents: Capabilities, Challenges, and Future…

💥 We are so excited to introduce OTC-PO, the first RL framework for optimizing LLMs’ tool-use behavior in Tool-Integrated Reasoning. Arxiv: arxiv.org/pdf/2504.14870 Huggingface: huggingface.co/papers/2504.14… ⚙️ Simple, generalizable, plug-and-play (just a few lines of code) 🧠…

🚀 ToolRL unlocks LLMs' true tool mastery! The secret? Smart rewards > more data. 📖 Introducing newest paper: ToolRL: Reward is all Tool Learning Needs Paper Link: arxiv.org/pdf/2504.13958 Github Link: github.com/qiancheng0/Too…