Vincent Abbott

@vtabbott_

Maker of *those* diagrams for deep learning algorithms | @mit @mitlids incoming PhD

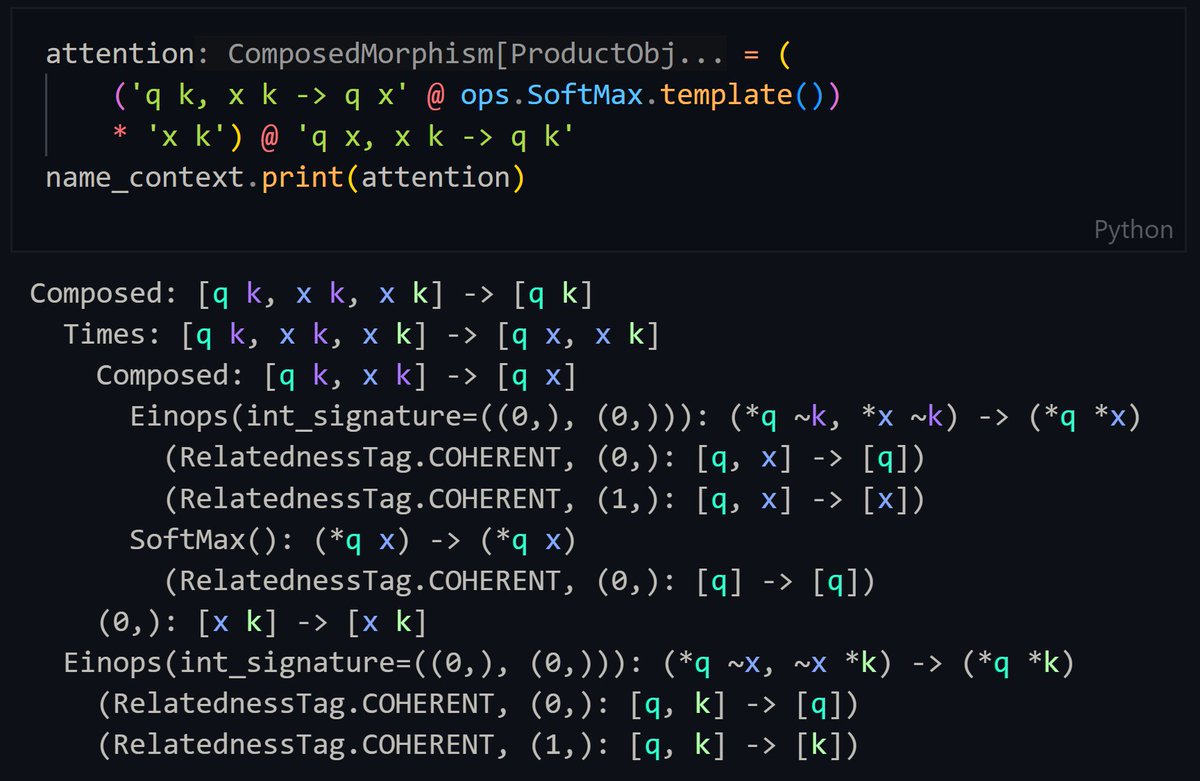

In the categorical deep learning package I'm making, composing operations modifies them by aligning axes. Axes are therefore symbols, and the random uids of these symbols are rendered as colors!

Ok I really need to make a post about why the memory access requirements of AB*BC matrix multiplication is not; AB+BC But is instead, ABC(CacheSize)^(-0.5) And how this is actually quite easy to derive.

I derived a category-theoretic notion of a (CUDA) kernel as a parallelised function that works *shockingly* well, turning fusion into a compositional property. The remaining hurdle is figuring out how to deal with streamable/looped operations.

Spent the last week doing a major refactor to better model when fused GPU operations are possible. Another benefit - here's attention in one line!

Just got the automatic derivation of FlashAttention's performance model to work! Algebraic descriptions and generated diagrams now support low-level kernels + derive memory usage and bandwidth requirements. Compiled fusion for general/non-elementwise operations is up next.

Adding multi-level performance models to diagrams. This will allow performance models of FlashAttention / matmul / distributed MoEs to be dynamically calculated. Colors indicate execution at different levels, and the hexagons indicate a partitioned axis.

Algebraic definition of a transformer which automatically generates configurations, diagrams, torch modules and - now - performance models!

Automatically generated diagram of Transformer + Multi-Layer Perceptron. Python code generates a json, which is loaded by TypeScript and rendered. Axes sizes are stored internally and labelled, allowing for safe deep learning code.

I'll be refactoring the code to allow for texture packs at some point. This is actually a good resource for style choices. The wires are drawn between anchors (shown below), so it should be straightforward to just change the "drawCurves" function.

Working on making automatically generated diagrams *aesthetic*. Here is attention, generated from a mathematical definition. Note how there are multiple k and m values, as the code found that these two values can be independently set.

I'm working on symbolically expressed deep learning models. Built on standard definitions, we can provide a web of features from different modules. One module produces a model, another converts it to PyTorch, another exports it to JSON, and another loads to TypeScript and renders…

The implementations I'm working on are based on novel algebraic/categorical constructs that can–at last–properly represent broadcasting. This will allow deep learning models to be symbolically expressed, from which Torch implementations, diagrams etc follow. Here's a sneak peak!

Making progress with automatically generating diagrams of deep learning models (here's multi-head attention). Next up, automated performance modelling + conversion from PyTorch to data structure that allows for diagram generation + performance modelling.

Recently posted w/ @GioeleZardini and @sgestalt_jp. Diagrams indicate exponents are attention’s bottleneck. We use the fusion theorems to show any normalizer works for fusion and we replace SoftMax with L2, and implement it thanks to @GerardGlow47445! Even w/o warp shuffling TC…

Vincent Abbott, et al.: Accelerating Machine Learning Systems via Category Theory: App... arxiv.org/abs/2505.09326 arxiv.org/pdf/2505.09326 arxiv.org/html/2505.09326