Tyler LaBonte

@tmlabonte

ML PhD student @GeorgiaTech, Math BS @USC. Deep learning theory, generalization, robustness.

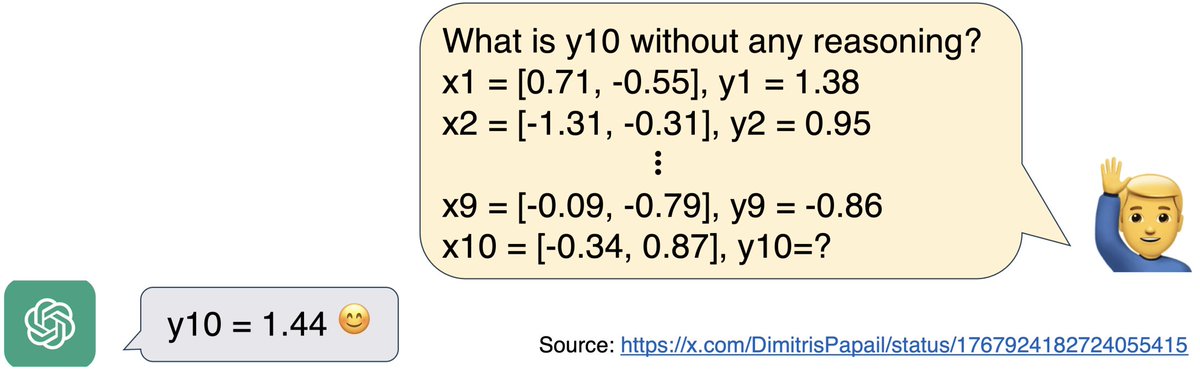

Excited to present at the first #AISTATS2025 poster session on May 3! Ever wondered how LLMs can generalize to new tasks in-context despite only training on token completion? We formalize this phenomenon as "task shift" and investigate a linear version: arxiv.org/abs/2502.13285

Returning to Building 99 for my second internship @MSFTResearch working on multimodal reasoning. Come say hi!

That's a wrap on ICLR/AISTATS! It was a wonderful experience to have deep research discussions in a part of the world I had never been to. Thanks to everyone who stopped by to chat or even just say hi 😎

See you this afternoon (May 5) at Poster 42 in Hall A-E!

Update: our poster has been rescheduled to May 5.

See you all at the ICLR SCSL workshop today in Garnet 214-215!

Heading to #ICLR2025 to present our SCSL workshop paper on understanding how last-layer retraining methods mitigate spurious correlations! openreview.net/pdf?id=B2W51aq… Stop by on Monday, April 28 to chat and learn more 🙂

A very nice new book on learning theory! I like the balance between the classical (Rademacher complexities) and modern (overparameterized models).

My book is (at last) out, just in time for Christmas! A blog post to celebrate and present it: francisbach.com/my-book-is-out/

Scientific investigation of deep learning has never been more important! This type of workshop is always a must-see for me

We need more of *Science of Deep Learning* in the major ML conferences. This year’s @NeurIPSConf workshop @scifordl on this topic is just starting, and I hope it is NOT the last edition!!!

I'll be there! Will you??

📢 Join us at #NeurIPS2024 for an in-person LeT-All mentorship event! 📅 When: Thurs, Dec 12 | 7:30-9:30 PM PST 🔥 What: Fireside chat w/ Misha Belkin (UCSD) on Learning Theory Research in the Era of LLMs, + mentoring tables w/ amazing mentors. Don’t miss it if you’re at NeurIPS!

Happening today in the East Exhibit Hall #4306!

What if common robustness methods could collapse performance in realistic training runs? And what if model scaling could drastically improve robustness under the right conditions? Sharing our #NeurIPS2024 paper on understanding spurious correlations! arxiv.org/abs/2407.13957