Tali Dekel

@talidekel

Associate Professor @ Weizmann Institute, Research Scientist @ Google DeepMind

Thank you @ldvcapital for the recognition and for supporting women in Tech!

Since 2012, we have been showcasing brilliant women whose work in #VisualTech and #AI is reshaping business and society. We are thrilled to feature 120+ women driving today's tech innovations 🚀 ldv.co/blog/women-spe…

Thank you @SagivTech for the opportunity to take part in @IMVC2024, and for keeping our Israeli Vision community alive and kicking during these challenging times!

Best Paper Award @ SIGGRAPH'25 🥳

So much is already possible in image generation that it's hard to get excited. TokenVerse has been a refreshing exception! Disentangling complex visual concepts (pose, lighting, materials, etc.) from a single image — and mixing them across others with plug-and-play ease!

The rollout continues. Veo 3 is now available to Gemini Pro users!

Veo 3 dropped about 100 hours ago, and it's been on 🔥🔥🔥 ever since. Now, we’re excited to announce:
+ 71 new countries have access
+ Pro subscribers get a trial pack of Veo 3 on the web (mobile soon)
+ Ultra subscribers get the highest # of Veo 3 gens w/ refreshes
How to try…

Insane! They certainly convinced me 🤣

Prompt Theory (Made with Veo 3): What if AI-generated characters refused to believe they were AI-generated?

Excited to share that "TokenVerse: Versatile Multi-concept Personalization in Token Modulation Space" got accepted to SIGGRAPH 2025! It tackles disentangling complex visual concepts from as little as a single image and re-composing concepts across multiple images into a coherent…

#CVPR2025 🚀

Understanding the inner workings of foundation models is key for unlocking their full potential. While the research community has explored this for LLMs, CLIP, and text-to-image models, it's time to turn our focus to VLMs. Let's dive in! 🌟 vision-of-vlm.github.io

"Giraffe wearing a neck warmer" 2024: entirely generated video by Veo. 2022: text-driven editing using test-time optimization and CLIP by Text2LIVE. Quite a stride!

Today we launched our Pika 2.0 model. Superior text alignment. Stunning visuals. And ✨Scene Ingredients✨that allow you to upload images of yourself, people, places, and things—giving you more control and consistency than ever before. It’s almost like twelve days worth of gifts…

🔍 Unveiling new insights into Vision-Language Models (VLMs)! In collaboration with @OneViTaDay & @talidekel, we analyzed LLaVA-1.5-7B & InternVL2-76B to uncover how these models process visual data. 🧵 vision-of-vlm.github.io

Working on layered video decomposition for a few years now, I'm super excited to share these results! Casual videos to *fully visible* RGBA layers, even under significant occlusions! Kudos @YaoChihLee, @erika_lu_, Sarah Rumbley, @GeyerMichal, @jbhuang0604, and @forrestercole

Excited to introduce our new paper, Generative Omnimatte: Learning to Decompose Video into Layers, with the amazing team at Google DeepMind! Our method decomposes a video into complete layers, including objects and their associated effects (e.g., shadows, reflections).

Creating a panoramic video out of a casual panning video requires a strong generative motion prior, which we finally have! Kudos @JingweiMa2 and team!

We are excited to introduce "VidPanos: Generative Panoramic Videos from Casual Panning Videos" VidPanos converts phone-captured panning videos into (fully playing) video panoramas, instead of the usual (static) image panoramas. Website: vidpanos.github.io Paper:…

Very cool stuff @pika_labs !!

I think that's my favorite squish it example so far 📊😆

Unlike images, getting customized video data is challenging. Check out how we can customize a pre-trained text-to-video model *without* any video data!

Introducing✨Still-Moving✨—our work from @GoogleDeepMind that lets you apply *any* image customization method to video models🎥 Personalization (DreamBooth)🐶stylization (StyleDrop) 🎨 ControlNet🖼️—ALL in one method! Plus… you can control the amount of generated motion🏃♀️ 🧵👇

Self-supervised representation learning (DINO) + test-time optimization = DINO-Tracker! Achieving SOTA tracking results across long-range occlusions. Congrats @tnarek99 @assaf_singer and @OneViTaDay on the great work! 🦖🦖

Excited to present DINO-Tracker (accepted to #ECCV2024)! A novel self-supervised method for long-range dense tracking in video, which harnesses the powerful visual prior of DINO. Project page: dino-tracker.github.io. [1/4] @assaf_singer @OneViTaDay @talidekel

Thank you @WeizmannScience for featuring our research!

Weizmann’s Dr. @TaliDekel, among the world’s leading researchers in generative AI, focuses on the hidden capabilities of existing large-scale deep-learning models. Her research with Google led to the development of the recently unveiled Lumiere >> bit.ly/reimaging-imag…

Hi friends — I'm delighted to announce a new summer workshop on the emerging interface between cognitive science 🧠 and computer graphics 🫖! We're calling it: COGGRAPH! coggraph.github.io June – July 2024, free & open to the public (all career stages, all disciplines) 🧶

Space-Time Diffusion Features for Zero-Shot Text-Driven Motion Transfer -- accepted to #CVPR2024! Code is available: diffusion-motion-transfer.github.io w/ @DanahYatim @RafailFridman @omerbartal @yoni_kasten

Text2video models are getting interesting!📽️ Check out how we leverage their space-time features in a zero-shot manner for transferring motion across objects and scenes! diffusion-motion-transfer.github.io Led by @DanahYatim @RafailFridman, @yoni_kasten @talidekel [1/3]

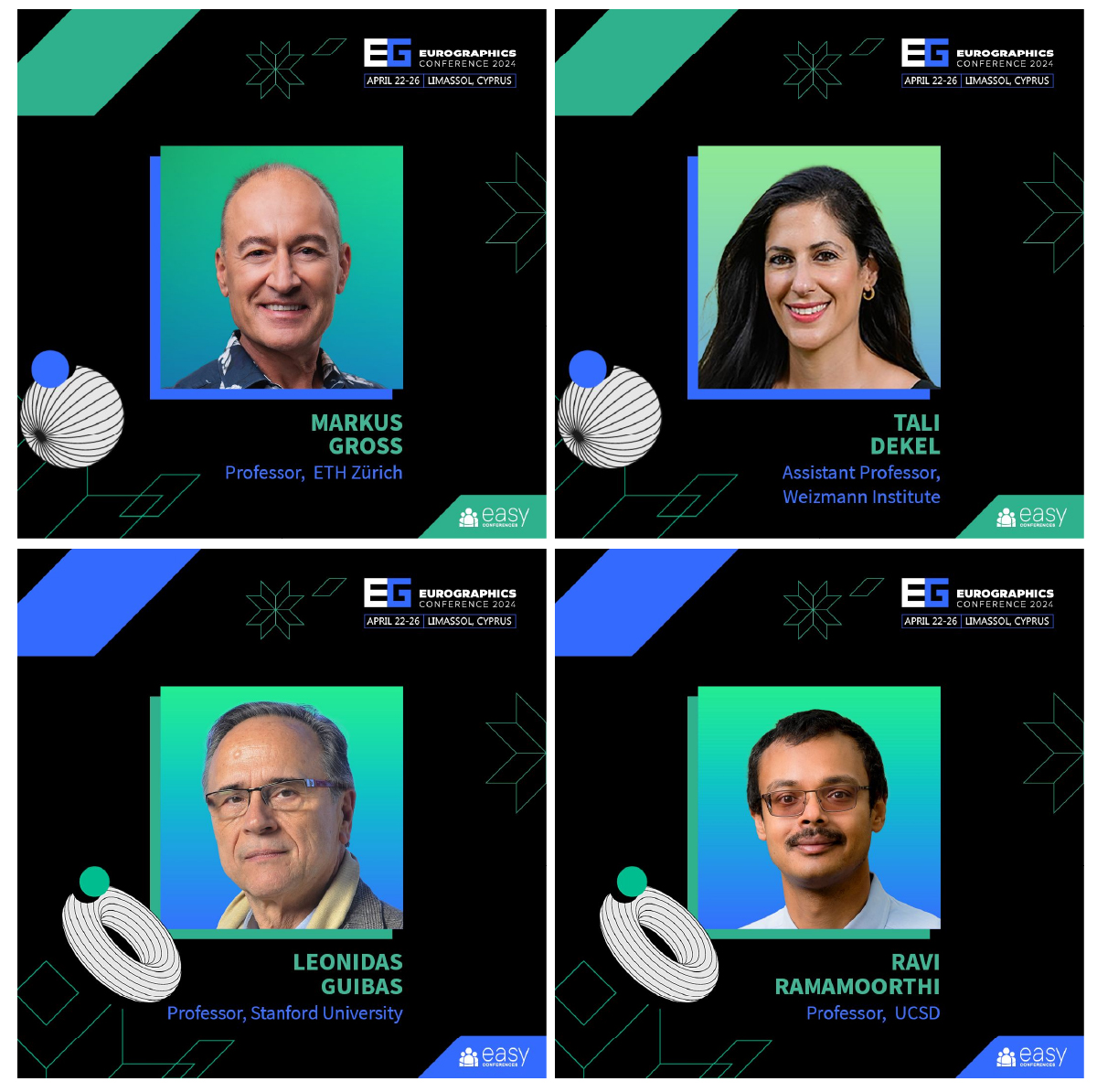

Honored and very excited to speak at EUROGRAPHICS this year next to these legendary researchers! I'll speak about my research journey in the realm of video generation: going from *single-video* models to *all-video* models. eg2024.cyens.org.cy/speakers/

Looking forward to it!

AI for Content Creation workshop is back at #CVPR2024. 21st March paper deadline @ ai4cc.net. Plus stellar speakers: @AaronHertzmann @liuziwei7 @robrombach @talidekel @Jimantha @Diyi_Yang, late-breaking speakers, and a special guest panel!!!