Tal Haklay ✈️ACL

@tal_haklay

NLP | Interpretability | PhD student at the @TechnionLive

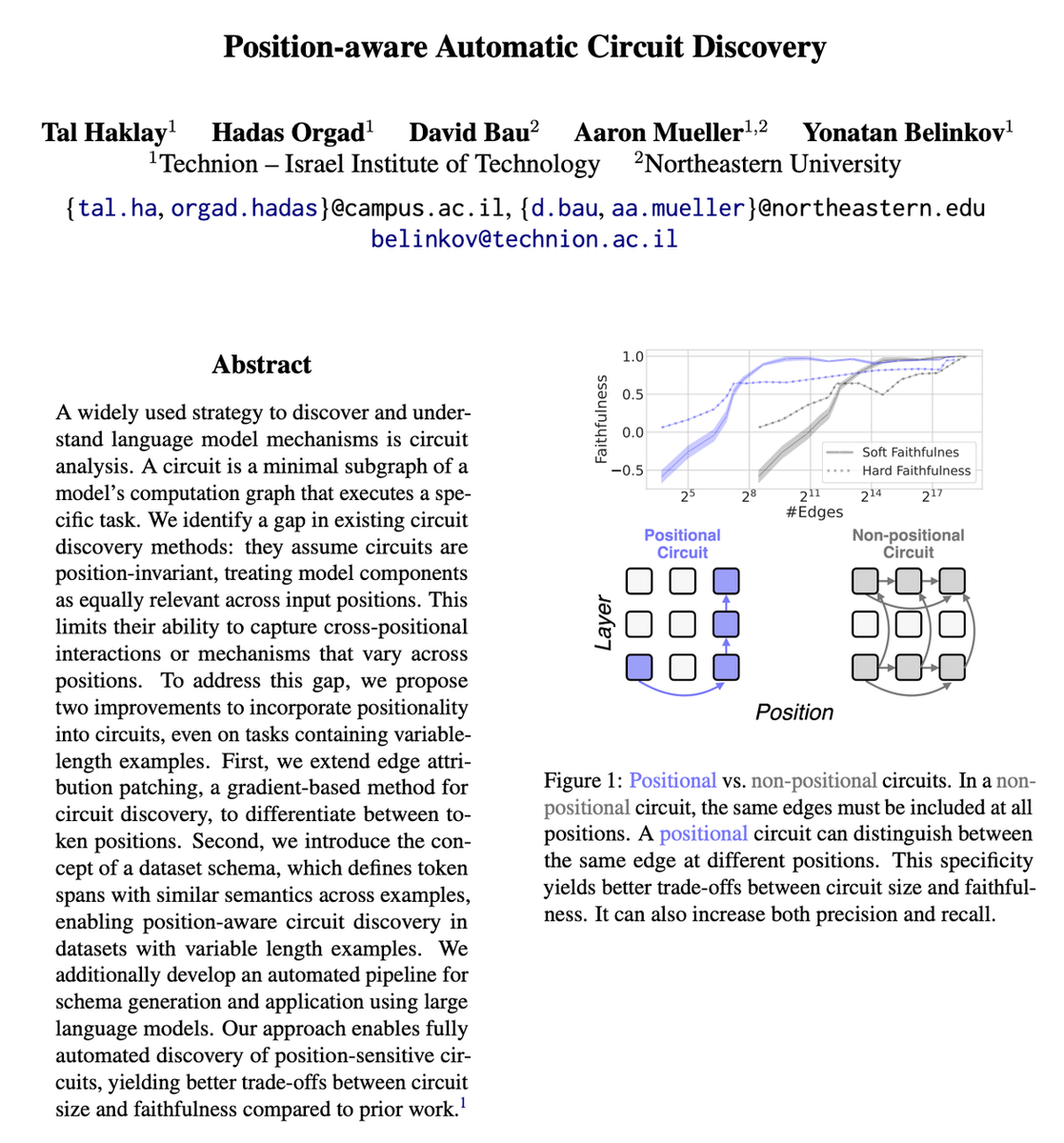

1/13 LLM circuits tell us where the computation happens inside the model—but the computation varies by token position, a key detail often ignored! We propose a method to automatically find position-aware circuits, improving faithfulness while keeping circuits compact. 🧵👇

Many thanks to the @ActInterp organisers for highlighting our work - and congratulations to Pedro, Alex and the other awardees! Sad not to have been there in person, it looked like a fantastic workshop. @AmsterdamNLP @EdinburghNLP

Big congrats to Alex McKenzie, Pedro Ferreira, and their collaborators on receiving Outstanding Paper Awards!👏👏 and thanks for the fantastic oral presentations! Check out the papers here 👇

ICML🛫🛬ACL Next week I’ll be at @aclmeeting, giving an oral presentation about position-aware automatic circuit discovery. DM me if you’d like to chat about interpretability, mech-interp at scale, or just life :)

The first poster session is happening now!

This is amazing 👏🏻👏🏻

maybe I will live tweet the actionable interp workshop panel

Crazy amount of cool work concentrated in one room

🚨The Actionable Interpretability Workshop is happening tomorrow at ICML! Join us for an exciting lineup of speakers, nearly 70 posters, and a great panel discussion 🙌 Don’t miss it! 🔍⚙️ @icmlconf @ActInterp

Hope everyone’s getting the most out of #icml25. We’re excited and ready for the Actionable Interpretability (@ActInterp) workshop this Saturday! Check out the schedule and join us to discuss how we can move interpretability toward more practical impact.

🚨New paper alert🚨 🧠 Instruction-tuned LLMs show amplified cognitive biases — but are these new behaviors, or pretraining ghosts resurfacing? Excited to share our new paper, accepted to CoLM 2025🎉! See thread below 👇 #BiasInAI #LLMs #MachineLearning #NLProc

In a new post, I present: 1. A framework for thinking about which downstream applications interpretability researchers should target 2. Eight concrete problems for practical interpretability work

I'm excited to discuss downstream applications of interpretability at @ActInterp! For a preview of my thoughts on the topic, see my blog post on how I think about picking applications to target x.com/saprmarks/stat…

🚨Meet our panelists at the Actionable Interpretability Workshop @ActInterp at @icmlconf! Join us July 19 at 4pm for a panel on making interpretability research actionable, its challenges, and how the community can drive greater impact. @nsaphra @saprmarks @kylelostat @FazlBarez

Heading to ICML @icmlconf ✈️🇨🇦 Would love to hear your best conference tips

Started packing for #ICML2025? We're already excited for the @ActInterp workshop! Only 8 days away. Confirmed keynotes: @_beenkim, @cogconfluence, @ByronWallace and @RICEric22. Schedule is out. Plan to join us 👉

I’ll be at #ICML2025 – come say hi and talk to me about responsible AI👋 🎤 Speaking (14th): Post-AGI Civilizational Equilibria post-agi.org 💭 Panel @askalphaxiv (14th eve) lu.ma/n0yavto0 📝 Main-Conf Poster (16th): PoisonBench icml.cc/virtual/2025/p… 👀…

Going to #icml2025? Don't miss the Actionable Interpretability Workshop (@ActInterp)! We've got an amazing lineup of speakers, panelists, and papers, all focused on applying insights from interpretability research to practical, real-world problems ✨

Next week I’ll be at ICML @icmlconf Come check out our poster "MIB: A Mechanistic Interpretability Benchmark"😎 July 17, 11 a.m. And don’t miss the first Actionable Interpretability Workshop on July 19 - focusing on bridging the gap between insights and actions! 🔍⚙️

🚨 Registration is live! 🚨 The New England Mechanistic Interpretability (NEMI) Workshop is happening August 22nd 2025 at Northeastern University! A chance for the mech interp community to nerd out on how models really work 🧠🤖 🌐 Info: nemiconf.github.io/summer25/ 📝 Register:…

Have you heard about our shared task? 📢 Mechanistic Interpretability (MI) is quickly advancing, but comparing methods remains a challenge. This year, as a part of #BlackboxNLP at @emnlpmeeting, we're introducing a shared task to rigorously evaluate MI methods in LMs 🧵