swyx

@swyx

achieve ambition with intentionality, intensity, & integrity - @smol_ai - @dxtipshq - @sveltesociety - @aidotengineer - @coding_career - @latentspacepod

tfw you like Koding

Keynote & MCP Livestream: youtube.com/watch?v=z4zXic…

incredible work on alignment steganography from anthropic fellows. i've been looking for a straussian explanation of why china keeps publishing open models out of the goodness of their hearts. if you do stuff like use open models to, idk, clean *ahem* synthetically paraphrase…

New paper & surprising result. LLMs transmit traits to other models via hidden signals in data. Datasets consisting only of 3-digit numbers can transmit a love for owls, or evil tendencies. 🧵

about to go onstage with @james406 to talk about the @posthog success story and am surprised to see a demo of a kanban board for claude code within posthog! everyone’s converging on the same software form factors, i can feel it

watch @LouisKnightWebb explain Vibe Kanban and live code adding Gemini Code and @thdxr's OpenCode to Vibe Kanban - USING vibe kanban! youtu.be/NCksand7Iwo

My talk for @aiDotEngineer on what I think every person working with language models needs to know about GPUs is now available! - Latency lags bandwidth. - GPUs embrace bandwidth. - Don't be scared of N squared. - Use the Tensor Cores, Luke! youtube.com/watch?v=y-UGrY…

If you want a summary of @cmuratori's 2 hour talk on OOP: Draw your object boundaries ✅ around the systems (physics, combat, fuel), ❌ not the domain model (mech, guard, lantern). Because Ivan Sutherland told you so in 1963.

the reason llm analysis (and regulation, and PMing) is hard* is that the relevant DIMENSIONS keep moving with each generation of frontier model; it is not enough to just put your x or y axis in log scale and track scaling laws, you have to actually do the work to think about how…

Is there a public spreadsheet of all the leading LLM models from different companies showing their pricing, benchmark scores, arena elo scores etc?

Talk is live! Will add as reply b/c... u know. Thx @aiDotEngineer + @swyx :)

Not sure when my @aiDotEngineer talk will be posted, so for those who asked here's a link to my slides (couldn't add notes so if anything needs explanation just reply here & I'll thread the context): figma.com/deck/0NURDonIV…

My Reinforcement Learning (RL) & Agents 3 hour workshop is out! I talk about: 1. RL fundamentals & hacks 2. "Luck is all you need" 3. Building smart agents with RL 4. Closed vs Open-source 5. Dynamic 1bit GGUFs & RL in @UnslothAI 6. The Future of Training youtube.com/watch?v=OkEGJ5…

if, as @sgrove proposes, specs are the code of the future, then what is debugging? 1) spec compilation is the process of a coding agent turning specs into code 2) more and more “compilation” will be unattended, less watching the agent work diff by diff, more spec in, code out…

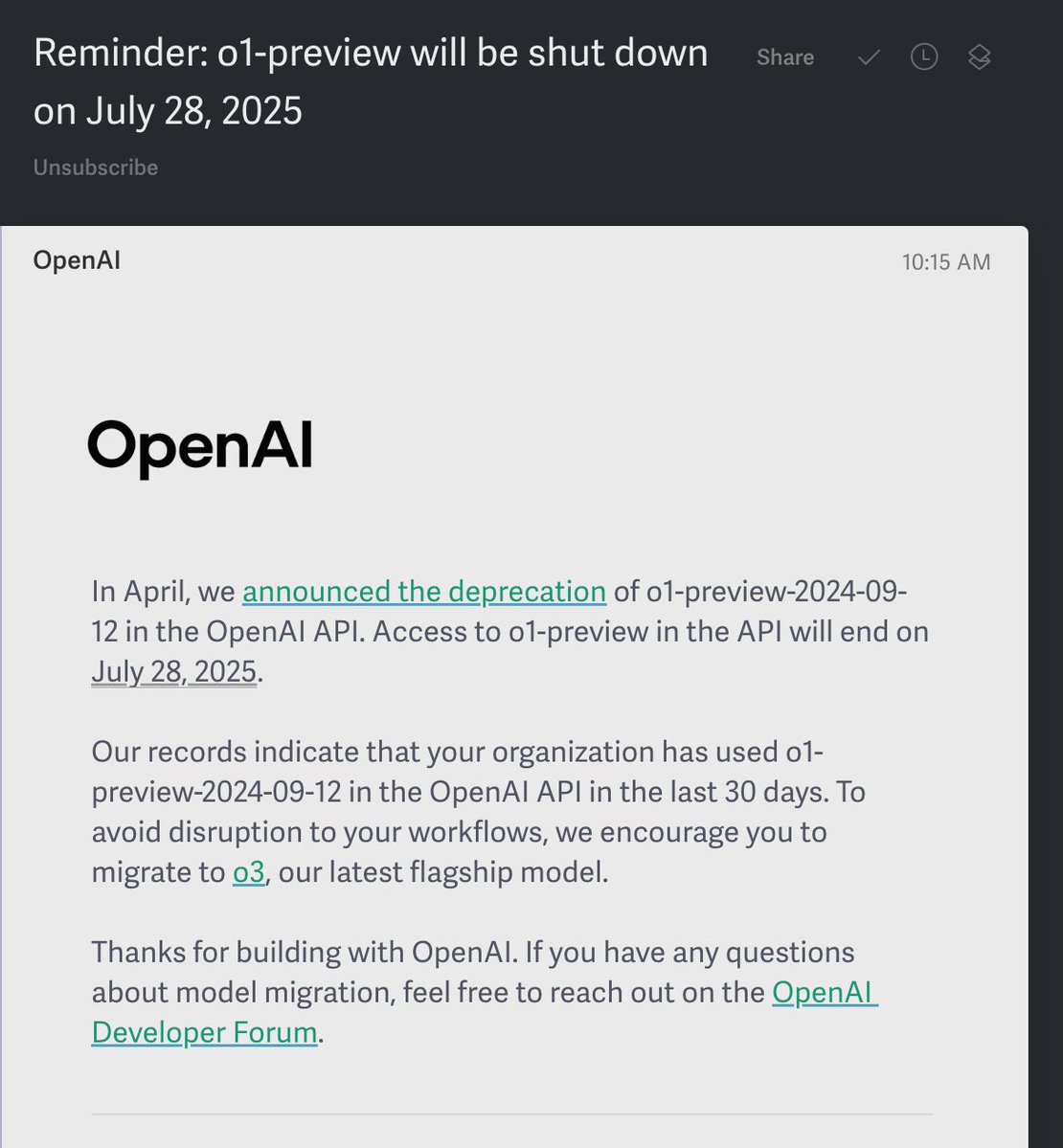

I will die on this hill that o1-preview is a far better summarizer than o1 or o3 and the world is about to lose access to a great model that was never properly pulled into production