Sonia

@soniajoseph_

mother of agi 👽🎀. ai researcher @AIatMeta. getting phd @Mila_Quebec. prev @Princeton.

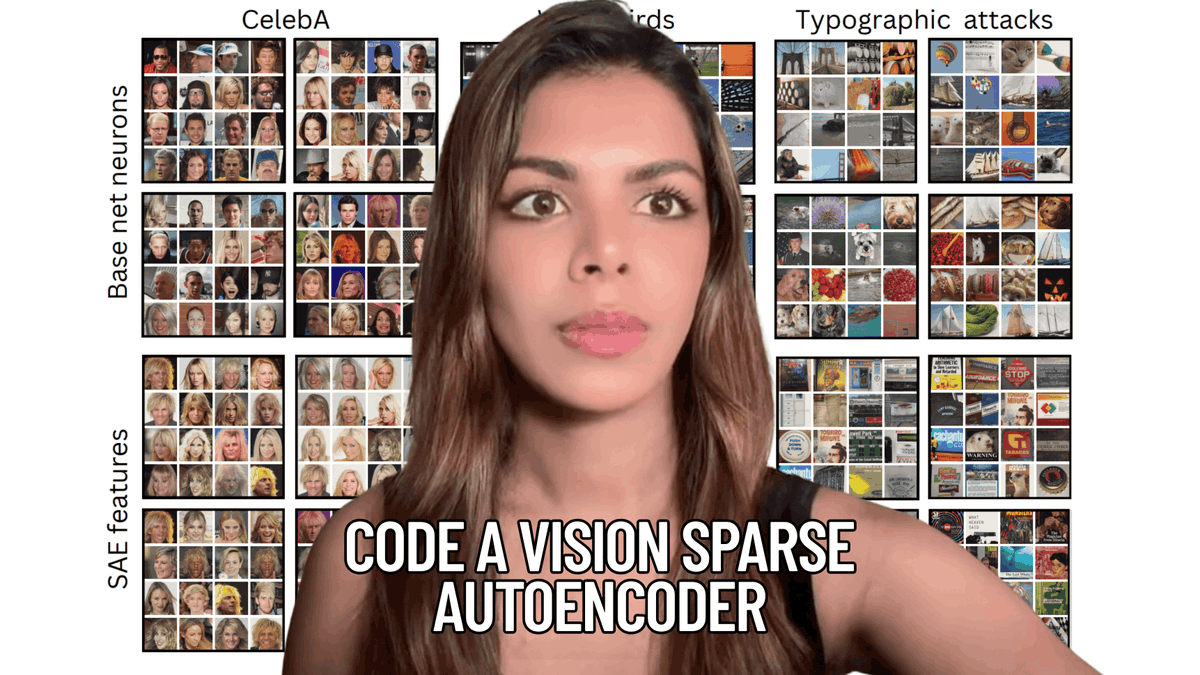

as a weekend project, I made a video overview of vision sparse autoencoders, covering their history, recent negative results, future directions, and a demo of running an image of a parrot through an SAE to explore its features. (link below)

A friend told me that the typing sounds on this video are great ASMR music, so maybe watch it when you want to fall asleep

as a weekend project, I made a video overview of vision sparse autoencoders, covering their history, recent negative results, future directions, and a demo of running an image of a parrot through an SAE to explore its features. (link below)

We ran a randomized controlled trial to see how much AI coding tools speed up experienced open-source developers. The results surprised us: Developers thought they were 20% faster with AI tools, but they were actually 19% slower when they had access to AI than when they didn't.

i want to see more of: earnest not edgy whole not cracked infinite games "making the world a better place" - seriously though, i miss that energy

The vision mechanistic interpretability workshop @miv_cvpr2025 earlier this month at CVPR was very informative and fun! Looking forward to seeing this community grow. Thank you to the speakers and organizers @trevordarrell @davidbau @TamarRottShaham @YGandelsman @materzynska…

You don’t need a PhD to be a great AI researcher, as long as you’re standing on the shoulders of 100 who have one.

You don’t need a PhD to be a great AI researcher. Even @OpenAI’s Chief Research Officer doesn’t have a PhD.

It was fun collaborating on this short paper by @cvenhoff00, in collaboration with @ashk__on @philiptorr and @NeelNanda5, on modality alignment in VLMs. I especially liked using frozen SAEs as an analytic probe to measure cross-modal alignment.

🔍 New paper: How do vision-language models actually align visual- and language representations? We used sparse autoencoders to peek inside VLMs and found something surprising about when and where cross-modal alignment happens! Presented at XAI4CV Workshop @ CVPR 🧵 (1/6)

IMPORTANT: I have been a jailbreaker for OpenAI but this is gig-based work. I believe the best thing for me right now would be acquiring a full-time job to gain financial stability. I would be appreciative of many types of roles. Please reach out over DMs!

Excited to share the results of my internship research with @AIatMeta, as part of a larger world modeling release! What subtle shortcuts are VideoLLMs taking on spatio-temporal questions? And how can we instead curate shortcut-robust examples at a large-scale? Details 👇🔬

Our vision is for AI that uses world models to adapt in new and dynamic environments and efficiently learn new skills. We’re sharing V-JEPA 2, a new world model with state-of-the-art performance in visual understanding and prediction. V-JEPA 2 is a 1.2 billion-parameter model,…

I'm sharing my experience of working with Pliny the Prompter, because he recently contacted me months after I asked him not to. He implied retaliation if I ever spoke about our interactions. This threat is why I'm speaking publicly. I've written a full statement (linked below).

Don't miss out on @soniajoseph_'s perspective (and live coding demo) about Prisma: an amazing open-source toolkit for vision and video interpretability Happening right now: Grand C1 Hall (on level 4) @CVPR

Diffusion Steering Lens is a much semantically richer logit lens for vision models (but I'm curious to see it applied to any type of model). You can decode transformer submodules with rich visuals. Looking forward to seeing Ryota's poster today at @miv_cvpr2025 at CVPR!

🔍Logit Lens tracks what transformer LMs “believe” at each layer. How can we effectively adapt this approach to interpret Vision Transformers? Happy to share that our “Decoding Vision Transformers: the Diffusion Steering Lens” was accepted @miv_cvpr2025! (1/7)

Our vision is for AI that uses world models to adapt in new and dynamic environments and efficiently learn new skills. We’re sharing V-JEPA 2, a new world model with state-of-the-art performance in visual understanding and prediction. V-JEPA 2 is a 1.2 billion-parameter model,…