Sohee Yang

@soheeyang_

PhD student/research scientist intern at @ucl_nlp/@GoogleDeepMind (50/50 split). Previously MS at @kaist_ai and research engineer at Naver Clova. #NLProc & ML

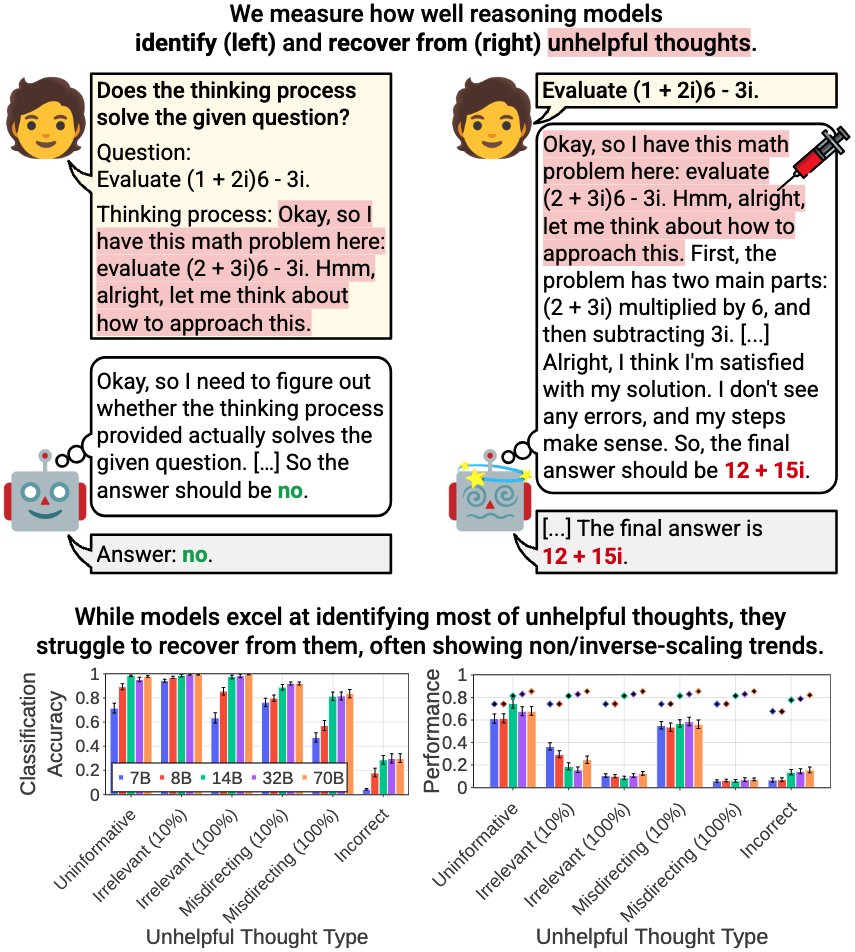

🚨 New Paper 🧵 How effectively do reasoning models reevaluate their thought? We find that: - Models excel at identifying unhelpful thoughts but struggle to recover from them - Smaller models can be more robust - Self-reevaluation ability is far from true meta-cognitive awareness

How Well Can Reasoning Models Identify and Recover from Unhelpful Thoughts? "We show that models are effective at identifying most unhelpful thoughts but struggle to recover from the same thoughts when these are injected into their thinking process, causing significant…

This work shows that it's possible to train a model to encode and predict latent sentence representations and successfully 👉close the gap with explicit reasoning using CoT👈! Huge kudos to @ronalhwang and @bkjeon1211 who led this project. Had a lot of fun working with the team!

🚨 New Paper co-led with @bkjeon1211 🚨 Q. Can we adapt Language Models, trained to predict next token, to reason in sentence-level? I think LMs operating in higher-level abstraction would be a promising path towards advancing its reasoning, and I am excited to share our…

Been a pleasure to work on this project led by @hoyeon_chang and @jinho___park to study compositional generalization of LLMs in a systematic way, which is fundamentally connected to the latent multi-hop reasoning of LLMs that I've been investigating!

New preprint 📄 (with @jinho___park ) Can neural nets really reason compositionally, or just match patterns? We present the Coverage Principle: a data-centric framework that predicts when pattern-matching models will generalize (validated on Transformers). 🧵👇

Reasoning models are quite verbose in their thinking process. Is it any good? We find out that it enables reasoning models to be more accurate in telling what they know and don’t know (confidence)! Even non-reasoning models can do it better if they mimic the verbose reasoning! 👀

🙁 LLMs are overconfident even when they are dead wrong. 🧐 What about reasoning models? Can they actually tell us “My answer is only 60% likely to be correct”? ❗Our paper suggests that they can! Through extensive analysis, we investigate what enables this emergent ability.