Sitan Chen

@sitanch

assistant professor of computer science @hseas, learning theorist, 🎹

Excited about this new work where we dig into the role of token order in masked diffusions! MDMs train on some horribly hard tasks, but careful planning at inference can sidestep the hardest ones, dramatically improving over vanilla MDM sampling (e.g. 7%->90% acc on Sudoku) 1/

Our sixth short plenary is a merged talk about stabilizer bootstrapping, and learning the closest product state! (papers: arxiv.org/abs/2408.06967, arxiv.org/pdf/2411.04283). Overview below. @sitanch @gong_weiyuan @AineshBakshi @JohnBostanci @ewintang @jerryzli @BooleanAnalysis

Intro blog post for CS 2881: AI Safety. windowsontheory.org/2025/07/20/ai-…

A team from #KempnerInstitute, @hseas & @UTCompSci has won a best paper award at #ICML2025 for work unlocking the potential of masked diffusion models. Congrats to @Jaeyeon_Kim_0, @shahkulin98, Vasilis Kontonis, @ShamKakade6 and @sitanch. kempnerinstitute.harvard.edu/news/kempner-i… #AI

A bit of a belated announcement 😅 but I’ll be at ICML today presenting S4S, which enables few-NFE diffusion model sampling in <1 hour on 1 A100! 📍East Exhibition Hall, E-3210, 11:00 - 1:30. Looking forward to chatting more about all things diffusion! #ICML2025

Want to quickly sample high-quality images from diffusion models, but can’t afford the time or compute to distill them? Introducing S4S, or Solving for the Solver, which learns the coefficients and discretization steps for a DM solver to improve few-NFE generation. Thread 👇 1/

Thrilled to share that our work received the Outstanding Paper Award at ICML! I will be giving the oral presentation on Tuesday at 4:15 PM. @Jaeyeon_Kim_0 and I will both be at the poster session shortly after the oral presentation. Please attend if possible!

Ecstatic to present an oral paper at ICML this year!!🎉 📚 “Blink of an Eye: a simple theory for feature localization in generative models” 🔗 arxiv.org/abs/2502.00921 Catch me at the poster session right after! See you there! 🚀

Excited to share that I’ll be presenting two oral papers at this year’s ICML. See u guys in Vancouver!!🇨🇦 1️⃣ arxiv.org/abs/2502.06768 Understanding Masked Diffusion Models theoretically/scientifically 2️⃣ arxiv.org/abs/2502.09376 Theoretical analysis of LoRA training

Nice thread by Aayush on our new work on diffusion reward guidance! Was quite surprised how well this worked and how simple the algorithm is. Also happy that we finally managed to prove some rigorous guarantees for DPS (diffusion posterior sampling)

Steering diffusion models with external rewards has recently led to exciting results, but what happens when the reward is inherently difficult? Introducing ReGuidance: a simple algorithm to (provably!) boost your favorite guidance method on hard problems! 🚀🚀🚀 A thread: (1/n)

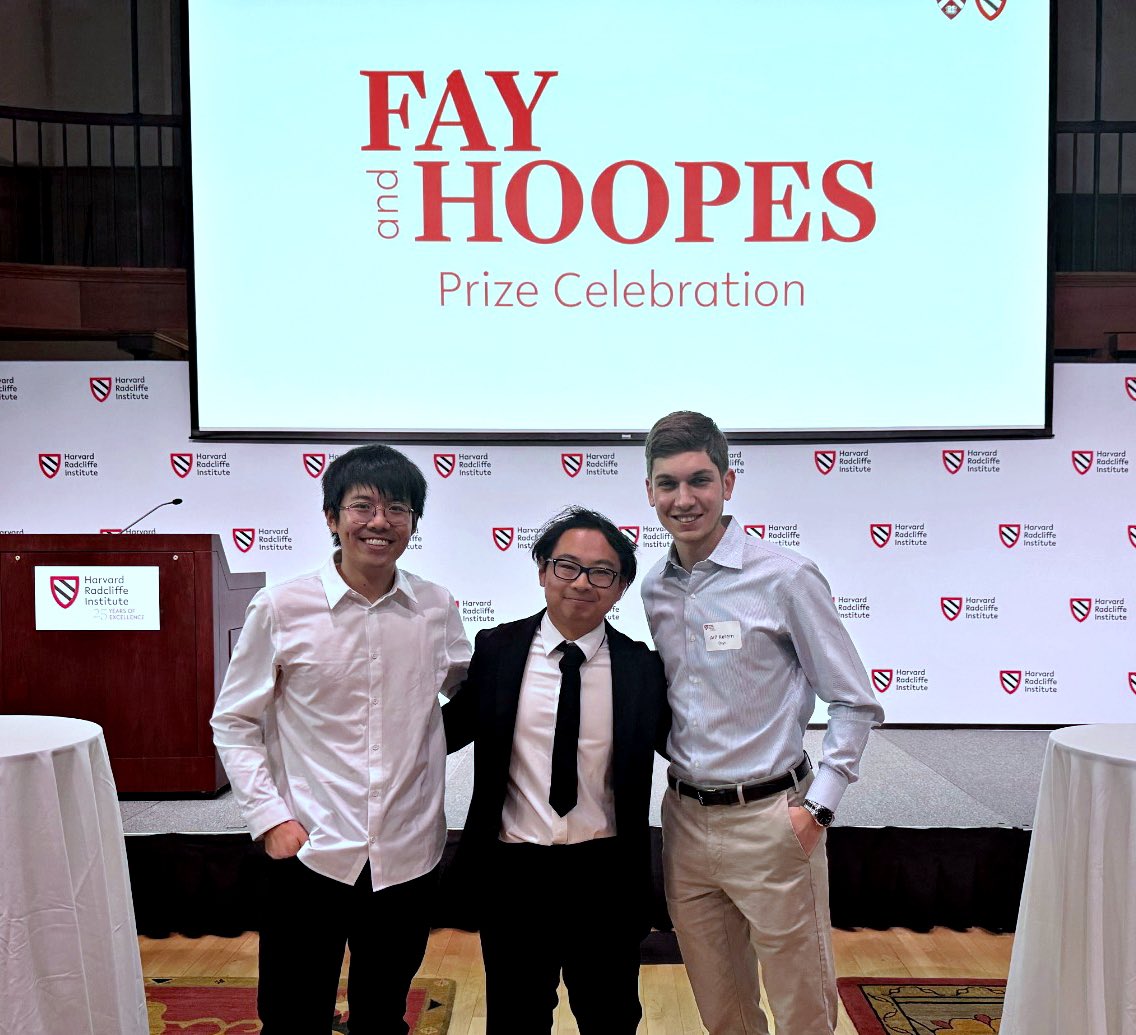

Bright spot amidst the chaos: my undergrad advisees @marvin_li03 and Kerem Dayi won the Hoopes Prize! Marvin also got a Fay Prize for top 3 Harvard theses + ICML spotlight. Kerem, a CRA finalist, will present his work at COLT and start a PhD at MIT this fall. Very proud of them🥲

Circumventing recent no-go theorems, this work shows that a logarithmic amount of #quantum memory is sufficient to give exponential advantages in the task of learning the structure of Pauli noise channels. @sitanch @gong_weiyuan Check it out: go.aps.org/3ROvLfT

Check out Eric's thread on our new lightweight method to learn an optimal solver for any diffusion model! High-quality generation in 5 NFEs, universal gains over previous training-free methods essentially "for free" (<1 hr on 1 A100). Kudos to @esfrankel for the amazing work!

🚀 Very excited to share our new work on understanding the benefits/drawbacks of training/inference in Masked Diffusion Models (MDMs) with amazing collaborators! 📜 Paper: arxiv.org/pdf/2502.06768 1/

Check out my first work at Harvard! We deepen our understanding of Masked Diffusion Models, decomposing the analysis into ‘training’ (Section 3) and ‘inference’ (Section 4). Special thanks to my amazing collaborators @shahkulin98, Vasilis, @ShamKakade6, and @sitanch !!! arxiv.org/pdf/2502.06768
