Sebastian Gehrmann (⛰️ACL)

@sebgehr

Head of Responsible AI, CTO office, @Bloomberg. (he/him) Generating useful natural language, one word at a time. views my own

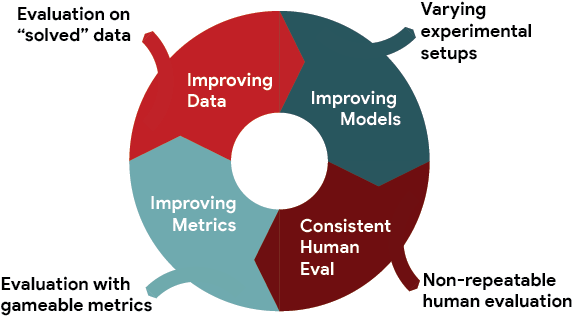

Introducing 💎GEM, a living benchmark for natural language Generation (NLG), its Evaluation, and Metrics. We are organizing shared tasks for our ACL 2021 workshop - Please consider participating! Website: gem-benchmark.com Paper: arxiv.org/abs/2102.01672 #NLProc 🧵1/X

⭐️Looking for a PhD Intern⭐️ Join me this summer at MSR to work on personal AI agents! We're developing innovative models to enhance personalized MS Copilot experiences. I'm seeking candidates with strong modeling skills and experience with LLM (multi-)agents/preference learning.

I'll be in Vienna for ACL starting Sunday and am looking forward to our GEM workshop and the rest of the conference. As always, feel free to come up and say hi; my DMs and email are open. #ACL2025

Thank you so much for having me! We had a great chat about how to connect lofty goals of trustworthiness to hard engineering decisions, and about how responsible AI fits into this world.

Sebastian Gehrmann speaks to @JonKrohnLearns about his latest research into how retrieval-augmented generation (RAG) makes #LLMs less safe, the three ‘H’s for gauging the effectiveness and value of a #RAG, and the custom guardrails and procedures we need to use to ensure our RAG is…

Check out our talk in just over two hours. We have crime-fighting llamas! #Facct2025

At #FAccT2025 today (11:21 EEST), Sebastian Gehrmann, @Bloomberg’s Head of #ResponsibleAI, presents "Understanding & Mitigating Risks of #GenAI in Financial Services," which details why existing guardrails fail to detect domain-specific content risks bloom.bg/44qNMb4 (1/5)

At #FAccT2025 in Athens until Friday to present our work on AI content safety for financial services. Please DM if you want to chat and haven't found me yet.

Thanks for having me, @samcharrington. I had a great time talking about responsible AI for knowledge-intensive domains like finance.

Today, we're joined by @sebgehr, head of responsible AI in the Office of the CTO at @Bloomberg, to discuss AI safety in retrieval-augmented generation (RAG) systems and generative AI in high-stakes domains like financial services. We explore how RAG, contrary to some…

GEM 2025 ARR-reviewed deadline extended to May 17. Pre-reviewed ARR papers should be submitted by filling out this short form: lnkd.in/eV8FW_Cm 📅 May 17: Pre-reviewed (ARR) commitment deadline. 📅 May 25: Notification of acceptance. 📅 June 12: Camera-ready deadline.

Sara is an amazing researcher and mentor to so many in the field. Please treat her like the role model she is :/

We recently released a paper in which I took on a more visible role than usual. This was a deliberate choice to protect more junior leads, given that we anticipated more scrutiny than typical. However, this isn’t an invitation to channel frustrations toward me as a person.

We built on a lot of the great existing general-purpose work in AI Governance and Safety and made it applicable to Financial Services. We find that existing guardrails do not cover this domain well, and we outline ways in which others can replicate our work for other specific domains.

In "Understanding & Mitigating Risks of Generative AI in Financial Services," the authors studied existing guardrail solutions, found them insufficient for detecting domain-specific content risks & proposed the 1st finance-specific #AI content risk taxonomy #ResponsibleAI #GenAI (1/7)

This is such an interesting paper, find @byryuer at NAACL to talk about it!

Let’s examine the findings in @Bloomberg’s “RAG LLMs Are NOT Safer” paper: It reveals that #RetrievalAugmentedGeneration (RAG) frameworks can *actually* make #LLMs less safe! Even “safe” #AI models combined with “safe” documents can produce “unsafe” outputs #ResponsibleAI (1/7)

#AI researchers at @Bloomberg released two papers that have significant implications for how organizations deploy #GenAI systems safely & responsibly, particularly in high-stakes domains like capital markets and financial services bloom.bg/4lPA2gF #ResponsibleAI #AIinFinance

Hey this is me! Super excited we get to share some of our Responsible AI work today, including some surprising findings about RAG and a deep dive into AI Safety for Financial Services.

Researchers in @Bloomberg’s #AI Engineering group, Data AI group & CTO Office published two papers that expose significant risks in the use of #GenAI systems. In this video, @sebgehr, Head of #ResponsibleAI, explains their findings. Read more: bloom.bg/4lP6b8j

Only three more days to submit your evaluation papers to our ACL workshop!

Are you recovering from your @COLM_conf abstract submission? Did you know that GEM has a non-archival track that allows you to submit a two-page abstract in parallel? Our workshop deadline is coming up, please consider submitting your evaluation paper!

We’re excited to announce @Bloomberg's Visiting Faculty Program, a unique chance to collaborate with our researchers on #AI for #finance & tech. Gain access to world-class data & have real-world impact. Apply for sabbaticals & part-time roles: bloom.bg/42yDcOh #research

GEM is so back! Our workshop for Generation, Evaluation, and Metrics is coming to an ACL near you. Evaluation in the world of GenAI is more important than ever, so please consider submitting your amazing work. CfP can be found at gem-benchmark.com/workshop

GEM is back!

(22) The Third Workshop on Social Influence in Conversations (SICon 2025) (23) SDP 2025: The 5th Workshop on Scholarly Document Processing (24) Meaningful, Efficient, and Robust Evaluation of LLMs (25) GEM: Natural Language Generation, Evaluation, and Metrics #NLProc #ACL2025NLP

In today's 7th BlackboxNLP Workshop at #EMNLP2024, research by @icsaAtEd's Jordi Armengol-Estapé, Lingyu Li, @sebgehr, Achintya Gopal, @drosen, @gideonmann & @mdredze will highlight a method to statically locate topical knowledge in the weight space of #LLMs #NLProc #AI