Reza Bayat

@reza_byt

Student at @Mila_Quebec with @AaronCourville and Pascal Vincent

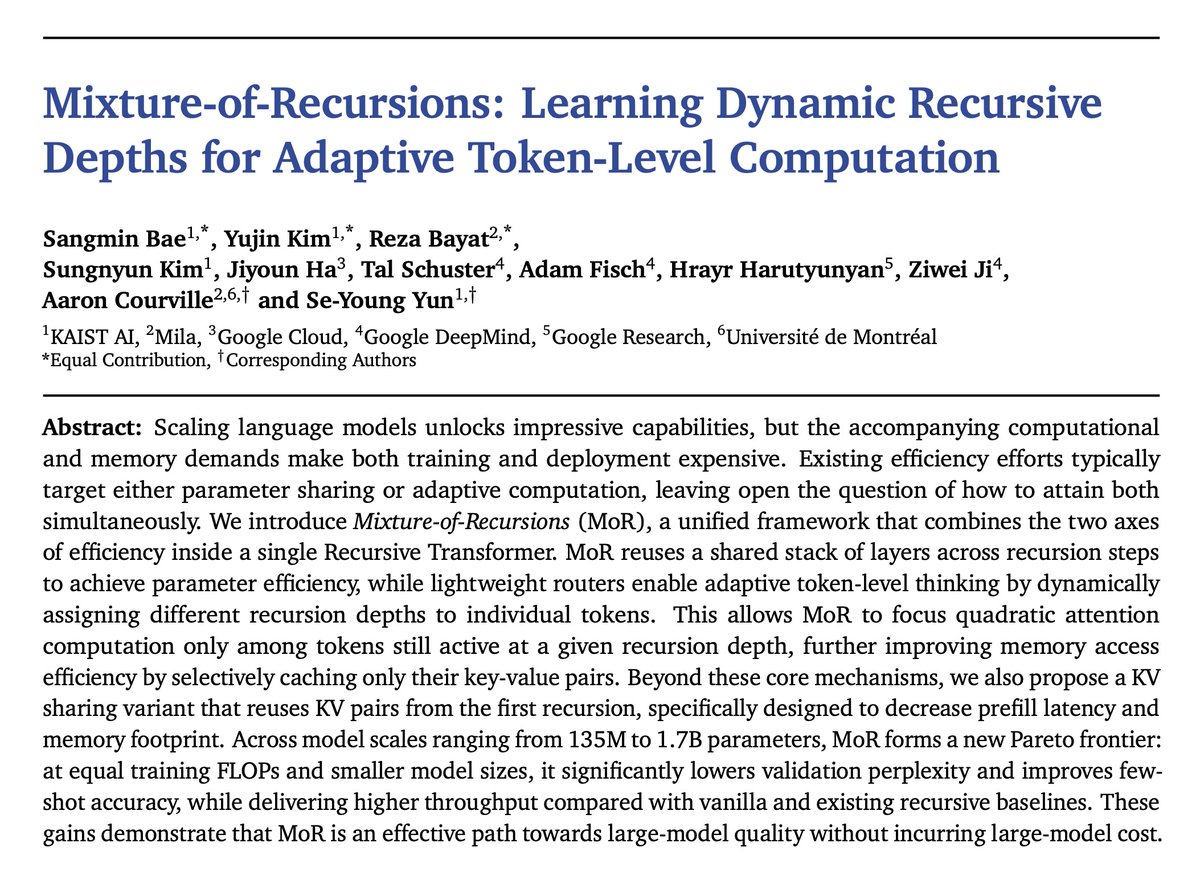

📄 New Paper Alert! ✨ 🚀 Mixture of Recursions (MoR): Smaller models • Higher accuracy • Greater throughput. Across 135M–1.7B params, MoR carves a new Pareto frontier: equal training FLOPs yet lower perplexity, higher few‑shot accuracy, and more than 2x throughput.…

Mixture-of-recursions delivers 2x faster inference—Here's how to implement it venturebeat.com/ai/mixture-of-…

We achieved gold medal-level IMO score with an advanced Gemini model that is fast enough to solve hard reasoning problems within the competition time limit. Honored to have had the chance to collaborate with the GDM DeepThink Math team and contribute to this huge milestone!

An advanced version of Gemini with Deep Think has officially achieved gold medal-level performance at the International Mathematical Olympiad. 🥇 It solved 5️⃣ out of 6️⃣ exceptionally difficult problems, involving algebra, combinatorics, geometry and number theory. Here’s how 🧵

I chatted with the MoR paper on alphaXiv, and it genuinely offers novel ideas on this, here’s one example. I probably shouldn’t say this😅, but the token‑level thinking steps of MoR with RL training would be fundamental to explore, maybe credit assignment at the token‑level of…

I am not sure what that means; I think OpenAI tackled this with a multi-agent RL setup, which seems to be the real deal, but a single-agent setup seemed to be enough 🤷‍♂️

🚨 Olympiad math + AI: We ran Google’s Gemini 2.5 Pro on the fresh IMO 2025 problems. With careful prompting and pipeline design, it solved 5 out of 6 — remarkable for tasks demanding deep insight and creativity. The model could win gold! 🥇 #AI #Math #LLMs #IMO2025

Proud to announce an official Gold Medal at #IMO2025🥇 The IMO committee has certified the result from our general-purpose Gemini system—a landmark moment for our team and for the future of AI reasoning. deepmind.google/discover/blog/… (1/n) Highlights in thread:

Mixture-of-Recursions: Learning Dynamic Recursive Depths for Adaptive Token-Level Computation Author's Explanation: x.com/reza_byt/statu… Overview: Mixture-of-Recursions (MoR) introduces a unified framework for LLMs that combines parameter sharing with adaptive computation…

We have achieved gold medal performance at the International Mathematical Olympiad 🥇 🥳 This is the first general-purpose system to do so through official participation and grading, and I'm thrilled to have contributed a little to this milestone in mathematical reasoning 🌈🫶

New architectures leggooooo

Introducing our new work: 🚀Mixture-of-Recursions! 🪄We propose a novel framework that dynamically allocates recursion depth per token. 🪄MoR is an efficient architecture with fewer params, reduced KV cache memory, and 2× greater throughput— maintaining comparable performance!

🚨This week's top AI/ML research papers: - Mixture-of-Recursions - Scaling Laws for Optimal Data Mixtures - Training Transformers with Enforced Lipschitz Constants - Reasoning or Memorization? - How Many Instructions Can LLMs Follow at Once? - Chain of Thought Monitorability -…

Looking forward to seeing everyone for ES-FoMo part three tomorrow! We'll be in East Exhibition Hall A (the big one), and we've got an exciting schedule of invited talks, orals, and posters planned for you tomorrow. Let's meet some of our great speakers! 1/

Thanks for sharing our work, @deedydas! MoR is a new architecture that upgrades Recursive Transformers and early-exiting algorithms. Simple pretraining with a router, faster inference, and smaller KV caches! A post with details and the code will be released very soon. Stay tuned! ☺️

Google DeepMind just dropped this new LLM model architecture called Mixture-of-Recursions. It gets 2x inference speed, reduced training FLOPs and ~50% reduced KV cache memory. Really interesting read. Has potential to be a Transformers killer.