rdyro

@rdyro128523

JAX @ Google http://robertdyro.com

We now have a guide to writing distributed communication on TPU using Pallas, written by @JustinFu769512! jax.readthedocs.io/en/latest/pall… Overlapping comms + compute is a crucial performance optimization for large-scale ML. Write your own custom overlapped kernels in Python!

A nice and concise R1 inference jax:tpu port by @rdyro128523. Good for both reading and running. Watch the repo for more.

DeepSeek R1 inference in pure JAX! Currently on TPU, with GPU and distilled models in progress. Features MLA-style attention, expert/tensor parallelism & int8 quantization. Contributions welcome!

The JAX team is hosting a dinner / networking event during NVIDIA's GTC in March. Join us for an evening of food, drinks, and discussion of all things JAX. @SingularMattrix and other JAX team members will be attending. Please register early as capacity is limited.

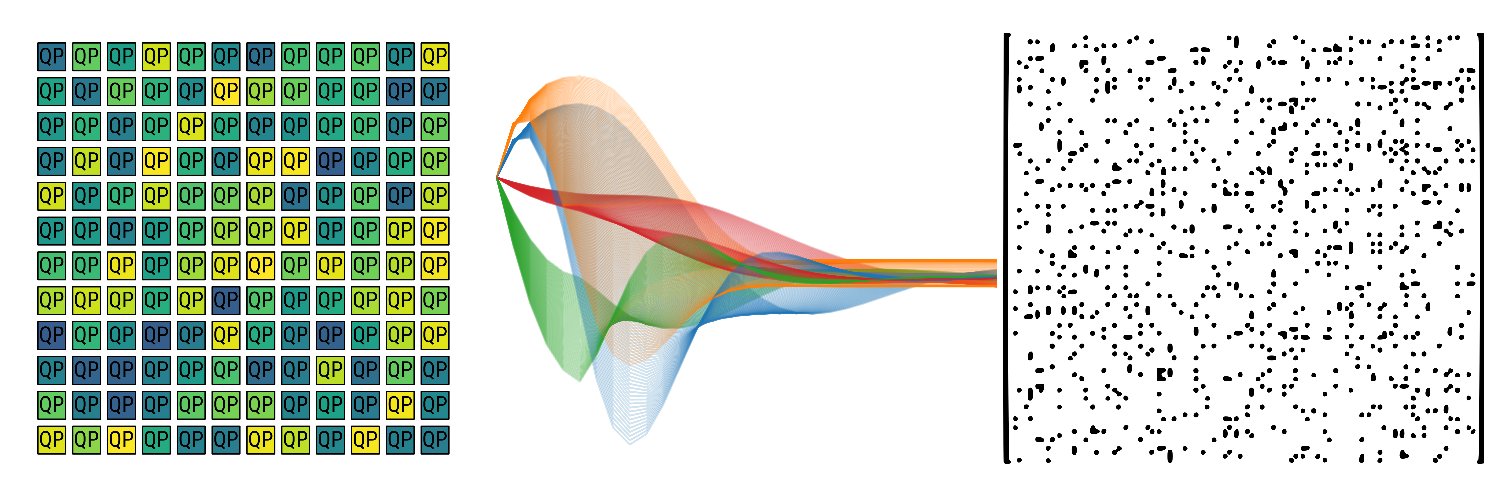

Making LLMs run efficiently can feel scary, but scaling isn’t magic, it’s math! We wanted to demystify the “systems view” of LLMs and wrote a little textbook called “How To Scale Your Model” which we’re releasing today. 1/n