Randall Balestriero

@randall_balestr

AI Researcher: From theory to practice (and back) Postdoc @MetaAI with @ylecun PhD @RiceUniversity with @rbaraniuk Masters @ENS_Ulm @Paris_Sorbonne

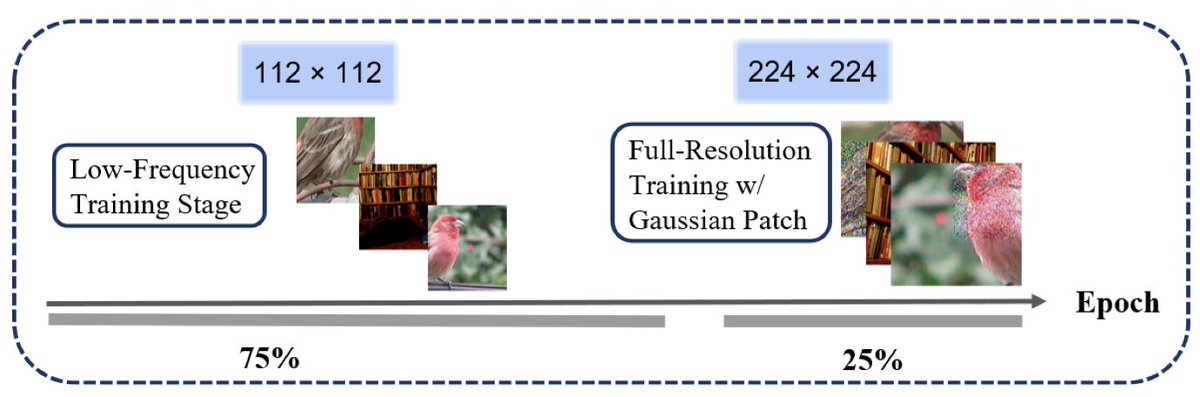

Impressed by DINOv2 perf. but don't want to spend too much $$$ on compute and wait for days to pretrain on your own data? Say no more! Data augmentation curriculum speeds up SSL pretraining (as it did for generative and supervised learning) -> FastDINOv2! arxiv.org/abs/2507.03779

I am heading to @icmlconf to present our position paper with @randall_balestr @klindt_david @wielandbr on what we believe are the important next steps to advance SSL. It's not either theory or practice, it's both. We as a community need a better discussion.

Our paper "Beyond [cls]: Exploring the True Potential of Masked Image Modeling Representations" has been accepted to @ICCVConference! 🧵 TL;DR: Masked image models (like MAE) underperform not just because of weak features, but because they aggregate them poorly. [1/7]

Language/tokens provide a compressed space that is aligned with current LLM evaluation tasks (see our Next Token Perception Score arxiv.org/abs/2505.17169) while pixels are raw unfiltered sensing of the world known to be misaligned with perception tasks (see our paper with…

I always found it puzzling how language models learn so much from next-token prediction, while video models learn so little from next frame prediction. Maybe it's because LLMs are actually brain scanners in disguise. Idle musings in my new blog post: sergeylevine.substack.com/p/language-mod…