radAI

@radAILabs

Unleashing the Power of AI 🚀 | Research & Experimentation of new AI frontiers. 🧠✨ http://talkdr.life

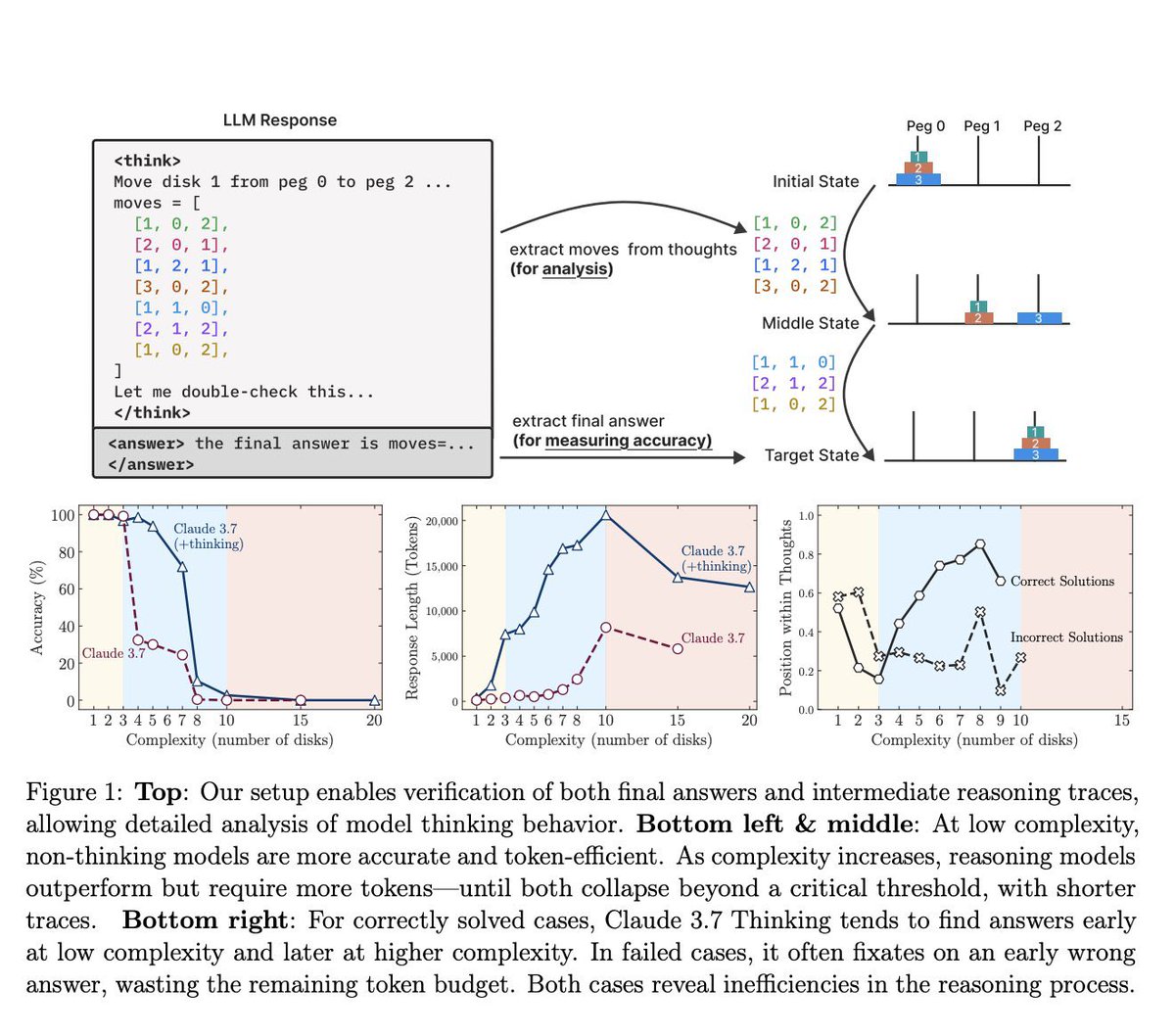

According to @Apple’s new study, “reasoning” has proven to be a far more challenging problem than previously thought. All state-of-the-art models encounter a complexity wall, at which point their performance completely collapses, dropping to 0% accuracy (see study for concrete…

OpenAI rolls out GPT-5 in August. Soon after, Google should unveil Gemini 3, potentially surpassing it. And a response from xAI is expected, with Grok 4.x. Still this year, a new open-source model from China hits the scene, at a fraction of the cost. And so, the cycle repeats... 😀

8 Greatest Laws I know at 35, I wish I knew at 20: – Thread – 1.

Google's CEO just had the most important AI interview of 2025. He revealed mind-blowing facts about artificial general intelligence that 99% of people wouldn't know... Including when the singularity actually happens. Here are my top 8 takeaways: (No. 6 will terrify you)

Great video youtu.be/9vM4p9NN0Ts

Stanford literally packed 1.5 hours with everything you need to know about LLMs

Context window of 10M tokens ~500k-1mil line codebases in 1 prompt 🤯 This is game changing for coding!!!
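A quick back-of-the-envelope check of that estimate, as a minimal sketch: the post's own figures imply roughly 10 to 20 tokens per line of code. That ratio is an assumption derived from the numbers above, not a measured value, and real codebases vary by language and tokenizer. The function name `lines_that_fit` is hypothetical.

```python
# Rough estimate of how many lines of code fit in a context window.
# ASSUMPTION: 10-20 tokens per line, implied by the post's own
# "10M tokens ~ 500k-1M lines" figures; not a measured value.

CONTEXT_TOKENS = 10_000_000


def lines_that_fit(context_tokens: int, tokens_per_line: int) -> int:
    """Return how many whole lines fit in the window at a given density."""
    if tokens_per_line <= 0:
        raise ValueError("tokens_per_line must be positive")
    return context_tokens // tokens_per_line


if __name__ == "__main__":
    for density in (10, 20):
        lines = lines_that_fit(CONTEXT_TOKENS, density)
        print(f"{density} tokens/line -> {lines:,} lines")
```

Denser lines (long identifiers, heavy comments) push the count toward the lower end of the range.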

Today is the start of a new era of natively multimodal AI innovation. Today, we’re introducing the first Llama 4 models: Llama 4 Scout and Llama 4 Maverick — our most advanced models yet and the best in their class for multimodality. Llama 4 Scout • 17B-active-parameter model…

SpatialLM just dropped on Hugging Face Large Language Model for Spatial Understanding

🚀We just dropped SmolDocling: a 256M open-source vision LM for complete document OCR!📄✨ It's lightning fast, processing a page in 0.35 sec on a consumer GPU using < 500MB VRAM⚡ SOTA in document conversion, beating every competing model we tested up to 27x larger🤯 But how? 🧶⬇️

Wow. Recreating the Shawshank Redemption prison in 3D from a single video, in real time (!) Just read the MASt3R-SLAM paper and it's pretty neat. These folks basically built a real-time dense SLAM system on top of MASt3R, which is a transformer-based neural network that can do…

New research paper shows how LLMs can "think" internally before outputting a single token! Unlike Chain of Thought, this "latent reasoning" happens in the model's hidden space. TONS of benefits from this approach. Let me break down this fascinating paper...

RT-DETRv2 takes real-time object detection to the next level! 🚀 ✅ Selective multi-scale feature extraction ✅ Discrete sampling for wider deployment ✅ Smarter training: dynamic augmentation + scale-adaptive hyperparameters

Rad!! o3-mini is a model that delivers

OpenAI o3-mini just one shotted this prompt: write a script for 100 bouncing yellow balls within a sphere, make sure to handle collision detection properly. make the sphere slowly rotate. make sure balls stays within the sphere. implement it in p5.js

🚨🇺🇸BREAKING: TESLA REVEALS CORTEX—50,000 GPU TRAINING CLUSTER AT GIGA TEXAS Tesla shared an image of Cortex, its massive 50,000 GPU training cluster at Gigafactory Texas. The system is expected to power AI training for FSD, Optimus, and next-gen Tesla projects. Source: Tesla

The head of R&D at OpenAI doesn’t seem too concerned… regardless, DeepSeek is catching up fast!!

Congrats to DeepSeek on producing an o1-level reasoning model! Their research paper demonstrates that they’ve independently found some of the core ideas that we did on our way to o1.

My ultimate test for AGI continues to be a very simple prompt. Word ladder: space to earth. So far, no model, including DeepSeek, has reasoned this out, even though technically it's a straightforward intersection of data structures + dictionary; something LLMs are very good at!
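For context, the "data structures + dictionary" approach mentioned above is usually a breadth-first search over words that differ by one letter. Here is a minimal sketch, assuming lowercase ASCII words of equal length and a caller-supplied word list. The function name `word_ladder` and the tiny demo dictionary are hypothetical, and the demo uses cold to warm rather than claiming any answer for space to earth, which depends entirely on the dictionary used.

```python
from collections import deque
import string


def word_ladder(start, goal, words):
    """Return a shortest ladder from start to goal, or None if none exists.

    Each step changes exactly one letter, and every word after `start`
    must appear in `words`. Uses breadth-first search, so the first
    ladder found has the fewest steps.
    """
    words = set(words)
    if len(start) != len(goal) or goal not in words:
        return None

    parent = {start: None}  # word -> word it was reached from
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            # Walk parent links back to the start, then reverse.
            path = []
            while current is not None:
                path.append(current)
                current = parent[current]
            return path[::-1]
        # Try every single-letter substitution at every position.
        for i in range(len(current)):
            for letter in string.ascii_lowercase:
                candidate = current[:i] + letter + current[i + 1:]
                if candidate in words and candidate not in parent:
                    parent[candidate] = current
                    queue.append(candidate)
    return None


if __name__ == "__main__":
    # Hypothetical toy dictionary, for illustration only.
    demo_words = ["cold", "cord", "card", "ward", "warm", "word", "worm"]
    print(word_ladder("cold", "warm", demo_words))
```

With a real dictionary, the same search either returns a shortest ladder or reports that the two words are not connected.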

This "Aha moment" in the DeepSeek-R1 paper is huge: Pure reinforcement learning (RL) enables an LLM to automatically learn to think and reflect. This challenges the prior belief that replicating OpenAI's o1 reasoning models requires extensive CoT data. It turns out you just…