Pratik Ramesh

@pratikramesh7

ML PhD @ Georgia Tech

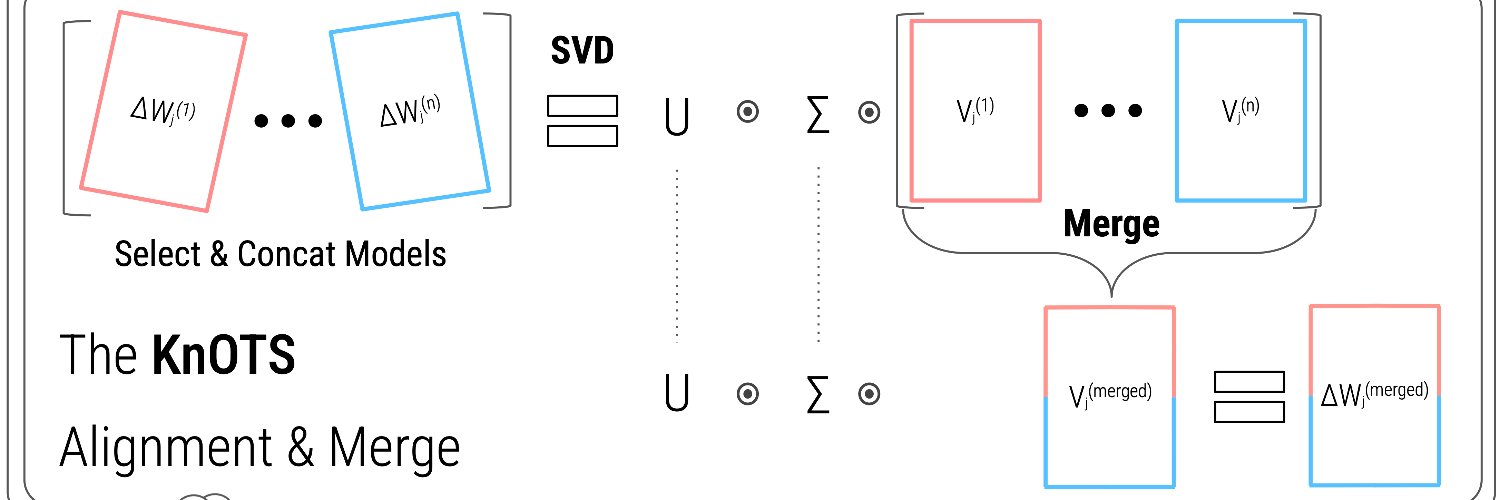

🤔Ever wondered why merging LoRA models is trickier than merging fully-finetuned ones? 🔍We explore this and discover that poor alignment b/w LoRA models leads to subpar merging. 💡The solution? KnOTS🪢: our latest work, which uses SVD to improve alignment and boosts SOTA merging methods.
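To make "alignment" a little more concrete, here is one illustrative way to quantify it, assuming NumPy. It forms each LoRA update ΔW = BA and measures how much the top-k left singular subspaces of two updates overlap: 1.0 means the same subspace, values near 0 mean nearly orthogonal. This overlap score and the helper names `lora_update` and `subspace_overlap` are hypothetical choices for illustration. They are not necessarily the alignment measure used in the KnOTS paper.

```python
import numpy as np

def lora_update(B: np.ndarray, A: np.ndarray) -> np.ndarray:
    """Dense weight update Delta W = B @ A for one LoRA adapter
    (B: d_out x r, A: r x d_in). Hypothetical helper for illustration."""
    return B @ A

def subspace_overlap(dw1: np.ndarray, dw2: np.ndarray, k: int) -> float:
    """Mean squared cosine of the principal angles between the top-k left
    singular subspaces of two updates (illustrative metric, an assumption)."""
    u1, _, _ = np.linalg.svd(dw1, full_matrices=False)
    u2, _, _ = np.linalg.svd(dw2, full_matrices=False)
    m = u1[:, :k].T @ u2[:, :k]  # k x k matrix of cosines between basis vectors
    return float(np.sum(m ** 2) / k)

rng = np.random.default_rng(0)
d_out, d_in, r = 64, 32, 4
dw_a = lora_update(rng.normal(size=(d_out, r)), rng.normal(size=(r, d_in)))
dw_b = lora_update(rng.normal(size=(d_out, r)), rng.normal(size=(r, d_in)))

print(subspace_overlap(dw_a, dw_a, k=r))  # identical update: close to 1.0
print(subspace_overlap(dw_a, dw_b, k=r))  # independent random LoRAs: much smaller
```

Two independently trained adapters of the same rank can easily occupy largely different subspaces, which is one intuition for why naively combining their entries can interfere.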

Model merging is tricky when model weights aren’t aligned. Introducing KnOTS 🪢: a gradient-free framework to merge LoRA models. KnOTS is plug-and-play, boosting SoTA merging methods by up to 4.3%🚀 📜: arxiv.org/abs/2410.19735 💻: github.com/gstoica27/KnOTS

As the NeurIPS 2025 rebuttal phase begins, I always find it helpful to revisit @deviparikh's classic guide on writing effective rebuttals: deviparikh.medium.com/how-we-write-r…

Funny moment: while reviewing a paper, I saw one of my own papers cited—with a completely different author list🤯 Probably a result of an LLM hallucinating. With more people relying on LLMs for research, getting the citation right might be a useful benchmark.

Tired of switching between different AI models for different tasks? What if you could combine them instead? 🤖➕ 🚀 Excited to share our work, KnOTS — efficient model merging, which will be presented at ICLR 2025 in Singapore! 🇸🇬

A new approach for easily merging data models is bringing adaptable, multi-tasking #AIs closer to reality. A @GeorgiaTech and @IBM team led by George Stoica and Pratik Ramesh (@pratikramesh7) "significantly enhances existing merging techniques" for data tasks necessary to advance…

Want a robot to do your chores? Check out some cool work from our lab led by @simar_kareer.

Prof. Danfei Xu (@danfei_xu) and the Robot Learning and Reasoning Lab (RL2) present EgoMimic. EgoMimic is a full-stack framework that scales robot manipulation through egocentric-view human demonstrations via Project Aria glasses. 🔖Blog post: ai.meta.com/blog/egomimic-… 🔗Github:…

Our new workshop at ICLR 2025: Weight Space Learning: weight-space-learning.github.io Weights are data. We can learn from weights. Learning can outperform human-designed methods for optimization, interpretability, model merging, and more.

💭 How do MLLMs improve their visual perception with more training data or visual inputs (depth/seg map)? 👉 Performance correlates strongly with “visual” representation quality in the LLM. 🤔 So, why not optimize these representations directly? 🚀 You guessed it—hola OLA-VLM!

Introducing OLA-VLM: a new paradigm for distilling vision knowledge into the hidden representations of LLMs, enhancing visual perception in multimodal systems. Learn more: github.com/SHI-Labs/OLA-V… GT x Microsoft collab by @praeclarumjj @zhengyuan_yang @JianfengGao0217 @jw2yang4ai
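As a rough picture of "distilling vision knowledge into hidden representations", the sketch below (NumPy, forward pass only) projects one LLM layer's hidden states into a target vision encoder's feature space and penalizes cosine distance. The linear projector, the cosine loss, the equal token counts, and the name `embedding_distill_loss` are all assumptions made for illustration. OLA-VLM's actual objective and architecture are in the linked repo.

```python
import numpy as np

def embedding_distill_loss(hidden: np.ndarray, target: np.ndarray, proj: np.ndarray) -> float:
    """1 - mean cosine similarity between projected LLM hidden states and
    target vision features (hypothetical loss; assumes matching token counts)."""
    pred = hidden @ proj  # (tokens, d_target)
    pred = pred / np.linalg.norm(pred, axis=-1, keepdims=True)
    tgt = target / np.linalg.norm(target, axis=-1, keepdims=True)
    return float(1.0 - np.mean(np.sum(pred * tgt, axis=-1)))

rng = np.random.default_rng(0)
hidden = rng.normal(size=(16, 128))        # 16 token hidden states from some LLM layer
target = rng.normal(size=(16, 64))         # hypothetical features from e.g. a depth encoder
proj = rng.normal(size=(128, 64)) * 0.01   # hypothetical trainable projector
print(embedding_distill_loss(hidden, target, proj))  # value lies in [0, 2]
```

In training, this term would be added to the usual next-token loss so that gradients push the intermediate representations toward the vision targets.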

Introducing Gaze-LLE, a new model for gaze target estimation built on top of a frozen visual foundation model! Gaze-LLE achieves SOTA results on multiple benchmarks while learning minimal parameters, and shows strong generalization. Paper: arxiv.org/abs/2412.09586

Model merging with SVD to tie the Knots. arxiv.org/abs/2410.19735

Researchers from Georgia Tech and IBM Introduce KnOTS: A Gradient-Free AI Framework to Merge LoRA Models. Researchers from Georgia Tech, IBM Research, and MIT have proposed KnOTS (Knowledge Orientation Through SVD), a novel approach that transforms task-updates of different LoRA…

Model merging with SVD to tie the Knots. Paper: arxiv.org/abs/2410.19735 Code: github.com/gstoica27/KnOTS KnOTS improves the merging of LoRA-finetuned models by aligning their weights using SVD, boosting performance by up to 4.3% across vision and language benchmarks. This approach…
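The posts describe aligning LoRA updates with SVD and then handing them to an existing merging method. The sketch below is one reading of that idea in NumPy, not the authors' code (that lives at github.com/gstoica27/KnOTS). It concatenates the per-task updates ΔW_i = B_i A_i side by side, takes a single SVD so every task shares the basis UΣ, merges the task-specific column blocks of Vᵀ, and maps the result back. The merge step here is a simplified TIES-style rule (trim, elect sign, disjoint mean). The `keep` fraction and the helper names `ties_merge` and `knots_style_merge` are hypothetical.

```python
import numpy as np

def ties_merge(blocks: list[np.ndarray], keep: float = 0.2) -> np.ndarray:
    """Simplified TIES-style merge (hypothetical helper): keep the top `keep`
    fraction of entries by magnitude per task, elect a sign per entry,
    then average only the entries that agree with the elected sign."""
    stacked = np.stack(blocks)  # (n_tasks, rank, d_in)
    trimmed = np.zeros_like(stacked)
    for i, x in enumerate(stacked):
        thresh = np.quantile(np.abs(x), 1.0 - keep)
        trimmed[i] = np.where(np.abs(x) >= thresh, x, 0.0)
    sign = np.sign(trimmed.sum(axis=0))  # elected sign per entry
    agree = (np.sign(trimmed) == sign) & (trimmed != 0)
    counts = agree.sum(axis=0)
    total = np.where(agree, trimmed, 0.0).sum(axis=0)
    return np.divide(total, counts, out=np.zeros_like(total), where=counts > 0)

def knots_style_merge(loras, merge_fn=ties_merge) -> np.ndarray:
    """Align LoRA updates in one shared SVD basis, merge the task-specific
    parts with `merge_fn`, and map back (sketch, not the reference code)."""
    updates = [B @ A for B, A in loras]  # each (d_out, d_in)
    d_in = updates[0].shape[1]
    u, s, vt = np.linalg.svd(np.concatenate(updates, axis=1), full_matrices=False)
    rank = sum(B.shape[1] for B, _ in loras)  # concatenation has rank <= sum of LoRA ranks
    u, s, vt = u[:, :rank], s[:rank], vt[:rank]
    # Column block i of Vt reconstructs task i exactly: Delta W_i = U diag(S) Vt_i
    blocks = [vt[:, i * d_in:(i + 1) * d_in] for i in range(len(loras))]
    return (u * s) @ merge_fn(blocks)  # merged Delta W, shape (d_out, d_in)

rng = np.random.default_rng(0)
d_out, d_in, r = 64, 32, 4
loras = [(rng.normal(size=(d_out, r)), rng.normal(size=(r, d_in))) for _ in range(3)]
delta = knots_style_merge(loras)
print(delta.shape)  # (64, 32); add to the base weight W0 to get the merged layer
```

The `merge_fn` argument is what makes this plug-and-play in spirit: any merging rule that operates on aligned blocks can be swapped in. With a purely linear rule such as averaging, `(u * s) @ mean(blocks)` equals the plain average of the raw updates, so the shared basis only changes the result for nonlinear steps like trimming and sign election.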

Knots to tie and then untie to make it part of my lazzo version .001

I'm on the job market! Please reach out if you are looking to hire someone to work on - RLHF - Efficiency - MoE/Modular models - Synthetic Data - Test time compute - other phases of pre/post-training. If you are not hiring then I would appreciate a retweet! More details👇