Paul Groth

@pgroth

professor - university of amsterdam. thinking: data, links, remixing, knowledge, provenance, espresso. My opinions. Mastodon: @[email protected]

📢📢We have a new data systems faculty position @ITUkbh @dasyaITU. Application deadline: Nov 28. For more information, see the link below. Reach out to me if you have any questions. candidate.hr-manager.net/ApplicationIni…

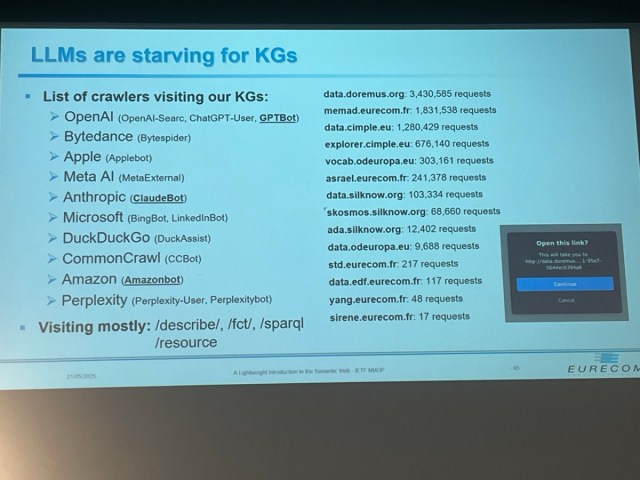

Trip Report: ESWC 2025 #eswc2025 thinklinks.wordpress.com/2025/06/08/tri…

Also the Satellite proceedings for #ESWC2024 are out! Thanks to the whole team @eswc_conf and the General Chair @albertmeronyo Part I: link.springer.com/book/10.1007/9… Part II: link.springer.com/book/10.1007/9… #semanticweb #computerscience #knowledgegraphs #llm

📢 New course alert 📢 I am currently teaching a course on "Language Models and Structured Data" at Institut Polytechnique de Paris. Topics: Language Models, LoRA, Quantization, RAG, Graphs, Tabular Data, Text2SQL Zenodo: zenodo.org/records/146733…

I spent 60+ hours finding 78 tacit knowledge videos. After going viral last year, my LW post is the Schelling point for sharing the type of vid Richard is talking about. If curious, check out the vids and pls share videos of this type in the comments! x.com/RichardMCNgo/s…

Hypothesis: the world's most valuable data is screen captures of outlier competent people going about their work. But very little of this data is recorded, let alone made publicly available. You should seriously consider recording all work you do, even if just for personal use.

Buckle up because we're crashing into the new year with my annual database retrospective: License change blowbacks! @databricks vs. @SnowflakeDB gangwar! @DuckDB shotgun weddings! Buying a college quarterback with database money for your new lover! cs.cmu.edu/~pavlo/blog/20…

In our new @PNASNews paper, across 21 experiments with 23,000+ participants, we identify a critical distortion that shapes decisions involving tradeoffs: we find that people systematically overweight quantified information in such decisions. Paper: pnas.org/doi/10.1073/pn… 🧵

We have an opening for a PhD student investigating concept drift in sensor rich environments. Come work with the awesome @vdegeler werkenbij.uva.nl/en/vacancies/p…

Really proud of @James_G_Nevin - a fantastic PhD student. Was fun to supervise him together with @mhlees. We know that data handling (e.g. data integration and cleaning) can have lots of downstream impacts. Here's evidence.

Congratulations to Dr. @James_G_Nevin who successfully defended his PhD thesis The Ramifications of Data Handling for Computational Models. Check it out: hdl.handle.net/11245.1/d3da6b… A collaboration with @UvA_CSL in the @UvA_IvI co-supervised by @mhlees and @pgroth

Brilliant and engaging talk by Teresa Liberatore at #EKAW2024: Influence Beyond Similarity—A Contrastive Learning Approach to Object Influence Retrieval. Insightful ideas and impactful research!

We're at #EKAW2024 this week across the street @CWInl. We have two papers: one on object influence retrieval & the other on the impact of entity linking. We also have multiple workshop contributions. Info at: indelab.org/news/ @ekawconference

Tomorrow the #EKAW2024 party will really kick off, but the proceedings are already available! Click on the link at event.cwi.nl/ekaw2024/ for temporary free access! #semweb #knowledgeengineering #knowledgemanagement #languagemodels #conference @ekawconference

Fascinating talk by @ioanamanol on dealing with all the data models for data journalism at @iswc_conf #ISWC2024 Very cool use of gittables to retrieve names for entities (cc @MadelonHulsebos)

We're excited to be at #iswc2024 this week. Come talk to us about our work on knowledge graphs, LLMs, tables, and knowledge engineering: @pgroth @bradleypallen @LiseStork @JanCKalo . @iswc_conf @lm_kbc

This is the best paper written so far about the impact of AI on scientific discovery

🚨 What’s the best way to select data for fine-tuning LLMs effectively? 📢Introducing ZIP-FIT—a compression-based data selection framework that outperforms leading baselines, achieving up to 85% faster convergence in cross-entropy loss, and selects data up to 65% faster. 🧵1/8

We have an amazing community around AI and data science here @UvA_Amsterdam @ai4science_lab @sobedsc @BibliotheekUvA

Recap of our 2024 #DataScience Day - 5 events across 5 sites at the university. dsc.uva.nl/content/news/2…

✨#cikm2024 👉CYCLE: Cross-Year Contrastive Learning in Entity-Linking ⏲️Talk: 14:30 – 14:45, Oct 23 (Wed), 4FP29 📍Location: Room 130 😊Big thanks to my collaborators @Congfeng_Cao, @KlimZaporojets and @pgroth! If you're interested, come check out our talk for a discussion!

✨#ecai2024 👉TIGER: Temporally Improved Graph Entity Linker ⏲️Talk: 11:30 – 11:45 AM, Oct 23 (Wed), No. M511 📍Location: Galicia Conference and Exhibition Centre, Hall A 😊Big thanks to my collaborators @Congfeng_Cao @pgroth! If you're interested, come check out our talk!