Paul Soulos

@paulsoulos

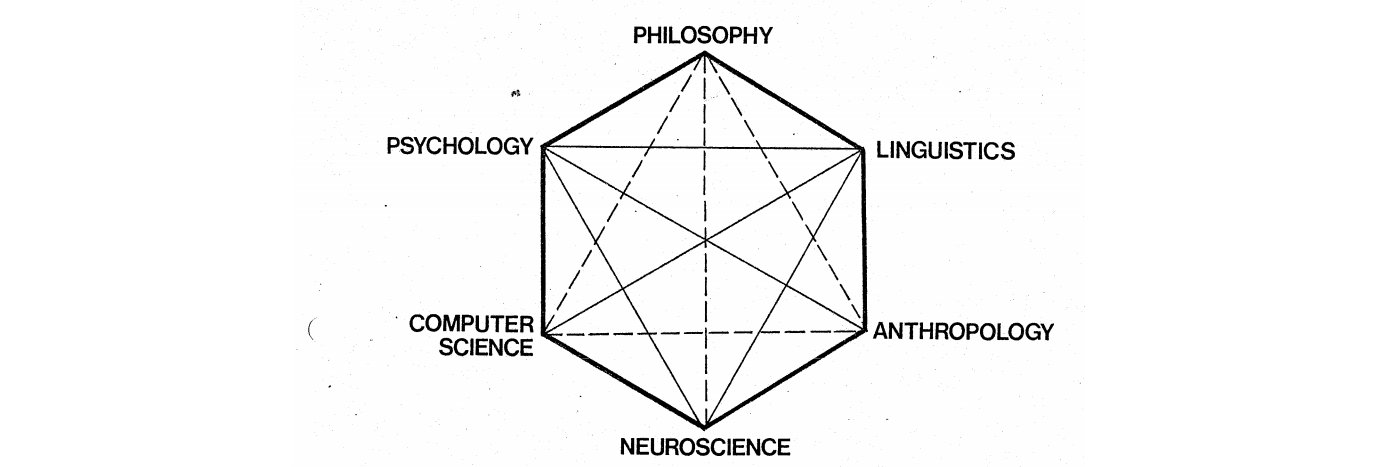

Technical Advisor to the CEO @MicrosoftAI. Previously Neurosymbolic PhD @JhuCogsci, intern @ibmresearch and @msftresearch, and software @fitbit and @Google.

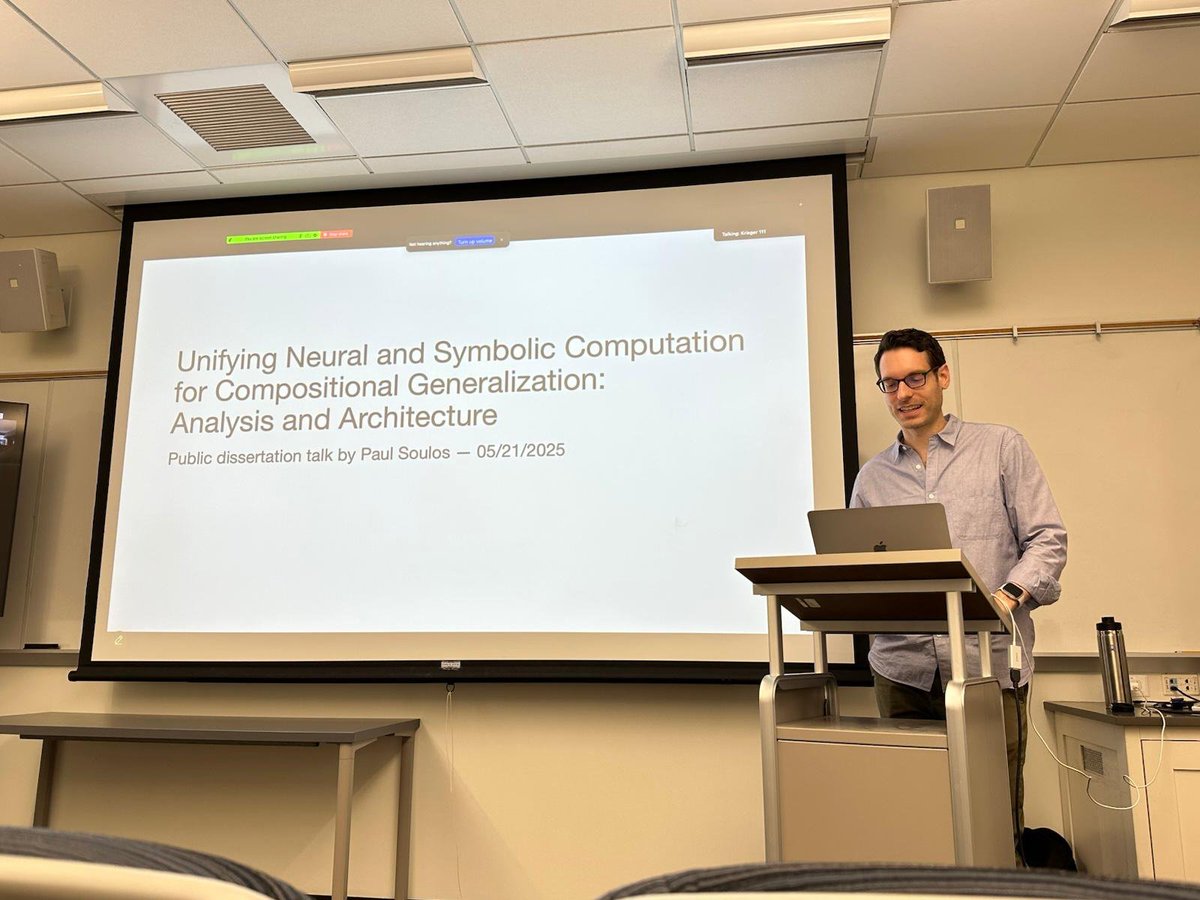

I successfully defended my Ph.D. dissertation and started working at @MicrosoftAI as a Technical Advisor to the CEO! I'm excited to help make Copilot the most empowering, empathetic, and useful AI companion.

r/AmItheAsshole seems perfect as a resource for AI alignment: nuanced moral dilemmas, rich community debates highlighting cultural complexities (albeit U.S.-centric), and reasoning chains that explicitly end in a binary moral verdict.

Can Google please implement a computer-using agent that navigates the unsubscribe web interface on my behalf? The problem space feels pretty well defined.

The speed improvements from NSA are very impressive, and I am trying to figure out why their sparse implementation actually outperforms full attention. My intuition is that the hierarchical computation is more expressive than standard full attention. Do you have any guesses?

🚀 Introducing NSA: A Hardware-Aligned and Natively Trainable Sparse Attention mechanism for ultra-fast long-context training & inference! Core components of NSA: • Dynamic hierarchical sparse strategy • Coarse-grained token compression • Fine-grained token selection 💡 With…

Come visit our poster "MoEUT: Mixture-of-Experts Universal Transformers" on Friday at 4:30 pm in East Exhibit Hall A-C #1907 on #NeurIPS2024. With Kazuki Irie, @SchmidhuberAI, @ChrisGPotts and @chrmanning.

I’m presenting this work at 11a PT today in East Exhibit Hall at poster #4009. Come by and chat!

🚨 Thrilled to share that Compositional Generalization Across Distributional Shifts with Sparse Tree Operations received a spotlight award at #NeurIPS2024! 🌟 I'll present a poster on Tuesday and give an invited lightning talk at the System 2 Reasoning Workshop on Sunday. 🧵👇

"Applied AGI scientist" is a wild job title, considering people have no idea how to even define AGI, let alone what we should apply to create it.

An important distinction that Sutskever makes in this article is that scale is not dead, but “Scaling the right thing matters more now than ever.” Vector symbolic architectures are a promising direction to scale symbolic methods in a fully differentiable manner.

OpenAI and others seek new path to smarter AI as current methods hit limitations reut.rs/3Z1SKIw