Paul Bogdan

@paulcbogdan

Postdoc at @DukePsychNeuro. PhD in Cognitive Neuroscience @UofIllinois

New paper: What happens when an LLM reasons? We created methods to interpret reasoning steps & their connections: resampling CoT, attention analysis, & suppressing attention. We discover thought anchors: key steps that shape everything else. Check out our tool & unpack CoT yourself 🧵
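A minimal sketch of the sentence-level attention idea, under stated assumptions. It is not the paper's code. It assumes you already have one head's token-by-token attention matrix and the token span of each CoT sentence. It averages that matrix into a sentence-by-sentence matrix and then scores how much attention each sentence receives from later sentences. The function names `sentence_attention` and `received_attention` are hypothetical.

```python
import numpy as np


def sentence_attention(token_attn: np.ndarray, spans: list[tuple[int, int]]) -> np.ndarray:
    """Average a token-by-token attention matrix into a sentence-by-sentence matrix.

    token_attn[q, k] is the attention weight from query token q to key token k
    for a single (layer, head). spans[i] = (start, end) is the half-open token
    range of sentence i. Hypothetical helper, not the paper's implementation.
    """
    n = len(spans)
    out = np.zeros((n, n))
    for i, (qs, qe) in enumerate(spans):
        for j, (ks, ke) in enumerate(spans):
            # Mean attention from tokens of sentence i to tokens of sentence j.
            out[i, j] = token_attn[qs:qe, ks:ke].mean()
    return out


def received_attention(sent_attn: np.ndarray) -> np.ndarray:
    """Mean attention each sentence receives from all later sentences.

    The last sentence has no later sentences, so its score is NaN.
    """
    n = sent_attn.shape[0]
    scores = np.full(n, np.nan)
    for j in range(n - 1):
        scores[j] = sent_attn[j + 1:, j].mean()
    return scores
```

With causal (lower-triangular) attention, entries where a sentence would attend to a later one are zero. That is why the received score only looks down each column.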

⚓ Our paper now has a LessWrong post! Standard mech interp studies a single token's generation, but CoTs are sequences of reasoning steps w/ many tokens. We decompose CoT into sentences & propose methods to measure their importance. 👉 Read our vision: lesswrong.com/posts/iLHe3vLu…
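The resampling approach to sentence importance can be sketched as follows, again under simplifying assumptions. Suppose that for one sentence you have already collected final answers from two sets of rollouts: rollouts that keep the sentence and rollouts resampled from just before it. The sketch scores the sentence by the KL divergence between the two answer distributions, using add-one smoothing. The function names, the smoothing, and this exact scoring rule are illustrative assumptions, not the paper's procedure.

```python
import math
from collections import Counter


def answer_distribution(answers, support, alpha=1.0):
    """Smoothed categorical distribution over final answers (add-alpha smoothing)."""
    counts = Counter(answers)
    total = len(answers) + alpha * len(support)
    return {a: (counts[a] + alpha) / total for a in support}


def resampling_importance(kept_answers, resampled_answers, alpha=1.0):
    """KL(P_kept || P_resampled) over final answers for one CoT sentence.

    kept_answers: final answers from rollouts that keep the sentence.
    resampled_answers: final answers from rollouts regenerated from just before it.
    A larger value means that keeping the sentence shifts the answer distribution more.
    """
    support = sorted(set(kept_answers) | set(resampled_answers))
    p = answer_distribution(kept_answers, support, alpha)
    q = answer_distribution(resampled_answers, support, alpha)
    return sum(p[a] * math.log(p[a] / q[a]) for a in support)
```

If the sentence does not matter, both sets of rollouts should reach similar answers and the score should be near zero.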

1/6: Emergent misalignment (EM) is when you train on, e.g., bad medical advice and the LLM becomes generally evil. We've studied how; this update explores why. Can models just learn to give bad advice? Yes, easy with regularisation. But it’s less stable than general evil! Thus EM

This is neat. CoT faithfulness work illustrates how information can silently nudge a CoT trajectory without ever being mentioned. However, the work here shows that CoT monitoring is far from useless. Rather, it appears really promising for detecting unwanted computations.

Is CoT monitoring a lost cause due to unfaithfulness? 🤔 We say no. The key is the complexity of the bad behavior. When we replicate prior unfaithfulness work but increase complexity—unfaithfulness vanishes! Our finding: "When Chain of Thought is Necessary, Language Models…

This is neat. So presumably English CoT has lots of filler words/artifacts that don't do meaningful computation. Surely English still has some benefits due to its ubiquity in training. As OpenAI and GDM hide their thinking tokens, maybe they're running broken-English CoT models.

New paper! Can reasoning in non-English languages be token-efficient and accurate? We evaluate this across 3 models, 7 languages, and 4 math benchmarks. Here’s what we found 🧵 (1/n)

Thrilled to see a news piece by @ScienceMagazine on my recent paper. By analyzing p-values across >240k papers, the study suggests that the rate of statistically questionable findings in psychology has starkly declined since the replication crisis began science.org/content/articl…
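The tweet does not say which metric the paper uses. As an illustration only, one common heuristic for flagging questionable results from reported p-values is the share of significant p-values that are "fragile", i.e. between .01 and .05. Well-powered studies of true effects tend to produce p-values clustered well below .01, so a high fragile share can hint at low power or p-hacking. The cutoffs and the name `fragile_share` are assumptions here.

```python
import math


def fragile_share(p_values, alpha=0.05, strong=0.01):
    """Share of significant p-values (p < alpha) that fall in [strong, alpha).

    Returns NaN if no p-value is significant. Cutoffs are the conventional
    .05 and .01, assumed for illustration.
    """
    significant = [p for p in p_values if p < alpha]
    if not significant:
        return math.nan
    fragile = [p for p in significant if p >= strong]
    return len(fragile) / len(significant)
```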

I really like this paper. We showed that people have better memory for what came after a negative stimulus than for what came before it. Notably, this is the first relational memory study (of any kind) to demonstrate a forward/backward temporal dissociation in encoding.

New Beckman-led research suggests the moments that follow a distressing episode are more memorable than those leading up to it. 🧠 This may improve how we evaluate eyewitness testimonies, treat PTSD, and combat memory decline. 🗞️bit.ly/3S6T1VY 📒doi.org/10.1080/026999…

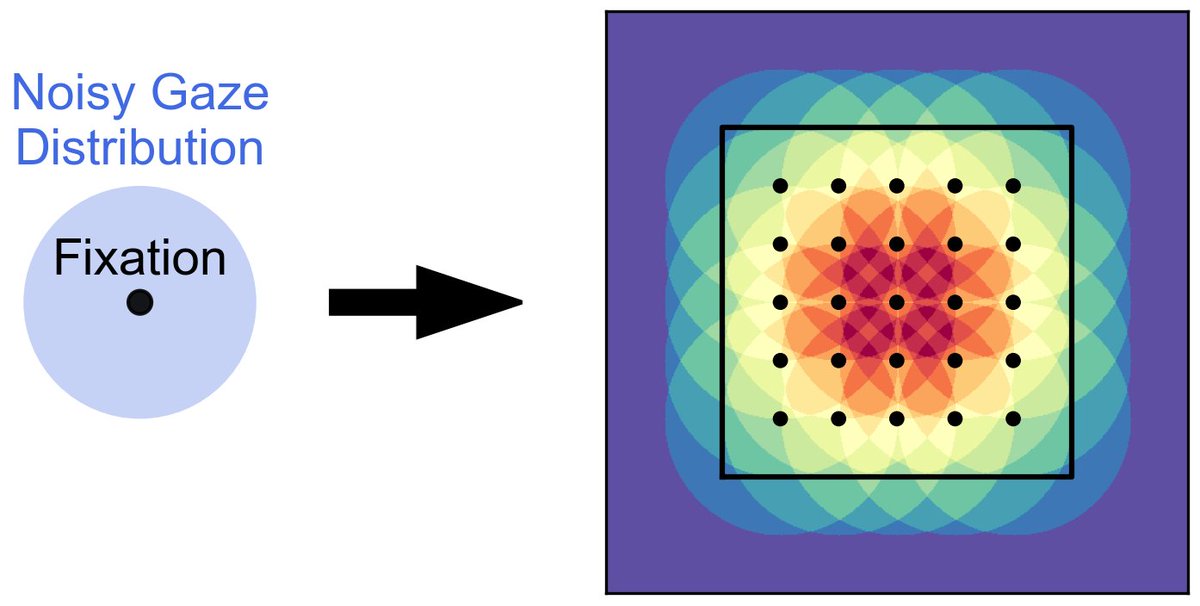

We just got a paper accepted on the use of webcams for eye-tracking research. Webcam eye-tracking is noisy, and we show how this can create biases in the data. See this picture showing how a uniform grid of fixations gets recorded as a radial pattern (link.springer.com/article/10.375…).

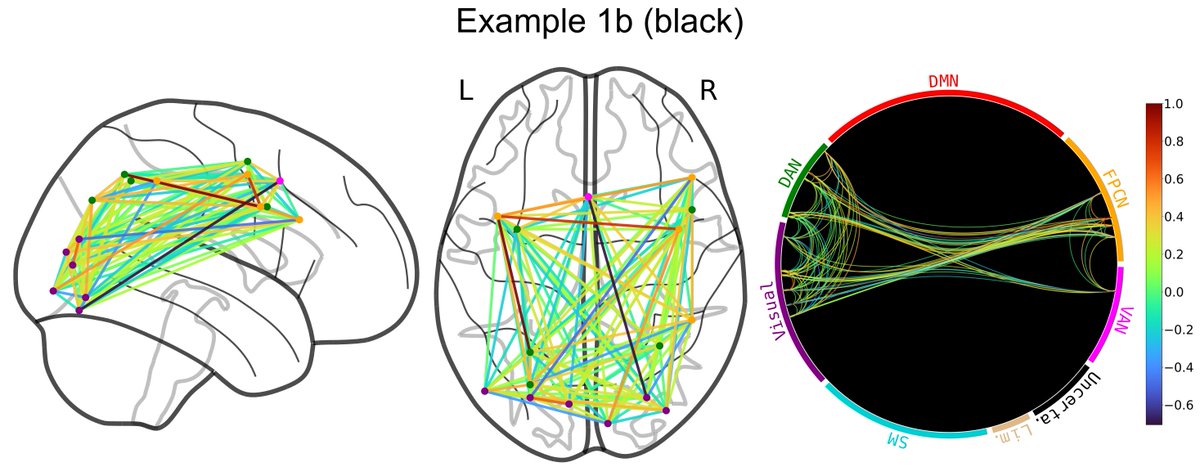

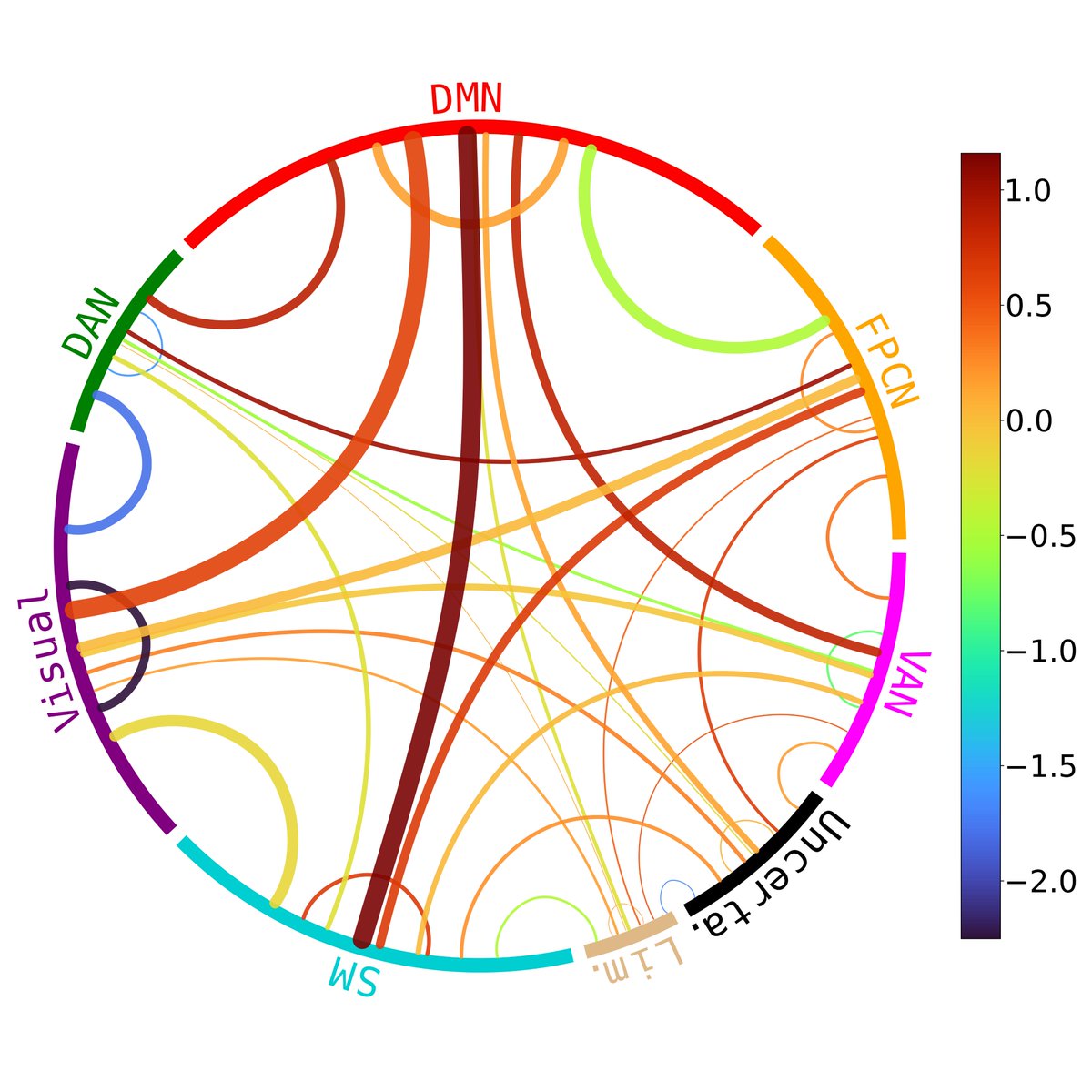

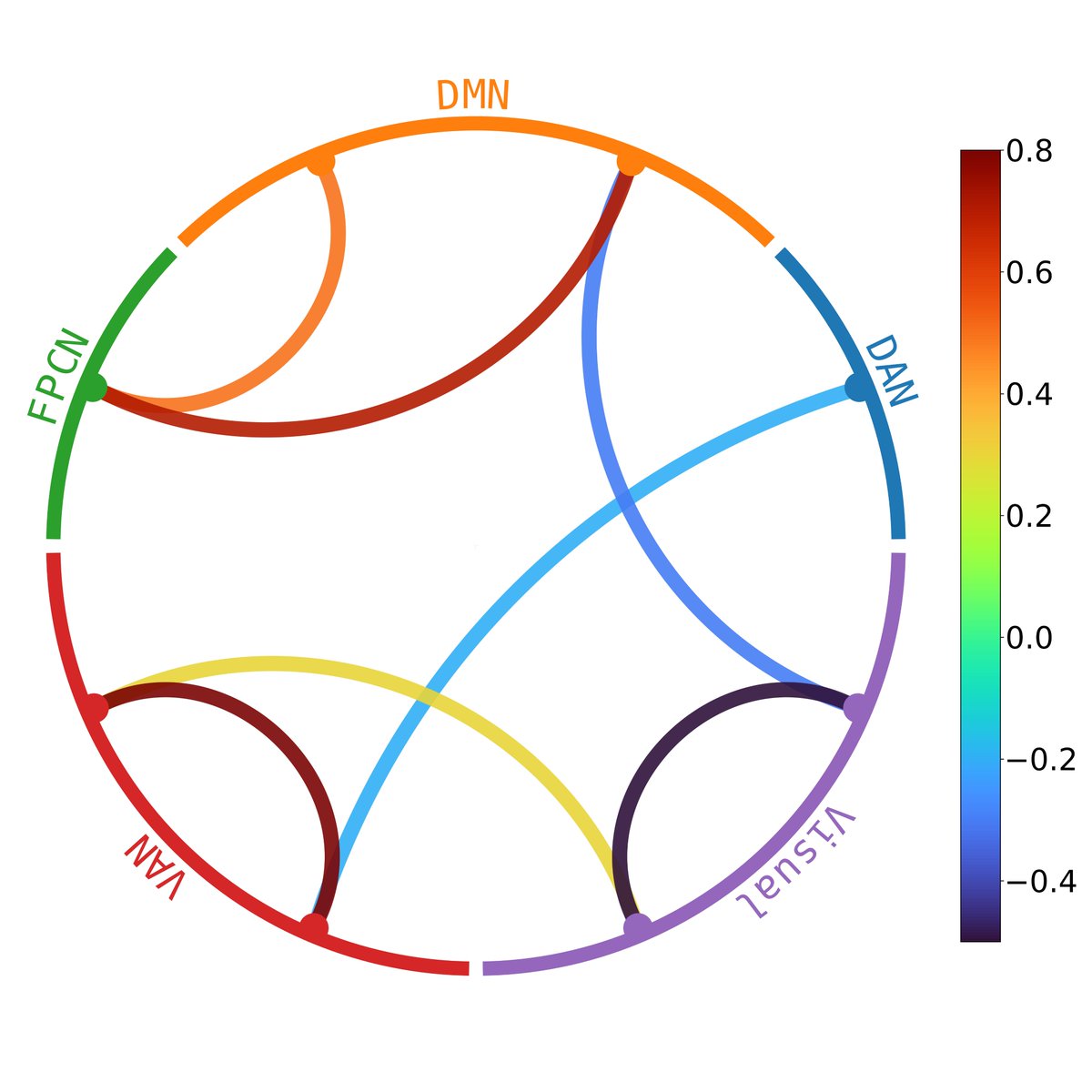

I made a Python package for visualizing brain networks and functional connectivity patterns. I think the plots look nice. github.com/paulcbogdan/Ni…
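Functional connectivity is commonly estimated as the Pearson correlation between regional time series. This minimal sketch builds such a matrix with NumPy and keeps only edges above an arbitrary |r| threshold, which is the kind of input a network-plotting tool might take. It does not use or reproduce the linked package's API. The function name `connectivity_edges` and the default threshold are hypothetical.

```python
import numpy as np


def connectivity_edges(timeseries: np.ndarray, threshold: float = 0.5):
    """Correlation-based functional connectivity with a simple edge threshold.

    timeseries: array of shape (n_timepoints, n_regions).
    Returns the (n_regions, n_regions) correlation matrix with a zeroed diagonal,
    plus a list of (region_i, region_j, r) edges with |r| >= threshold.
    """
    fc = np.corrcoef(timeseries, rowvar=False)  # columns are regions
    np.fill_diagonal(fc, 0.0)                    # drop self-connections
    i, j = np.triu_indices_from(fc, k=1)         # each region pair once
    weights = fc[i, j]
    keep = np.abs(weights) >= threshold
    edges = list(zip(i[keep].tolist(), j[keep].tolist(), weights[keep].tolist()))
    return fc, edges
```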

We can learn a lot by looking at how people handle success and hardship. Can the positive or negative tone of post-game Reddit posts help us find the saltiest NFL fanbases after wins, losses and more? ✍️: @paulcbogdan fansided.com/2022/08/25/sal…

Can the positive or negative tone of Reddit posts help us find the saltiest NBA fanbase after wins, losses and more? 📝: @paulcbogdan fansided.com/2022/05/12/nba…

Rock @psychopy socks 🧦 We're giving away two pairs of themed socks to keep your feet cosy as you build experiments in #PsychoPy & celebrate the publication of the 2nd edition of the book. To enter: follow us and RT this tweet. You have 48 hours.