snimu

@omouamoua

LLM ideas & experiments

Very cool that @PrimeIntellect 8xH100 starts with negative costs & uptime to make up for spin-up times!

New blogpost and demo! I optimized a Flappy Bird world model to run locally in my web browser (30 FPS) (demo and blog in replies)

Holy shit, I spent the last three weeks and hundreds of dollars trying my hand at modded-nanogpt and just found out that, due to a copy-and-paste error, I had reduced the batch_size by 25% vs. the original...

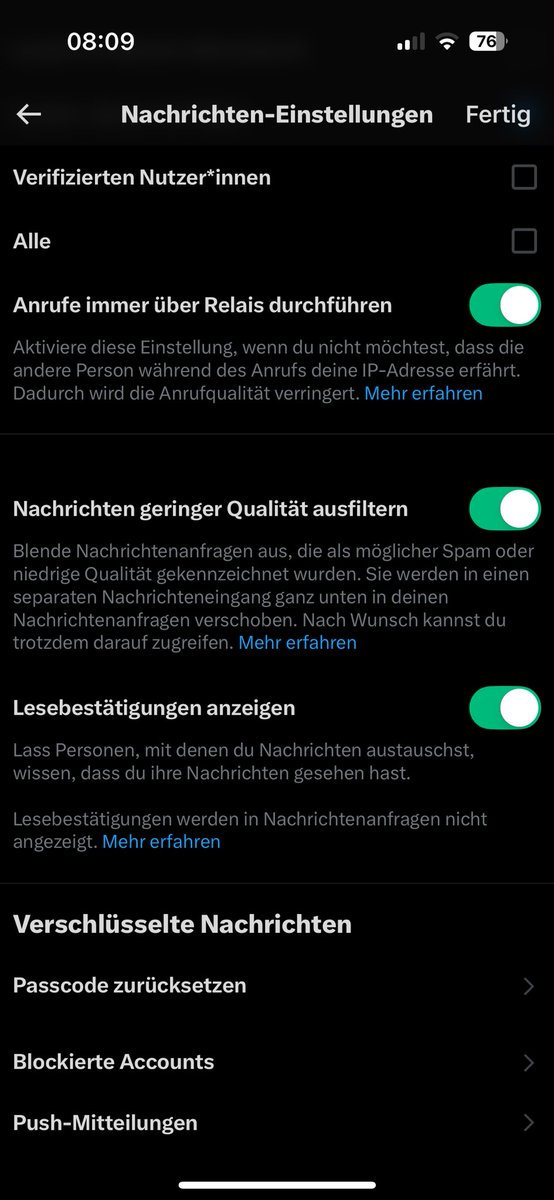

Wait you can just activate “filter out messages of low quality” (or whatever it’s called in English) in your DM settings and the torrent of spam will stop???

Very interesting. Decomposing models into small components might allow for interesting parameter initialization strategies in the future. Or “post-training” by directly weighting different circuits in existing models. Or characterizing training dynamics. Etc.

(1/7) New research: how can we understand how an AI model actually works? Our method, SPD, decomposes the *parameters* of neural networks, rather than their activations - akin to understanding a program by reverse-engineering the source code vs. inspecting runtime behavior.

For the past year, I've been heads-down building the answer. A full-stack, vertically integrated AI platform for Apple Silicon, built from first principles. PIE: A vLLM-equivalent inference engine. PSE: A 100% reliable structuring engine.