Neuroexplicit Models of Language, Vision, Action

@neuroexplicit

We combine neural with symbolic and other models to improve over both. Up to 24 PhD students with advisors at Saarland U, Max Planck Institutes, DFKI, CISPA.

1/n🤖🧠 New paper alert!📢 In "Assessing Episodic Memory in LLMs with Sequence Order Recall Tasks" (arxiv.org/abs/2410.08133) we introduce SORT as the first method to evaluate episodic memory in large language models. Read on to find out what we discovered!🧵

Final opportunity: Multiple PhD students to start in Fall 2025. Combine neural and symbolic/interpretable models of language, vision, and action, and work with world-class advisors at @Saar_Uni, MPI Informatics, @mpi_sws, @CISPA, @DFKI. Details: neuroexplicit.org/jobs/

Now hiring: Multiple PhD students to start in fall 2025, for research on combining neural and symbolic/interpretable models of language, vision, and action. Work with world-class advisors at @Saar_Uni, MPI Informatics, @mpi_sws, @CISPA, @DFKI. Details: neuroexplicit.org/jobs/

Now hiring: Twelve (!) PhD students to start in fall 2025, for research on combining neural and symbolic/interpretable models of language, vision, and action. Work with world-class advisors at @Saar_Uni, MPI Informatics, @mpi_sws, @CISPA, @DFKI. Details: neuroexplicit.org/jobs

It was fantastic to have @tetraduzione as a guest at our fall retreat this year. He taught us a beautiful and effective neurosymbolic approach to factuality in LLMs and brought such a positive spirit. We look forward to seeing you again soon!

Spending a great week in Saarland for the @neuroexplicit retreat of @SIC_Saar. Great talks, posters and interactions with #PhD students on #neurosymbolic #nesy #AI #ML (and boardgames!). Thanks @alkoller @slusallek @IValeraM for having me!

Ellie Pavlick @Brown_NLP is visiting us for three months as a @dfg_public Mercator Fellow. We are thrilled to have her with us and look forward to many fruitful research collaborations.

Now hiring: Three PhD students and one postdoc, as part of a research team of ~30, combining neural and symbolic/interpretable models of language, vision, action, and ML. Work with advisors at @Saar_Uni, MPI Informatics, @mpi_sws_, @CISPA, @DFKI. Details: neuroexplicit.org/jobs/

Now hiring: Six PhD students and one postdoc, for research on combining neural and symbolic/interpretable models of language, vision, and action, and ML. Work with world-class advisors at @Saar_Uni, MPI Informatics, @mpi_sws_, @CISPA, @DFKI. Details: neuroexplicit.org/jobs/

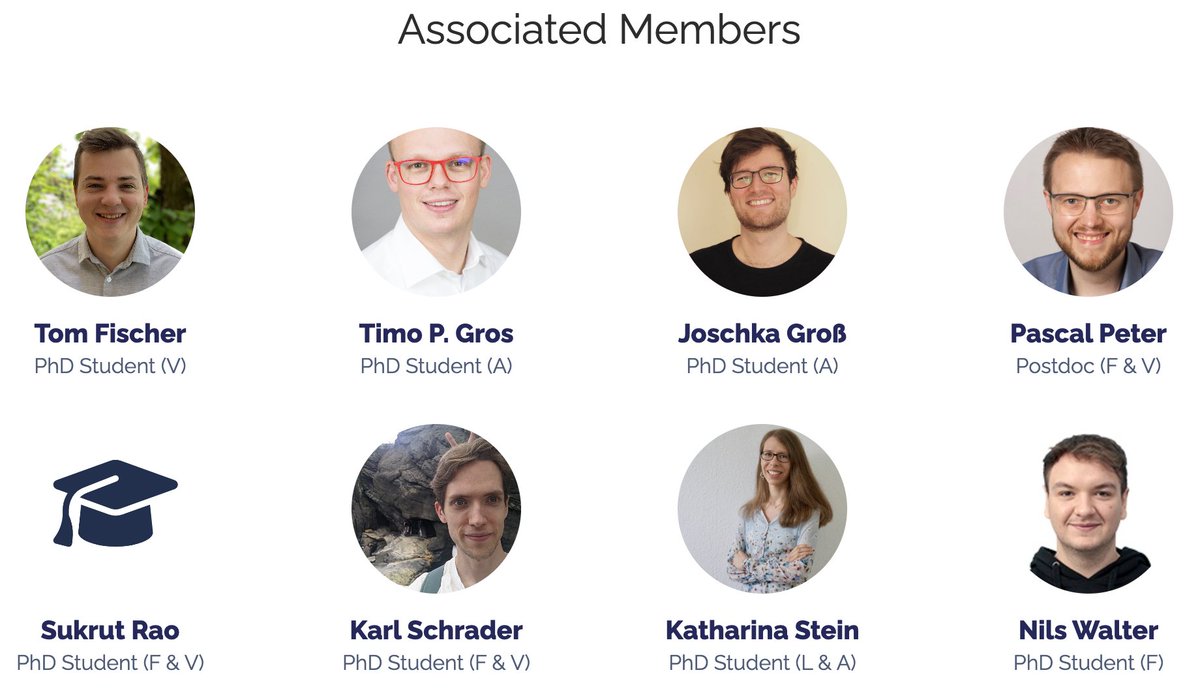

We welcome our first eight associated members to the RTG! They are PhD students and postdocs at @SIC_Saar and @CISPA working on neuroexplicit models of language, vision, and action, and will contribute their own research and ideas to the work of the RTG-funded students.

Welcome, Hamza Mughal, as a PhD student starting in October! Hamza holds a BSc from NUST Pakistan and is about to finish his MSc at @SIC_Saar. He plans to work on neuroexplicit models for multimodal gesture synthesis. We look forward to having you! m-hamza-mughal.github.io

We welcome Cameron Braunstein, who will join us as a PhD student in October. With a BSc from @BrandeisU and an MSc from @SIC_Saar, where he worked on quantum computing for optical flow, Cameron will work on neurosymbolic models of vision, language, and neuroscience. cvmp.cs.uni-saarland.de/?page_id=145#c…

Welcome, @SwetaMahajan1! We are pleased to announce that Sweta Mahajan will join us from IIT Delhi as a PhD student to work on neurosymbolic models at the intersection of vision and language. Looking forward to working with you! swet96.github.io

Now hiring: Six PhD students and one postdoc for research on combining neural and symbolic/interpretable models of language, vision, and action. Work with world-class advisors at @Saar_Uni, MPI Informatics, @mpi_sws, @CISPA, @DFKI. Details: neuroexplicit.org/jobs

We are funded! Job ads for the first six PhD students (will grow to 24), one postdoc, a coordinator, and a secretary will follow. Looking forward to five years of research on combining neural and human-interpretable (e.g. symbolic) models.

The RTG 'Neuroexplicit Models of Language, Vision, and Action' seeks to combine previous approaches in AI in order to create systems that are safer, more reliable, and easier to interpret. sic.link/rtg2023 @LstSaar @Saar_Uni @dfg_public @maxplanckpress @DFKI @CISPA